Introduction:

Mark Zuckerberg, the founder of Facebook, once wrote in an open letter that Facebook exists to build a global community, deepen the connections between people, and let anyone share anything (Wagner & Swisher, 2017). But is this vision realistic? The three major US Internet giants, Facebook, Google and Twitter, have been investigated by the US government over terrorist content, and Google has been investigated again because child pornography could be found through its search engine (Block, 2018). At the government hearings, the companies acknowledged the problem and promised to put suitable content review mechanisms in place. They all used the same rhetoric: technical review built by engineers, supplemented by expert review (Block, 2018). In fact, however, much of the manual review is done not by the content experts the giants describe but by censors outsourced to the third world. The documentary The Cleaners records the lives of one such group of third-world internet censors, at a company in Manila, the Philippines, that specializes in reviewing videos and pictures posted on Internet platforms. Every suspicious picture or video flagged by the automated monitoring system must be confirmed by these reviewers. In the footage, the censors repeat the same two actions over and over: delete or ignore (Block, 2018). That is all they do. They are the Internet’s cleaners, ignored by the world because they are not allowed to talk about their work. As the censors put it, telling outsiders would amount to a leak that could affect the advertising revenue of the Internet giants, and they would risk being fired. The content of their daily work is another reason it is hard for them to confide in others: they face another world, almost a hell on earth, full of pornography, violence and terrorism that amounts to mental torture.

The Trailer of The Cleaners:

This blog post argues that as the Internet develops, publishing content on online platforms is becoming freer, while the platforms’ content review mechanisms face increasingly difficult challenges. The automated review tools the platforms have introduced are limited in many respects, so large numbers of human reviewers are needed to make sure the content on a platform has been screened correctly. In this work, which demands precision, online censors are exposed to ever more explicit and cruel pictures and videos, and they suffer unprecedented harm, both physical and mental.

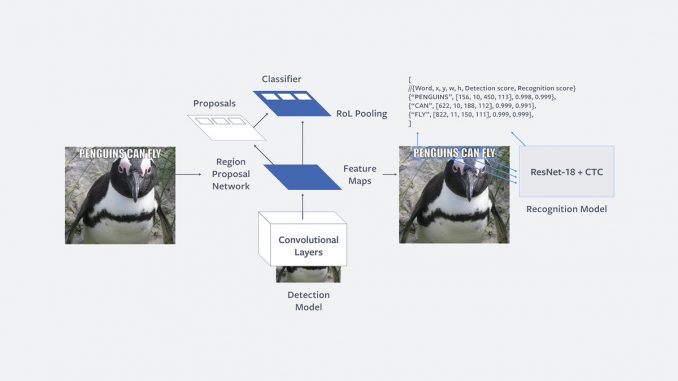

The limitations of automated tools

Although Internet platforms are increasingly adopting automated tools for content moderation, these tools are limited in many ways (Singh, 2019). How accurately a particular tool detects and removes online content depends largely on the type of content it was trained to handle (Singh, 2019). Developers have been able to build and operate tools for specific types of content, such as child sexual abuse material (CSAM) and copyright-infringing content, with error rates low enough for the tools to be widely adopted by platforms large and small (Singh, 2019). This is possible because large corpora exist for these categories and the parameters of the categories are clearly defined, so tools can be trained on them (Singh, 2019). For extremist content and hate speech, however, speech carries different nuances across groups and regions, and context is critical to understanding whether a piece of content should be removed. Developing comprehensive databases for these categories is therefore challenging (Singh, 2019). Even more difficult is developing and operating a tool that can be reliably applied across different groups, regions and subtypes of speech. These challenges limit the reliability and accuracy of automated review tools in identifying content across platforms (Singh, 2019).

First, automated content review tools make errors when interpreting human language in context, which limits the accuracy of their decisions (Singh, 2019). Because human speech is not objective while automated moderation is inherently mechanical, these tools cannot grasp the nuances and shifts in context that exist in human speech (Singh, 2019). For example, liking someone’s photos excessively or using certain slang terms may be considered disrespectful on one platform or in one region of the world (Singh, 2019), yet the same actions and words may mean something completely different on another platform or in another community (Singh, 2019). Automated tools are also limited in their ability to draw contextual insights from content (Singh, 2019). An image recognition tool can identify nudity in a piece of content, such as breasts, but it is unlikely to tell whether the post is pornographic or shows breastfeeding, so further human judgment is required. In addition, automated content moderation tools can quickly become outdated (Singh, 2019). On Twitter, for example, members of the LGBTQ+ community found a severe lack of search results for hashtags such as #gay and #bisexual, which raised questions about censorship on the platform (Singh, 2019). After an investigation, the platform said the cause was an outdated algorithm that had not been updated in time and that erroneously identified posts with these tags as potentially offensive (Singh, 2019). There are many similar cases. They show that platforms need to update their algorithmic tools continually, and that automated decision-making needs to take context into account when judging whether posts with such hashtags are objectionable (Singh, 2019).
So far, however, AI researchers have been unable to construct datasets comprehensive enough to account for the vast fluidity and variability of human language and expression (Singh, 2019). As a result, these automated tools cannot be reliably applied across different cultures and contexts, because they cannot effectively account for the political, cultural, economic, social and power dynamics that shape how individuals express themselves and interact with each other (Singh, 2019).
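The context problem described above can be illustrated with a minimal Python sketch of a hypothetical, context-blind keyword filter, similar in spirit to the outdated algorithm in the Twitter hashtag case. The blocklist, the function names and the example posts are all invented for illustration; real platform systems are far more complex and are not public.

```python
# A hypothetical, deliberately naive keyword filter (not any platform's real system).
# It flags a post if any of its words appears in a blocklist, ignoring context entirely.

# Illustrative "outdated" blocklist, echoing the #gay / #bisexual case.
BLOCKLIST = {"gay", "bisexual"}


def tokens(post: str) -> set[str]:
    """Lowercase the post and strip '#' and common punctuation from each word."""
    return {word.strip("#.,!?:;") for word in post.lower().split()}


def is_flagged(post: str) -> bool:
    """Flag the post if it shares at least one token with the blocklist."""
    return not BLOCKLIST.isdisjoint(tokens(post))


posts = [
    "Proud to march with friends at Pride today #gay #bisexual",
    "Support group for #bisexual teens meets on Friday",
    "Great weather for a picnic",
]

for post in posts:
    print(is_flagged(post), "|", post)
```

The first two posts are flagged and the third is not, even though both flagged posts are supportive. The filter sees only the words, not who is using them or why, so simply updating the list would not solve the problem on its own; the decision still needs the context that, as Singh (2019) notes, these tools lack.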

Source: https://www.theverge.com/2019/2/27/18242724/facebook-moderation-ai-artificial-intelligence-platforms

Second, automated tools can be biased, as algorithmic decision-making is in many industries. Decisions based on automated tools, including in content moderation, may further marginalize and censor groups that already face disproportionate bias and discrimination both online and offline (Singh, 2019). For example, natural language processing (NLP) tools are often built to parse English text and are less accurate on text in other languages. This can have detrimental results for non-English speakers, especially speakers of languages with little presence on the Internet, because the corpora on which models are trained are less comprehensive for those languages (Singh, 2019). Given that many users of major internet platforms live outside English-speaking countries, the use of such automated tools should be limited when making content moderation decisions that apply globally (Singh, 2019). These tools are also ineffective at handling demographic differences that arise from differences in language use. Furthermore, researchers themselves hold biases about what constitutes hate speech. Testing for inter-coder reliability can mitigate this, but it is unlikely to overcome the majority view (Singh, 2019).
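Inter-coder reliability is commonly measured with Cohen’s kappa, which compares how often two coders agree with how often they would agree by chance: kappa = (p_o - p_e) / (1 - p_e). The text does not name a specific measure, so kappa is used here as one common choice. The sketch below assumes two hypothetical coders who labeled the same ten posts as hate speech (1) or not (0); the labels are invented for illustration.

```python
from collections import Counter


def cohens_kappa(a: list[int], b: list[int]) -> float:
    """Cohen's kappa for two coders who labeled the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("coders must label the same, non-empty set of items")
    n = len(a)
    # Observed agreement: share of items both coders labeled identically.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: for each label, multiply the two coders' label frequencies, then sum.
    count_a, count_b = Counter(a), Counter(b)
    p_e = sum((count_a[label] / n) * (count_b[label] / n) for label in set(a) | set(b))
    if p_e == 1:
        # Both coders used one identical label for every item; treat as perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels: 1 = hate speech, 0 = not hate speech.
coder_a = [1, 1, 0, 0, 1, 0, 0, 1, 0, 0]
coder_b = [1, 0, 0, 0, 1, 0, 1, 1, 0, 0]

print(round(cohens_kappa(coder_a, coder_b), 3))
```

Here the coders agree on 8 of 10 posts, but kappa is only about 0.58 once chance agreement is removed. A high kappa shows only that coders agree with each other: if they share the same bias, the score stays high, which is why reliability testing cannot overcome a majority view.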

Source: https://medium.com/microsoftazure/7-amazing-open-source-nlp-tools-to-try-with-notebooks-in-2019-c9eec058d9f1

Finally, and most concerning, is the lack of transparency around the algorithmic decisions of the automated tools used to curate content on platforms (Singh, 2019). These algorithms are called black boxes because little is known about how they are coded, what datasets they are trained on, how they identify correlations and make decisions, and how reliable and accurate they are (Singh, 2019). At present, some internet platforms disclose only limited information about the extent to which they use automated tools to detect and remove content (Singh, 2019). Some researchers also argue that transparency does not necessarily lead to accountability in this area (Singh, 2019). In the broader content moderation space, it is increasingly becoming best practice for tech companies to publish transparency reports that show the scope and volume of the content moderation requests they receive and the amount of content they actively remove (Singh, 2019). There, transparency around these practices can create accountability for how platforms manage user expression (Singh, 2019). For automated tools, however, researchers have suggested that looking under the hood of the black boxes would yield large volumes of incomprehensible data requiring significant analysis to extract insights, and that companies would generally not explain how decisions were made (Singh, 2019).

Necessary Work That Brings Harm

Because automated moderation tools have limited reliability and accuracy, large numbers of human censors are needed to moderate platform content. Censors go through various kinds of training before they start work: those responsible for pornographic content must memorize many sexual codes and terms so that they can identify hidden content, and they must watch large numbers of adult videos (Block, 2018). For this group of internet censors, this alone is mental torture. Under the standards for pornographic content, anything showing nude genitals is deleted (Block, 2018). This is a rigid rule, with no need to judge further whether the content is actually pornographic. Because the Internet has almost no barriers to entry, such a standard on the one hand keeps out harmful material, especially material unsuitable for children, but on the other hand also removes important information. The standard has therefore become controversial (Block, 2018). For example, an artist painted a nude portrait of former US President Trump and uploaded it to the Internet (Bishop, 2018). In the painting, Trump’s body is bloated but his genitals are very small, satirizing him as a president with slogans but no real strength. To the artist, this was art used to express an opinion. On the other side of the screen, however, censors deleted the painting as a personal insult to Trump, and soon afterwards the artist’s account was blocked. Other removed content is newsworthy by nature. A photo of the naked body of a dead child was removed under the rigid rule against exposed genitals, yet for refugees fleeing Syria the photo was their message to the whole world (Block, 2018), an accusation against the weapons of the war in Syria. Some NGO members said such shocking content is necessary as an indictment of the war.
So when members of the organization find powerful content on the Internet, their first reaction is to download it, because it may soon be gone. They need this evidence to show the world the truth and the evil that has happened in Syria (Block, 2018).

Source: https://www.rottentomatoes.com/m/the_cleaners

In addition, the censorship mechanisms of online platforms sometimes vary by region (Block, 2018). To obtain a license to operate in a region, a platform may reach a private agreement with the local government and handle politically sensitive content accordingly, especially in countries with strict speech controls (Block, 2018). In Turkey, for example, some videos do not violate the platform’s policy but do violate Turkish law. The platform therefore had to deal with videos and speech that touched the Turkish government’s sensitive points, blocking them for Turkish users based on their IP addresses (Block, 2018). As a result, even the platforms themselves find it difficult to define their standards of judgment. Moreover, platforms often do not know exactly what content their censors approve or delete, yet it is these people who determine what platform users, and the world, see on the Internet (Block, 2018). The job of Internet censor has almost no entry requirements. Because the work involves viewing large amounts of psychologically damaging content, it is mostly outsourced to the third world and taken up by people from poor families at the bottom of society. They earn $1 an hour and review 25,000 items a day, watching Internet garbage that poisons the mind. At first, the responsibility of keeping the Internet clean for hundreds of millions of users makes the censors feel that the job is very rewarding (Block, 2018). But the mental harm they suffer is far out of proportion to their low wages. Studies have shown that watching pornographic and violent videos for long periods is very damaging to the human brain, leaving people numb and making such content seem normal. Although the outsourcing companies do set up mental health departments, this does not seem to make much difference (Block, 2018).
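The IP-based blocking described above can be sketched in Python as a per-country check, assuming the platform withholds a video only for viewers whose IP address maps to Turkey rather than removing it worldwide. The lookup table, the video ID and the function names are hypothetical; real platforms rely on large IP geolocation databases, and the address ranges below are reserved documentation networks used only as placeholders.

```python
import ipaddress
from typing import Optional

# Hypothetical IP-to-country table. The ranges are reserved documentation
# networks (RFC 5737), standing in for a real geolocation database.
COUNTRY_RANGES = {
    ipaddress.ip_network("203.0.113.0/24"): "TR",
    ipaddress.ip_network("198.51.100.0/24"): "DE",
}

# Hypothetical per-video list of countries where the video is withheld.
WITHHELD_IN = {"video-123": {"TR"}}


def country_of(ip: str) -> Optional[str]:
    """Return the country code for an IP address, or None if it is not in the table."""
    addr = ipaddress.ip_address(ip)
    for network, country in COUNTRY_RANGES.items():
        if addr in network:
            return country
    return None


def can_view(video_id: str, viewer_ip: str) -> bool:
    """Return False if the video is withheld in the viewer's apparent country."""
    country = country_of(viewer_ip)
    return country not in WITHHELD_IN.get(video_id, set())


print(can_view("video-123", "203.0.113.7"))   # viewer appears to be in Turkey
print(can_view("video-123", "198.51.100.7"))  # viewer appears to be in Germany
```

The first call prints False and the second prints True: the video stays up for everyone else and is hidden only from viewers who appear to be in Turkey. Because the outcome depends on where the viewer is, the same video can be acceptable in one country and blocked in another, which is part of why the platforms struggle to define a single standard.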

Conclusion

With the rise and development of social media, the Internet’s role in accelerating conflict has become more and more obvious. Today, social media can influence national politics and ethnic disputes (Block, 2018). People can publish any information on the Internet and come to understand the world through fragmented information, which makes it easy for opinions and speech to turn into emotional expression and to divide people into “us” and “our enemies”. Social platforms have become accelerators that fuel conflict and even mislead audiences. When hate speech spreads freely on social media platforms and eventually turns into violent conflict, the platforms’ censorship mechanisms can stop it temporarily, but they are not a long-term solution. And when network censors clean up the network environment, what standard will they use to judge the value of information?

Reference list:

Abele, R. (2018, November 21). Review: Chilling documentary “The Cleaners” explores the costs of a corporately sanitized Internet. Los Angeles Times. https://www.latimes.com/entertainment/movies/la-et-mn-the-cleaners-review-20181120-story.html

Bishop, B. (2018, January 21). The Cleaners is a riveting documentary about how social media might be ruining the world. The Verge. https://www.theverge.com/2018/1/21/16916380/sundance-2018-the-cleaners-movie-review-facebook-google-twitter

Block, H. (Director). (2018). The Cleaners [Documentary]. Grifa Filmes, WDR, NDR, rbb, VPRO and I Wonder Pictures.

Singh, S. (2019). Everything in moderation. New America.

Wagner, K., & Swisher, K. (2017, February 16). Read Mark Zuckerberg’s full 6,000-word letter on Facebook’s global ambitions. Vox. https://www.vox.com/2017/2/16/14640460/mark-zuckerberg-facebook-manifesto-letter