Introduction

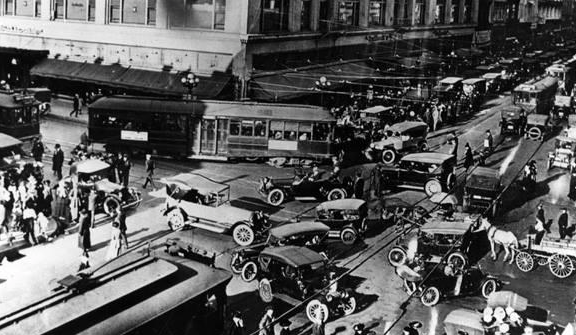

Imagine you are walking through an intersection in the heart of New York, but there are no traffic lights. Pedestrians and vehicles intermingle in a confusing and dangerous way. It may sound a little unbelievable, but we are all experiencing this every day: on the internet.

Different disciplines emphasise different aspects when defining “algorithm”. In computer science, it is defined as “a finite sequence of well-defined instructions, typically used to solve a class of specific problems or to perform a computation” (Merriam-Webster, 2022). The social scientist Gillespie, by contrast, argues that “algorithms are encoded procedures for solving a problem by transforming input data into a desired output” (Gillespie, 2014). Yet both definitions point to what an algorithm essentially represents: rules.

Like traffic lights, algorithms determine how all data on the Internet is classified, prioritised and handled. Yet our digital world today resembles the roads of the early automobile age, when cars were already speeding along but traffic lights had not yet been introduced. As we embrace great new inventions, we are also paying the price for management and governance that lag far behind. Hate speech, filter bubbles and similar problems are all linked to disorderly algorithms. This blog focuses on two major problems with algorithms, opacity and thoughtlessness, and argues that algorithms need to be regulated.

The debate on algorithms

To be honest, my own attitude towards algorithms has changed over time, and this change probably reflects the two main positions on them. At first, I kept an open mind about algorithms. I saw them as nothing more than a new technological tool, good or bad depending on how people used it. It is like fire in the hands of early humans: it can be used for warmth or for destruction.

Algorithms clearly offer great advantages and value in physical domains such as manufacturing, logistics and medicine. I thought that even in the mental realms that algorithms are now entering, such as social media and the art industry, they could go some way towards fostering a more “concrete” or “accurate” understanding of what culture really is.

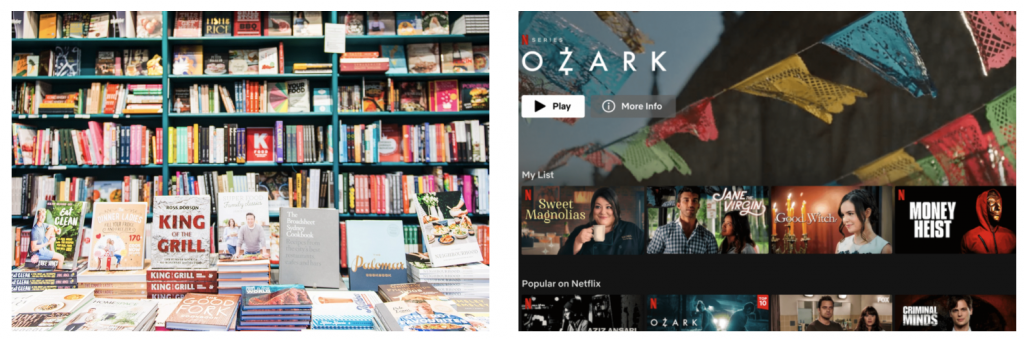

Furthermore, I did not believe that algorithms were especially revolutionary, particularly in the field of culture. Take the algorithmic systems of streaming companies, which have been widely criticised. I asked myself what the essential difference was between the “algorithms” of Netflix and the “audience questionnaires” of traditional TV companies. At their core, both collect information and reviews, analyse them, and ultimately guide the creation and distribution of content. As for recommendation systems, do we really have more “freedom of choice” than on streaming platforms when we walk into a bookshop, pick a book from the “Romance novels” shelf, and are then drawn to another book that the staff have placed next to it? More bluntly, is the logic of a bookshop display really less of a “black box” than an “algorithm”?
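To make the bookshop comparison concrete, here is a minimal sketch of one simple kind of recommender, an item-to-item co-occurrence counter, written in Python. It is an illustration under stated assumptions, not Netflix's actual system: the viewing histories, titles and the function names `build_cooccurrence` and `recommend` are all hypothetical. The rule is simply "suggest what other people watched alongside this title", which is close in spirit to a shop assistant shelving books that tend to be bought together.

```python
from itertools import permutations
from collections import Counter

# Hypothetical viewing histories: each inner list is one user's watched titles.
HISTORIES = [
    ["Pride and Prejudice", "Emma", "Jane Eyre"],
    ["Pride and Prejudice", "Emma"],
    ["Pride and Prejudice", "Jurassic Park"],
    ["Jurassic Park", "Jaws"],
]


def build_cooccurrence(histories):
    """Count how often each ordered pair of titles appears in the same history."""
    pairs = Counter()
    for history in histories:
        # set() ignores repeat viewings within a single history.
        for a, b in permutations(set(history), 2):
            pairs[(a, b)] += 1
    return pairs


def recommend(title, pairs, k=2):
    """Return up to k titles most often watched alongside `title`.

    Ties are broken alphabetically so the output is deterministic.
    """
    scores = {b: n for (a, b), n in pairs.items() if a == title}
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [t for t, _ in ranked[:k]]


pairs = build_cooccurrence(HISTORIES)
print(recommend("Pride and Prejudice", pairs))  # ['Emma', 'Jane Eyre']
```

Nothing in this rule looks at what a film is about; it only counts which titles appear together. Real systems combine many more signals, but the sketch shows why the "shelf placement" analogy is not far-fetched.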

However, as I studied and read during this unit, I found two unavoidable problems with current algorithmic systems: opacity and thoughtlessness. These two issues answer my earlier doubts, and they are the petri dish in which problems such as hate speech and algorithmic discrimination breed. Combined with the fact that algorithms are being applied on an enormous scale with few limits, this scale effect shows how necessary and urgent algorithmic governance is.

The opacity of algorithm

The first criticism of algorithms concerns their opacity. Pasquale (2015) describes algorithms as a black box: everything on the internet is recorded, yet the uses and consequences of this data remain unknown. Burrell (2016) further distinguishes three forms of algorithmic opacity: opacity as intentional corporate or state secrecy; opacity as technical illiteracy; and opacity arising from the way algorithms operate at the scale of application.

Firstly, algorithms are kept opaque because companies need to maintain a competitive advantage. Many internet companies treat their proprietary algorithms as a core technological asset, such as Google’s search accuracy and Facebook’s precision advertising system, and protect them as a form of intellectual property. In addition, because algorithms are also used to combat phishing and spam, making them public could seriously threaten online services, since bad actors could learn how to game them (Sandvig et al., 2014). However, scholars (Pasquale, 2015; Burrell, 2016) sharply point out that this rhetoric may be a cover that lets companies avoid or confuse regulation.

The second form of opacity stems from the specialised nature of the languages in which algorithms are written. Software engineers value simplicity, precision and comprehensibility to machines, because the aim is for machines to execute instructions efficiently. The result is a language very different from human language, which increases opacity (Burrell, 2016). The third form of opacity stems from how algorithms are actually built: an algorithmic system is often the joint product of multiple teams and disciplines. This makes it difficult to understand even for insiders (Sandvig et al., 2014), let alone for outsiders trying to audit the code.

A prime example of algorithmic opacity is the so-called “crash of 2:45” (the 2010 Flash Crash), when US stocks plunged by roughly 9% within minutes around 2:45 pm on 6 May 2010. Even now, there is no conclusive agreement about what caused roughly one trillion dollars in market value to vanish. Some attribute it to market manipulation by a human trader (Brush et al., 2015), some to a single massive trading order (Phillips, 2010), and others to a technical glitch (Flood, 2010). The causes may be mixed and multi-layered, but they are all closely tied to the engine of modern stock trading: algorithms. By then, 70% of stock trading was driven by algorithms, whereas in 2004 all trading had been undertaken by humans (Salmon & Stokes, 2010). Reflecting on the FBI and CFTC investigation, Bloomberg columnist Matt Levine (2015) concluded: “It suggests that existing algorithms are not just dumb enough to give spoofers some of their money, but dumb enough to give spoofers so much of their money that they destabilize the financial markets.”

Similarly, in Noble’s (2018) analysis of how the algorithm behind Google’s search engine reinforces racial discrimination, Google’s strict secrecy about its algorithm meant that Noble could not analyse its code directly to understand how it shapes the emergence and discovery of information on the web; she could only infer its architecture.

Algorithmic processes are inevitably flawed, but their opacity greatly reduces the space and scope for negotiation, as well as for accountability (Alkhatib & Bernstein, 2019; Noble, 2018). Flaws, discrimination and value rankings in algorithmic systems stay hidden inside the black box. Hence, if algorithms are not properly governed and supervised, they are bound to pose further threats to society.

The thoughtlessness of the current algorithms

The power of algorithms is expanding from the physical to the cultural realm, which is attracting more debate. It is easy to quantify a parcel, but is it feasible to quantify a work of art? This is what Kevin Slavin (2011) calls a “physics of culture”.

In 2006, Netflix launched a competition, the Netflix Prize, offering $1 million to the first team or individual that could improve the accuracy of the company’s recommendation system by 10%. The competition attracted 50,000 entrants from 186 countries, as well as coverage from major news outlets. It then became a “living experiment” revealing how algorithms influence culture, and shedding light on “algorithmic culture”.

Algorithmic culture can be provisionally defined as “the use of computational processes to sort, classify, and hierarchize people, places, objects, and ideas, and also the habits of thought, conduct, and expression that arise in relationship to those processes” (Striphas, 2012). This raises a question: can cultural works be rigorously datafied, that is, reduced to numbers? Can five stars on a website really measure a work of art?

In the Netflix Prize, the film Napoleon Dynamite confounded many algorithm developers. Its reviews were extremely polarised: almost every rating was either 5 stars (the best) or 1 star (the worst). One competitor described by Thompson (2008) proposed a solution based on the anchoring effect: a user who has just watched three average movies in a row (say, Pirates of the Caribbean) and then a slightly better one (say, Jurassic Park) is likely to give the last movie a full 5 stars, whereas a user who had just watched terrible movies (say, The Twilight Saga) might give Jurassic Park only 3 or 4 stars. The competitor then roughly built patterns of this kind into the model to improve its accuracy (Thompson, 2008). So is this the algorithm’s answer to such a polarising film? For the programmers, at least, this formula was satisfactory enough to improve their standing in the competition. Today, around 80% of the content watched on Netflix is discovered through algorithmic recommendations (Chong, 2020).
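The anchoring idea can be sketched as a small correction on top of an ordinary rating prediction. The Python below is a minimal illustration, assuming a hypothetical linear adjustment with a made-up weight `ANCHOR_WEIGHT` and a hypothetical function `anchored_prediction`; Thompson (2008) describes the competitor’s approach only in outline, so this is not their actual model. The assumption is that a prediction is pulled towards the user’s most recent ratings: recent ratings above the user’s usual level push it up, recent ratings below it pull it down.

```python
from statistics import mean

# Hypothetical weight: how strongly recent ratings "anchor" the next one.
ANCHOR_WEIGHT = 0.5


def anchored_prediction(baseline, recent_ratings, user_mean, weight=ANCHOR_WEIGHT):
    """Shift a baseline star prediction towards the user's recent ratings.

    baseline: rating predicted without considering viewing order
    recent_ratings: stars the user gave to the films watched just before
    user_mean: the user's long-run average rating
    """
    if not recent_ratings:
        return baseline
    anchor_shift = weight * (mean(recent_ratings) - user_mean)
    # Clamp the result to the 1-5 star scale.
    return min(5.0, max(1.0, baseline + anchor_shift))


# Same film, same baseline, different viewing history.
after_decent = anchored_prediction(4.5, [4, 4, 4], user_mean=3.0)
after_terrible = anchored_prediction(4.5, [1, 1, 1], user_mean=3.0)
print(after_decent, after_terrible)  # 5.0 3.5
```

Here the same film receives a predicted 5 stars after a run of films the user liked and 3.5 stars after a run of films they disliked. The point for the argument above is that the model says nothing about the film itself; it only corrects for the order in which ratings arrive.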

This raises another question: who should decide the rules of algorithms? As noted above, algorithms have made their way into more and more areas of human life, and these areas are extremely varied.

Especially where social issues are concerned, it matters greatly who decides these rules and who bears full responsibility for them (Just & Latzer, 2017). In the days of traditional mass media, the media was known as the fourth estate, the “gatekeeper” of information, staffed by professionally trained journalists (McQuail, 2010). Today, however, the engineers of a handful of technology companies, which have become de facto media giants, use their own disciplinary agendas and assumptions to decide which news is worth disseminating to the wider public (Hallinan & Striphas, 2014). It is rather like asking a couple of talented musicians who graduated from Berklee College of Music to write the rules for traffic lights. (Okay, that doesn’t sound so bad.) But is this what we want for the future of humanity: for algorithm engineers, with data and metrics at their core, to become arbiters of our culture as important as art critics and journalists?

Conclusion

In this blog, I have presented what I consider to be the two most central issues in the debate on algorithms: opacity and thoughtlessness. These two flaws lie at the root of many of the problems that have followed on the Internet. Algorithms are clearly having a significant influence on production and consumption in the cultural sphere, and algorithmic selection has become a crucial and strategic element of the information society (Just & Latzer, 2017). The design and implementation of algorithms therefore need further regulation and oversight, and the companies behind them must be held accountable to the public.

Before the giant truck of algorithms rolls onto the streets of a New York without traffic lights, we must be prepared to respond. But just as traffic laws could not be established by car companies alone, systematic governance of algorithms cannot rely solely on the voluntary efforts of technology companies; it also requires NGOs and the state, which together with companies form what Gorwa (2019) calls the platform governance triangle. It is time to set up traffic lights for algorithms.

References

Alkhatib, A., & Bernstein, M. (2019). Street-level algorithms: A theory at the gaps between policy and decisions. In Proceedings of the 2019 CHI conference on human factors in computing systems (CHI ’19), Glasgow, Scotland, UK, May 2019 (pp. 1–13). Association for Computing Machinery.

Brush, S., Schoenberg, T., & Ring, S. (2015, April 22). How a Mystery Trader with an Algorithm May Have Caused the Flash Crash. Bloomberg News. https://www.bloomberg.com/news/articles/2015-04-22/mystery-trader-armed-with-algorithms-rewrites-flash-crash-story

Burrell, J. (2016). How the machine ‘thinks’: Understanding opacity in machine learning algorithms. Big Data & Society, 3(1), 2053951715622512.

Chong, D. (2020). Deep dive into Netflix’s recommender system. Medium. https://towardsdatascience.com/deep-dive-into-netflixs-recommender-system-341806ae3b48

Flood, J. (2010, August 24). NYSE Confirms Price Reporting Delays That Contributed to the Flash Crash. https://web.archive.org/web/20100827154851/http://assetinternational.com/ai5000/channel/NEWSMAKERS/NYSE_Confirms_Price_Reporting_Delays_That_Contributed_to_the_Flash_Crash.html

Gillespie, T. (2014). The relevance of algorithms. In T. Gillespie, P. J. Boczkowski, & K. A. Foot (Eds.), Media technologies: Essays on communication, materiality, and society (pp. 167–194). The MIT Press.

Gorwa, R. (2019). What is platform governance? Information, Communication & Society, 22(6), 854–871.

Just, N., & Latzer, M. (2017). Governance by algorithms: Reality construction by algorithmic selection on the Internet. Media, Culture & Society, 39(2), 238–258.

Levine, M. (2015, April 22). Guy Trading at Home Caused the Flash Crash. Bloomberg. https://www.bloomberg.com/opinion/articles/2015-04-21/guy-trading-at-home-caused-the-flash-crash

McQuail, D. (2010). McQuail’s mass communication theory. Sage Publications.

Merriam-Webster Dictionary. (2022, April 5). Definition of ALGORITHM. https://www.merriam-webster.com/dictionary/algorithm

Noble, S. U. (2018). Algorithms of oppression. New York University Press.

Pasquale, F. (2015). The Black Box Society: The Secret Algorithms That Control Money and Information. Harvard University Press. http://www.jstor.org/stable/j.ctt13x0hch

Phillips, M. (2010, May 7). Accenture’s Flash Crash: What’s an “Intermarket Sweep Order”. WSJ. https://www.wsj.com/articles/BL-MB-21819

Salmon, F., & Stokes, J. (2010, December 27). Algorithms Take Control of Wall Street. WIRED. https://www.wired.com/2010/12/ff-ai-flashtrading/

Sandvig, C., Hamilton, K., Karahalios, K., & Langbort, C. (2014). Auditing algorithms: Research methods for detecting discrimination on internet platforms. Paper presented at the Annual Meeting of the International Communication Association, Seattle, WA, pp. 1–23.

Slavin, K. (2011). How algorithms shape our world. TED Talks. https://www.ted.com/talks/kevin_slavin_how_algorithms_shape_our_world?language=en

Striphas, T. (2012). What is an Algorithm. Culture Digitally.

Thompson, C. (2008). If you liked this, you’re sure to love that. The New York Times. https://www.nytimes.com/2008/11/23/magazine/23Netflix-t.html