Introduction

With the rapid development of technology, the Internet has become one of the most common and popular communication tools. It provides a low-barrier platform that allows people to express their thoughts and opinions freely, anytime and anywhere. However, because Internet users come from many different backgrounds and cultures, online hate speech seems inevitable. Hate speech attacks, or stirs up violence against, groups of people defined by characteristics such as gender, race, and sexual orientation (Parekh, 2012, as cited in Flew, 2021, p.90). The power of words should not be underestimated: online hate speech can strongly affect the mental health of victims and lead to terrible consequences.

This blog focuses on online hate speech against the LGBTQ community, where LGBTQ refers to lesbian, gay, bisexual, transgender, and queer/questioning. It first discusses the community’s experiences in the current online environment and the consequences of those experiences. It then explores the possible causes of online anti-LGBTQ hate speech and examines a common governance method, content moderation, covering both its strengths and its weaknesses.

The current situation

Overall background

On social media platforms, the volume of speech from LGBTQ people has been increasing in recent years, and many LGBTQ influencers, such as Jeffree Star, have become popular. At the same time, anti-LGBTQ hate speech also occurs frequently on these platforms. GLAAD, a non-profit organisation that works to protect the LGBTQ community, published a social media safety index report based on research into five leading social media platforms: Facebook, Twitter, YouTube, Instagram, and TikTok.

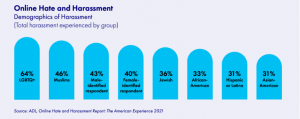

(Figure: ADL, 2021, as cited in GLAAD, 2021)

This report reveals the situation of the LGBTQ community on social media platforms. 64% of LGBTQ people have experienced online hate and harassment, a higher rate than for other identity groups, and Facebook has the highest proportion among the leading social media platforms, at 75% (GLAAD, 2021). In addition, people who post in languages other than English tend to experience more attacks, because content moderation is much better developed for English-language content (Wepukhulu, 2022). LGBTQ people therefore face an uncomfortable environment when using social media apps.

Examples of online hate speech

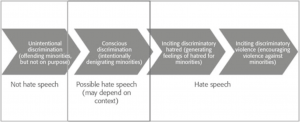

(Figure: Cortese, 2006, as cited in Flew, 2021)

As the figure shows, hate speech forms a continuum with different levels of discriminatory speech. The statements of former Finnish interior minister Paivi Rasanen fall between conscious discrimination and inciting discriminatory hatred. According to BBC News (“Paivi Rasanen”, 2022), Rasanen posted tweets against gay people that quoted Bible passages describing homosexual acts as shameful. She was intentionally denigrating a minority, because she was trying to influence people to discriminate against LGBTQ people. The Bible quotations she used might also incite hatred toward LGBTQ people, since they could resonate with some Christians.

Looking at hate speech directed at LGBTQ people, hate groups frequently use misinformation and disinformation as weapons, through the following tactics outlined by GLAAD (2021):

- Trolling

- Keyword squatting

- Impersonation (e.g. fake profiles)

- Doxing

- Viral sloganeering

- Memes

For example, Family Watch International attacked the LGBTQ community with false claims, such as the claim that LGBTQ people are likely to engage in pedophilia and take drugs (Wepukhulu, 2022). GLAAD’s listing of anti-LGBTQ online hate speech records similar disinformation: hate groups created the false term “LGBTP”, in which the P stands for “pedosexual”, and spread it through viral sloganeering (GLAAD, n.d.).

Parekh (2012, as cited in Flew, 2021) identified the main features of hate speech:

- It is directed against individuals or groups of individuals that are easily identifiable.

- It stigmatises a group by imposing highly undesirable qualities on them.

- It casts the target group as a legitimate object of hostility, so that the group loses society’s trust.

In the example above, hate groups directly imposed the highly undesirable qualities of pedophilia and addiction on the LGBTQ community. Their aim is to stigmatise LGBTQ people so that society turns against them and treats them as a legitimate object of hostility.

Consequences

People unfamiliar with online hate speech might think it does not have serious consequences. Nevertheless, hate speech can have both short-term and long-term effects on mental health: LGBTQ victims may come to fear anyone who might attack them, which makes them want to withdraw from society and social events (Ștefăniță & Buf, 2021).

In addition, public violence is connected with online hate speech, because social media platforms blur the distinction between online and offline communities (Carlson & Frazer, 2018). According to data reported by WABE (Diaz, 2021), at least 44 transgender or gender non-conforming people were shot or killed in violent attacks in 2020. It is hard to say that public violence is a direct consequence of online hate speech, because hate speech itself usually does not incite public violence (Flew, 2021). Still, the two can influence each other.

The causes of online hate speech

Anonymity and high-speed spread

After decades of development, social media platforms now function as social infrastructure, which has expanded the scope within which speech circulates (Flew, 2021). Many people use these platforms to express their opinions, comments, and thoughts. The popularity of social media apps therefore brings a problem with it, because hate, like tolerance, spreads easily through people’s expressions and interactions (Keen & Georgescu, 2014, as cited in Flew, 2021). Social media apps also give online hate speech a low-cost, high-speed means of dissemination, and anonymous posting lets hate groups avoid taking responsibility (Ștefăniță & Buf, 2021).

For example, the hate speech posted by Paivi Rasanen spread globally through Twitter and resulted in charges from Finland’s state prosecutor (“Paivi Rasanen”, 2022). As an international social media platform, Twitter creates an environment in which hate speech can reach many users, and some of that audience will be affected by it and believe its claims. Rasanen’s statements can therefore inspire some readers to post hateful comments of their own, which in turn influence more readers, like a never-ending chain. Although she posted under her real name, many other social media users comment anonymously. In fact, hate speech and anonymity are positively correlated, and a higher proportion of hate speech about sexual orientation is anonymous than of hate speech about other characteristics (Ștefăniță & Buf, 2021).

Algorithms

Through their algorithms, social media platforms build channels that let users gather with people who share the same attitudes, and hate groups use these channels too. For example, the TikTok algorithm became famous for the accuracy of its recommendations, and some users think it can detect detailed personal preferences within a few hours (Smith, 2021). Once a user posts speech against the LGBTQ community, the algorithm is therefore likely to register that interest and recommend more anti-LGBTQ posts. These recommendations help users form hate groups, which results in a toxic culture against the LGBTQ community.

What social media platforms have in common is that they retain users through accurate recommendations. However, this also means that platforms keep users within a single perspective, like an ‘information silo’ (GLAAD, 2021). Algorithms are developed according to the platforms’ own standards, knowledge, and goals, so bias or discrimination against LGBTQ people inevitably finds its way into them. The culture, politics, and design of platforms together form a toxic culture, which encourages hate groups to stay focused on that culture and implicitly discourages them from thinking objectively (Massanari, 2017). Hence, the single perspective that platforms create isolates hate groups from the truth, which leads them to spread hate speech based on false beliefs.

Content moderation

Content moderation is a common form of governance adopted by leading social media platforms (Roberts, 2019). It has helped reduce online hate speech against LGBTQ people, but it still has shortcomings in practice. For social media platforms, content moderation refers to commercial content moderation, which is the organised practice of reviewing posts either before they are published or after they are uploaded (Roberts, 2019). However, content moderation depends heavily on each platform’s policies and standards. For instance, the House of Commons Home Affairs Committee (2017) found that YouTube did not remove hateful material as quickly as it removed material that infringed copyright. Therefore, although content moderation is an appropriate tool for tackling online hate speech against LGBTQ people, implementing it on platforms is complicated.

Content moderation is implemented in two ways: through AI technologies and through human content moderators. AI moderation can filter problematic content automatically, but it is limited because it can only recognise material that is already in its database (Roberts, 2019). AI content moderation can therefore only identify hate speech that has been collected before, and it struggles to analyse new misinformation or obscured hate speech. In addition, the volume of user-generated content on leading social media platforms is huge, and even algorithms cannot keep up with the rapidly growing number of posts (Roberts, 2019).

Moreover, the material collected in the database may end up being applied to LGBTQ people’s own speech instead of accurately removing hate speech. As Lux and Mess (2019, as cited in Dias Oliva et al., 2020) found, Facebook restricted the use of the words “dyke” (lesbian), “fag” (gay), and “tranny” (transgender) because these words had been mistakenly collected by the algorithm, and this restriction limited the self-expression of LGBTQ people. Machine-automated content moderation is therefore not yet well developed, and it can even work against the LGBTQ community. As a result, most platforms hire content moderators to review hate speech flagged by users (Roberts, 2019), which means hate speech stays on social media platforms for longer because human moderators need more time.

Another problem is that content moderators struggle to review every post carefully, and they each have their own understanding of hate speech, which can let some hate speech through. If a content moderator is not LGBTQ and is unfamiliar with LGBTQ culture, moderation decisions may be made inappropriately. To address this problem, GLAAD (2021) recommends in its report that social media platforms hire more LGBTQ content moderators and train all content moderators on the needs of LGBTQ people. Although content moderation can restrain online hate speech, it still needs improvement to close these gaps in governance.

Conclusion

Online hate speech against LGBTQ people is an important issue for social media platforms, since 64% of LGBTQ people have experienced online hate and harassment. Hate groups attack the LGBTQ community mainly through trolling, keyword squatting, impersonation, doxing, viral sloganeering, and memes. They also spread misinformation and disinformation to stigmatise LGBTQ people and turn public attitudes against them. Online hate speech can easily harm the mental health of LGBTQ people, who may even become afraid of society and social events. Online hate speech can therefore directly or indirectly affect public acceptance of LGBTQ identities.

Moreover, the formation of online hate speech is linked to social media platforms themselves. Platforms make it possible to comment anonymously, and anonymity is positively correlated with the amount of hate speech about sexual orientation. Through algorithms, hate groups can also reach more like-minded users and gain wider coverage on social media platforms. Besides helping hate groups form, algorithms confine users to a single perspective, which leaves anti-LGBTQ users sunk in false beliefs. Social media platforms should take responsibility for this, because algorithms are designed according to their rules, and their content moderation cannot easily eliminate all of the negative results. Machine-automated content moderation falls short in processing complicated hate speech among millions of new posts, and content moderators do not always sufficiently understand the needs of the LGBTQ community. Therefore, improving AI technologies for content moderation, hiring more LGBTQ moderators, and training all content moderators in LGBTQ culture would help address online hate speech against LGBTQ people in future.

References

Carlson, B. & Frazer, R. (2018). Social Media Mob: Being Indigenous Online, Macquarie University, Sydney.

Diaz, J. (2021, May 10). Social Media Hate Speech, Harassment ‘Significant Problem’ For LGBTQ Users: Report. WABE. Retrieved from https://www.wabe.org/social-media-hate-speech-harassment-significant-problem-for-lgbtq-users-report/

Dias Oliva, T., Antonialli, D. M., & Gomes, A. (2020). Fighting Hate Speech, Silencing Drag Queens? Artificial Intelligence in Content Moderation and Risks to LGBTQ Voices Online. Sexuality & Culture, 25(2), 705. https://doi.org/10.1007/s12119-020-09790-w

Flew, T. (2021). Issue of Concern. Regulating Platforms (pp. 91-93). Cambridge: Polity.

GLAAD. (n.d.). Listing of Anti-LGBTQ Online Hate Speech. Retrieved April 7, 2022, from https://www.glaad.org/hate-speech-listing

GLAAD. (2021). Social Media Safety Index. Retrieved from https://www.glaad.org/sites/default/files/images/2021-05/GLAAD%20SOCIAL%20MEDIA%20SAFETY%20INDEX_0.pdf

House of Commons Home Affairs Committee. (2017). Hate Crime: Abuse, Hate and Extremism Online (No. HC609). London: House of Commons.

Massanari, A. (2017). #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 336–337. https://doi.org/10.1177/1461444815608807

Paivi Rasanen: Finnish MP in Bible hate speech trial. (2022, January 24). BBC News. Retrieved from https://www.bbc.com/news/world-europe-60111140

Roberts, S. (2019). Understanding Commercial Content Moderation. Behind the Screen: Content Moderation in the Shadows of Social Media (pp. 33-35). New Haven: Yale University Press. https://doi.org/10.12987/9780300245318

Smith, B. (2021, December 5). How TikTok Reads Your Mind. The New York Times. Retrieved from https://www.nytimes.com/2021/12/05/business/media/tiktok-algorithm.html

Ștefăniță, O., & Buf, D.-M. (2021). Hate Speech in Social Media and Its Effects on the LGBT Community: A Review of the Current Research. Revista Română de Comunicare Şi Relaţii Publice, 23(1), 49–51. https://doi.org/10.21018/rjcpr.2021.1.322

Wepukhulu, K. S. (2022, January 18). Google, Facebook and Amazon turn blind eye to anti-gay disinformation. openDemocracy. Retrieved from https://www.opendemocracy.net/en/5050/google-facebook-amazon-turn-blind-eye-anti-gay-disinformation/