Introduction

Leaks of user information have become common in the current big data era. As a representative case of user information disclosure, the “Facebook data leak” incident had serious consequences and attracted extensive international attention. Since the Facebook scandal, people have worried about whether their privacy will be stolen online, yet many still do not understand what was actually at stake in the incident. For example, they do not know what privacy means or covers, what privacy transactions are (monetized data analysis services), or what the consequences of data leakage are (predicting and shaping behavior). Connecting the Facebook leak to these gaps in public understanding makes it easier to think deeply about privacy and data protection.

“Facebook data leak” incident

On March 17, 2018, the Guardian and the New York Times jointly published an in-depth report revealing that Cambridge Analytica had improperly accessed the data of more than 50 million Facebook users. The company used the data to target ads during the 2016 U.S. presidential election in an attempt to influence the outcome.

(Source: https://www.horizont.net/tech/nachrichten/Cambridge-Analytica-Chef-Nix-Facebook-Likes-spielen-in-unserem-Modell-keine-Rolle-144847)

In a message to the Washington Post on March 17, Facebook said, “We reject any allegations that we have violated the settlement order. We properly respect people’s right to privacy. Privacy and data protection are the basis of every decision we make.” In its official statement, Facebook insisted that Aleksandr Kogan, a University of Cambridge researcher and app developer, had misused user data.

In July 2019, Facebook reached a settlement with the Federal Trade Commission (FTC), which imposed a $5 billion fine over the 2018 Cambridge Analytica privacy scandal. The sky-high fine made the “Facebook data leak” incident particularly noticeable. Ironically, Facebook’s share price rose 1.81% after the news that it had accepted the fine, and the company’s market value increased by $10.4 billion. The gain in market value far exceeded the amount of the fine.

On October 18, 2019, the brand consultancy Interbrand released its “2019 global best brand report,” in which Facebook fell out of the top 10 (Interbrand, 2019). In a subsequent interview, Daniel Binns, CEO of Interbrand’s New York office, pointed to Facebook’s weak user privacy and security measures and said this had dramatically affected consumers’ choices.

The distance between privacy and us

(Source: https://mediaindia.eu/society/right-to-privacy-in-a-modern-democracy/)

The right to privacy can be understood as the right of individuals to control factual information about themselves and to decide whether their personal data is known to others. It is a fundamental human right, also called “the right to be let alone.”

The right to privacy has been taken to be an inherent human right, albeit one that is qualified in practice by other competing rights, duties, and norms. (Flew, 2021)

We can think of privacy as information protection in the context of information exchange. Without exchange, privacy is meaningless. In any exchange of information, whatever its form or content, the sender has the opportunity to weigh whether it contains private elements. If data has no possibility of flowing out through any channel, it can hardly be called private, because it will never spread at all.

Research on privacy can start from two aspects: personal identity information and non-personal identity information. In short, personal identity information requires the user’s approval before a network platform can use it, whereas non-personal identity information is often used without such approval. For example, when you search for “running app” in the Google search engine, you can easily find relevant and accurate app recommendations on the right side of the page, even though you are not logged in to a Google account. The search algorithm benefits Internet companies: search engines keep narrowing the scope of their recommendations based on users’ search terms and clicks. Such precise recommendations involve privacy transactions.

(Source: https://www.google.com/search?q=running+app&oq=running+app&aqs=chrome..69i57.8309j0j15&sourceid=chrome&ie=UTF-8)

Unsatisfactory privacy rights transactions in the online world

(Source: https://referandearnapps.com/what-are-the-3-best-social-media-platforms-for-digital-marketing/)

When people browse websites, use apps, and shop online, they tend to agree reflexively to the disclaimers or behavior-tracking consent notices that pop up in the middle of the screen. Few people carefully read those lengthy legal provisions and platform terms, because if users disagree, these Internet companies often prevent them from using the relevant products or services.

Users are required to provide personal information as a condition of obtaining online products and services, and content aggregators such as search engines and social media platforms are able to resell these data to third parties. In Facebook’s case, users must register with an email address bound to their account. This part of the leaked information was obtained by Cambridge Analytica, even though Facebook has refused to admit that it sold the data. Capital pursues profit: setting ethical and legal issues aside, it is hard to imagine a profit-oriented company sharing data free of charge.

The problems include the information asymmetry between service providers and consumers, the binding nature of consent clauses, and the blurring of the lines between personal information and information “identified” through algorithms, customer profiles, and online advertising (Australian Competition and Consumer Commission, 2018).

These information asymmetries make the platform or website the owner of the data set. The data set owner can easily monetize it through algorithms and sell services to advertisers and third parties. Vast data sets enable Internet companies to predict the behavior of individuals and groups.

(Source: https://m.weibo.cn/6339103442/4755752814646289)

Facebook is not alone in using data to predict behavior; so does Weibo, a well-known Chinese social media platform. There, advertisers can purchase data sets based on “keyword search” for quantitative analysis in an attempt to capture users’ behavior. A cosmetics company, for example, can find information about potential customers by buying data on searches for the keyword “whitening essence.”

By analyzing the gender and age group of the users who searched, the company can determine the core target population for its product publicity and work out a pricing strategy. By analyzing users’ regional information, it can decide where to focus its publicity and even gather information on competing products at different price points. It can also analyze the types of content users like, forward, and save. The users being analyzed do not know any of this is happening. Importantly, everything that appears on a user’s search page may have been purposefully placed and displayed.
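The kind of segmentation described above can be illustrated with a toy example. The sketch below is a minimal Python illustration, assuming a purchased data set that has one row per searcher with gender, age, and region fields; the rows, field names, and the helpers `age_band`, `core_segment`, and `regional_focus` are all hypothetical, and nothing here reflects Weibo’s actual data format or tools.

```python
from collections import Counter

# Hypothetical rows from a purchased "keyword search" data set: one row per
# user who searched the keyword. Field names and values are invented.
search_users = [
    {"gender": "F", "age": 24, "region": "Shanghai"},
    {"gender": "F", "age": 27, "region": "Beijing"},
    {"gender": "F", "age": 31, "region": "Shanghai"},
    {"gender": "M", "age": 22, "region": "Guangzhou"},
    {"gender": "F", "age": 26, "region": "Shanghai"},
]


def age_band(age: int) -> str:
    """Bucket an exact age into a ten-year band such as '20-29'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


def core_segment(users):
    """Return the most common (gender, age band) pair and its count."""
    counts = Counter((u["gender"], age_band(u["age"])) for u in users)
    return counts.most_common(1)[0]


def regional_focus(users):
    """Rank regions by how many searchers they contain (ties keep first-seen order)."""
    return Counter(u["region"] for u in users).most_common()


print(core_segment(search_users))    # (('F', '20-29'), 3)
print(regional_focus(search_users))  # [('Shanghai', 3), ('Beijing', 1), ('Guangzhou', 1)]
```

Even this crude counting turns individual searches into a profile of a “core target population” and a regional publicity focus, without any of the searchers being aware of it.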

The dilemma exposed by the Facebook incident

Technology has always moved faster than laws and regulations. The growth of Internet capital has outpaced the growth of public awareness and law (Zuboff, 2015). When the surveillance systems run by digital platform companies lack industry self-discipline, harmful consequences are almost inevitable.

1. The dilemma of a corporate reputation questioned by the public

The Facebook data leak incident not only cost Facebook heavy fines but also cost it the public’s trust. Many users said they were disappointed with Facebook and would consider using the platform less or not at all. Facebook is losing users’ “hearts.” As a result, Facebook’s market value dropped by $36 billion: its share price fell 7% on Monday (March 19, 2018), fell more than 6% on Tuesday, and eventually closed down 2.3%. Social media has been called the standard-bearer of Trump’s path to the White House, and the decline of individual stocks such as Facebook weighed on the stock market at the time.

2. The dilemma of user behavior being shaped by monitoring

After the Facebook incident, users constantly worry about whether their information is safe and whether their behavior is being influenced by purposefully placed information. As Cambridge Analytica boasted, “it can develop the psychological characteristics of consumers and voters, and then use this ‘secret weapon’ to influence the wishes of consumers and voters, which is more effective than traditional advertising.”

(Source: https://ritholtz.com/2016/05/10-cognitive-biases-that-affect-your-everyday-decisions)

Behavioral data is translated into “machine intelligence” and fabricated into prediction products, which are then used to tune, coax, and herd crowd behavior toward economic goals. Machines come to shape human behavior, from what we know to what we will do in the future (Zuboff, 2019). Repeated exposure to the same information does affect people’s thoughts and behaviors.

3. The dilemma of threats to users’ personal safety and property

The disclosure of users’ personal information can threaten their personal safety and property. More and more citizens’ personal information has become a target for criminals, who use it for everything from selling it directly for illegal profit to committing crimes such as telecommunications fraud, illegal debt collection, and even kidnapping and extortion. Criminals collect leaked private information through various channels, screen and analyze user characteristics, and commit precisely targeted crimes.

4. The political dilemma and the credibility of the Internet

When a data analysis report can help the capital class select a national political leader, the disclosure of Facebook customer information has evolved into a deep-seated problem. Through the twin forces of big data and cloud computing, people have witnessed the extraordinary spectacle of capital rising above and manipulating politics. The public, the soil in which both capital and politics take root, is deeply disappointed by this trend of capital manipulating political development. At the same time, it undermines people’s sense of agency in political participation, pitting freedom against control. The root of the pain is that no one can be completely independent of the Internet and politics.

However, precisely because the impact of the Facebook customer information disclosure was so severe, it attracted significant public and government attention and created pressure for change. This pressure has pushed Internet companies to build effective mechanisms to protect users’ privacy and to strengthen industry self-discipline, prompted countries to improve Internet privacy legislation and establish complete and effective regulatory mechanisms, and led individuals to become more aware of protecting their information online.

Understanding of privacy protection

(Source: https://www.childcareeindhoven.nl/en/privacy-statement)

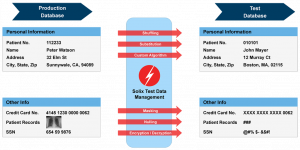

In data science, privacy protection has two main aspects: privacy protection in the narrow sense, and privacy data masking. The former concerns how users handle privacy in daily life. For example, private content can be encrypted so that communications cannot be read, or access channels can be controlled with keys and similar encryption settings so that unauthorized people cannot obtain the information. The latter protects privacy by transforming data in a distorting, irreversible way so that the masked information cannot be associated with the data subject: deleting part of the information, replacing identifiers with pseudonyms, adding noise, splitting private data, or padding it with additional content. Compared with more familiar methods such as setting passwords, dynamic privacy data masking is the core of privacy protection. By dynamically processing information so that it cannot be associated with the data subject, privacy can be protected to a greater extent and the right to “own one’s privacy” can be guaranteed. For example, we could use TikTok to browse short videos while its owner, ByteDance, would be unable to monitor our preferences and behavior.

Of course, this is ideal.
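Three of the masking techniques mentioned in the paragraph on data science (deleting part of the information, pseudonymization, and adding noise) can be sketched on a single user record. This is a minimal Python illustration under stated assumptions: the record fields, the secret key, the noise scale, and the helpers `pseudonymize`, `laplace_noise`, and `mask_record` are all hypothetical. The noise step is a simple perturbation, not a calibrated differential-privacy mechanism, and the sketch masks a record once rather than modeling dynamic, access-time masking.

```python
import hashlib
import hmac
import random

# Hypothetical secret key held by the data owner; in practice it would be
# stored securely and never released alongside the masked data.
SECRET_KEY = b"example-secret-key"


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256), truncated to 16 hex chars.

    The same input always maps to the same pseudonym, so masked records can
    still be linked to one another, but the pseudonym cannot be recomputed
    from the identifier without the key.
    """
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def mask_record(record: dict, rng: random.Random, noise_scale: float = 5.0) -> dict:
    """Apply three masking steps to one hypothetical user record."""
    return {
        # Pseudonymization: the email address becomes an opaque token.
        "user": pseudonymize(record["email"]),
        # Deletion: the exact city is dropped; only the broader region is kept.
        "region": record["region"],
        # Noise addition: daily viewing minutes are randomly perturbed.
        "minutes_per_day": round(
            record["minutes_per_day"] + laplace_noise(noise_scale, rng), 1
        ),
    }


rng = random.Random(42)
raw = {"email": "alice@example.com", "city": "Sydney", "region": "NSW", "minutes_per_day": 95}
print(mask_record(raw, rng))
```

Because the pseudonym is a keyed hash, the same email always yields the same token, so masked records can be joined without exposing the address. Whoever holds the key, however, can still test guesses against the tokens, which is one reason masking reduces privacy risk rather than eliminating it.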

(Source: https://www.solix.com/data-management-solutions/data-masking)

Finally, it is worth asking whether the problem of personal information on the Internet remains unsolved because we have not yet established a convincing, low-cost standard that courts can use to require companies to comply, such as consistent quantitative indicators and automatic protocol mapping across platforms and ecosystems. A related question is how to handle data masking when data is shared in the era of big data: for example, whether masked data from different platforms (such as Google and Tencent) can still be matched after conversion under the same metrics (such as user usage time).

Some privacy remains after data masking, though less of it. When part of the information is released to a recipient, that does not give the recipient the right to pass it on without control.

Conclusion

A leak caused by technical loopholes reveals not only surface-level problems but also the difficulties of dynamic supervision, of self-discipline across the entire Internet industry, and of protecting personal information. As the Facebook incident shows, the fundamental purpose of privacy protection is to allow data to be shared in a controlled state under data masking. With industry regulations recognized by Internet companies, users, and regulatory authorities, and backed by the authority of law, personal privacy can be protected more properly and efficiently.

References

Australian Competition and Consumer Commission. (2018). Digital platforms inquiry: Preliminary report. Australian Competition and Consumer Commission. https://www.accc.gov.au/system/files/Digital%20platforms%20inquiry.pdf

Flew, T. (2021). Regulating Platforms. Polity Press. https://www.booktopia.com.au/regulating-platforms-terry-flew/ebook/9781509537099.html

Goggin. (2017). Digital Rights in Australia. The University of Sydney. https://ses.library.usyd.edu.au/handle/2123/17587

Interbrand. (2019). Best Global Brands 2019: Iconic Moves. Interbrand. https://interbrand.com/thinking/best-global-brands-2019-download/

Nissenbaum, H. (2009). Privacy in context: Technology, policy, and the integrity of social life. Stanford University Press.

Zuboff, S. (2015). Big other: Surveillance capitalism and the prospects of an information civilization. Journal of Information Technology, 30(1), 75–89.

Zuboff, S. (2019). The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. PublicAffairs.