Introduction

Do you often feel that today’s online environment is very different from that of the past? The information age has seen constant change in the form of internet platforms. The amount of information that people can access online has skyrocketed, from a scarcity of resources in the past to information overload today. On the one hand, people have access to a huge variety of information, and the amount grows every day because everyone can post as much as they like. On the other hand, it has become more difficult than ever to find useful and valuable information within this vast supply.

In this context, the rise of algorithms has significantly changed the rules of the Internet. An algorithm is a process or set of rules to be followed in calculations or other problem-solving operations; in other words, algorithms are problem-solving mechanisms. Algorithms can be divided into many types according to their function. On online platforms they are widely used as information recommendation mechanisms that tailor content to each individual, replacing human selection (Just, 2016). So what is the impact of algorithmic decision-making on the Internet? Are algorithms better than people?

Algorithms are more efficient than humans

Recommendation algorithms help each user find the information that suits them best among the large amount of information on the Internet. They generally predict what each user is likely to enjoy from a sample of that user’s information and behaviour. The well-known Chinese news app Today’s Headlines announced in 2018 how its algorithm works: it tags users’ interests according to the content they have clicked on, and tailors content to those interests by combining their occupation, age, gender and environmental characteristics (People.cn, 2018). Computer-based algorithms can find the information a user needs from a large amount of data according to each individual’s needs. Such a workload is difficult for human beings to handle, and humans cannot match the precision of a machine.
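The tagging idea described above can be illustrated with a small sketch. This is not the actual Today’s Headlines system: the function names, the data layout, the `attr_bonus` weight and the sample articles are all hypothetical, chosen only to show how click history and user attributes might be combined into a ranking.

```python
from collections import Counter


def build_interest_profile(clicked_tags):
    """Turn a click history (one list of tags per clicked item) into tag weights that sum to 1."""
    counts = Counter(tag for tags in clicked_tags for tag in tags)
    total = sum(counts.values())
    return {tag: n / total for tag, n in counts.items()} if total else {}


def score_article(article, profile, user_attrs, attr_bonus=0.1):
    """Interest weight of the article's tags, plus a small bonus per matching user attribute.

    attr_bonus is an arbitrary illustrative weight, not a published value.
    """
    interest = sum(profile.get(tag, 0.0) for tag in article["tags"])
    matches = sum(
        1
        for key, value in article.get("audience", {}).items()
        if user_attrs.get(key) == value
    )
    return interest + attr_bonus * matches


def recommend(articles, profile, user_attrs, k=2):
    """Return the titles of the k highest-scoring articles."""
    ranked = sorted(
        articles,
        key=lambda a: score_article(a, profile, user_attrs),
        reverse=True,
    )
    return [a["title"] for a in ranked[:k]]


# Hypothetical user: three clicks, plus one demographic attribute.
clicks = [["sport", "football"], ["sport"], ["tech"]]
user = {"age_group": "18-24"}
articles = [
    {"title": "Local election results", "tags": ["politics"]},
    {"title": "New phone review", "tags": ["tech"], "audience": {"age_group": "18-24"}},
    {"title": "Cup final preview", "tags": ["sport", "football"]},
]

profile = build_interest_profile(clicks)
print(recommend(articles, profile, user))
```

In this toy example the politics article is never surfaced, because the user has never clicked on anything tagged as politics. That is the same mechanism the later sections discuss as a risk.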

So how efficient are recommendation algorithms? Major technology companies continue to improve their algorithms, which has significantly increased the efficiency of their recommendations, and for many companies recommendation algorithms are even the core technology that allows them to make a profit. One example is the Netflix Recommendation Engine (NRE), one of the most successful algorithms in the online market, which filters content based on each user’s profile. The engine uses 1,300 recommendation clusters to filter over 3,000 titles at a time based on user preferences. Because of the NRE’s high accuracy, 80% of Netflix viewer activity is driven by the engine’s personalised recommendations. It is estimated that the NRE saves Netflix over $1 billion per year (Springboard India, 2019).

Figure 1: Netflix’s NRE saves the company over $1 billion per year.

This shows that algorithmic decision-making has started to play an important role on web platforms. It has become one of the most effective means of dealing with the huge amount of data in modern society, and it has made a significant contribution to the development of the Internet.

Are we trapped in an algorithm?

Algorithms can bring people more customised options. However, there are two sides to everything, and we are at risk of being limited by technology. As algorithms are further optimised, they become a ‘tool’ that some apps use to cater to users’ preferences and increase their stickiness, constantly guessing and recommending content that matches each user’s position and preferences. When people are habitually guided by their own interests, it is easy to fall into an “information cocoon”. Sunstein (2006) pointed out that, because the public’s information needs are not all-encompassing, people pay attention only to what they choose and to the areas of communication that please them, and over time they shackle themselves in a ‘cocoon’ like that of a silkworm.

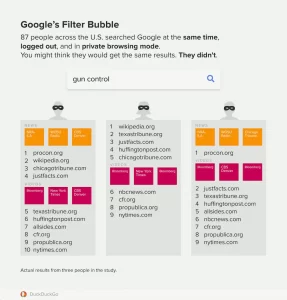

This view is even more evident in today’s algorithmic recommendations. Technology companies such as Facebook and Google rely on algorithmic recommendations to capture and provide the information users want to see in their feeds and search results. As a result, users often cannot take in a diverse range of information, and the content they end up being exposed to becomes more extreme. Eli Pariser (2011) refers to this phenomenon, in which algorithms restrict users’ access to information, as a filter bubble.

Figure 2: Google’s Filter bubble example

How much do filter bubbles affect people? Although the idea of filter bubbles has been on the radar for a long time, the size of their impact has not been proven. The most popular arguments about filter bubbles concern political news, and the most typical examples are of people being led to extreme ideas by filter bubbles during the US elections and the UK’s exit from the EU. In areas related to political news, algorithms seem to be more likely to lead people to extremes (Fs, n.d.).

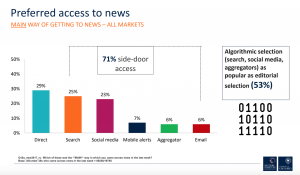

However, according to research by Dr Richard Fletcher (Fletcher, 2020), the effect of filter bubbles on the diversity of information sources is still debatable. The research found that the more people used direct news sources, the less diverse their news consumption became. Social media, on the other hand, exposes people to a wider range of news sources, which increases the diversity of their news consumption.

Figure 3: Nearly 71% of people do not get information directly from the news

Filter bubbles have a significantly smaller impact on non-political information. So even though filter bubbles appear to affect people’s information selection, the reasons behind them are still worth analysing. Is it true that political news relies entirely on algorithmic recommendations? It is important to understand that algorithms are still machines that people control, and that people can shift the blame for any human action onto the algorithm. One difficulty here is that most companies’ algorithmic mechanisms are not open and transparent, and companies can manually change an algorithm to suit their own purposes, so regulating algorithms will be a huge challenge.

Our online culture is already being challenged

While the impact of filter bubbles on the diversity of information recommendations is debatable, their impact on forms of online culture is clear. TikTok, one of the most popular online platforms of the moment, has had a remarkable impact on online culture. The platform, which mainly uses short videos to disseminate information, is spreading virally around the world, and other companies are increasingly copying its algorithmic recommendation mechanism for short videos. However, because algorithmic recommendations evolve according to users’ interests, a peculiar cultural trend is becoming increasingly apparent.

According to Gavin Allen, a lecturer in digital journalism at Cardiff University, many formal news sites struggle to fit in on TikTok, where users care less about the content of the news itself and more about whether the presentation is funny and silly. What characterises the influence of algorithmic recommendation mechanisms and filter bubbles on popular culture? Chen Lijuan (Chen, 2021) conducted a sampling experiment on TikTok based on popular recommendations. After analysing the characteristics of the content, she found that content on TikTok tends to become increasingly homogenised and entertainment-oriented under the influence of filter bubbles. The majority of the content is characterised by intimacy, gags, slurs and vulgarity.

Figure 4: Indian media report on students influenced by TikTok to do illegal things

Because of the filter bubble and information cocoon mechanisms, cultural content is becoming more and more homogeneous. Cheesy and funny videos seem to be more in line with what the public wants; the algorithms detect this and increasingly direct resources to these areas. As a result, our online culture is becoming more cheesy, more homogeneous and less diverse.
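The feedback loop described here can be made concrete with a toy simulation. It is a deliberately simplified sketch, not a model of any real platform: the category names, the engagement rates and the rule that exposure is reallocated in proportion to clicks are all assumptions made for illustration.

```python
import math


def simulate_feedback(exposure, engagement, rounds):
    """Each round, reallocate exposure in proportion to the clicks each category earned.

    exposure: dict of category -> share of recommendations (sums to 1).
    engagement: dict of category -> assumed click rate per unit of exposure.
    Returns the list of exposure dicts, starting with the initial one.
    """
    history = [dict(exposure)]
    for _ in range(rounds):
        clicks = {c: exposure[c] * engagement[c] for c in exposure}
        total = sum(clicks.values())
        exposure = {c: clicks[c] / total for c in clicks}
        history.append(exposure)
    return history


def entropy(shares):
    """Shannon entropy in bits; lower values mean less diverse content."""
    return -sum(p * math.log2(p) for p in shares.values() if p > 0)


# Hypothetical starting point: four categories shown equally often.
start = {"funny": 0.25, "news": 0.25, "education": 0.25, "art": 0.25}
# Hypothetical click rates: funny clips are only slightly more clickable.
rates = {"funny": 0.30, "news": 0.20, "education": 0.15, "art": 0.10}

history = simulate_feedback(start, rates, rounds=10)
for step in (0, 5, 10):
    shares = history[step]
    print(step, round(shares["funny"], 3), round(entropy(shares), 3))
```

Under these assumptions, a modest difference in click rates is enough for one category to crowd out the rest after a few rounds, and the entropy of the feed falls accordingly. Real platforms add exploration and editorial rules, so the sketch shows only the direction of the effect.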

How should we view the impact of algorithms?

In general, algorithmic decision-making is a natural outcome of our technological development. From the day the Internet became globally accessible, we were destined to face this challenge: how to process large amounts of data and information efficiently and quickly. Algorithms have indeed contributed a great deal to the technological progress of mankind, but it is clear that technology can also work against us. Our society is very complex, and we cannot predict whether the impact of algorithms will lead us to a better society or a worse one. So how should we think about algorithmic decision-making?

Firstly, it is important to ensure that algorithms are used in the right ways and not used to do unethical things for profit. As mentioned earlier, an algorithm is only a tool, and how we use it remains in our hands. Even in the absence of direct evidence that filter bubbles are currently harmful to the population, we should create oversight over their use rather than allowing them to become an impenetrable black box. We should ensure that those who use algorithms do not use them for their own political gain or to steer public opinion, and that they do not invade user privacy. We need more discipline regarding the use of algorithms.

Secondly, we should not rely solely on algorithms: the more a platform relies on algorithmic decision-making, the more likely it is that its audience will fall into a filter bubble. For example, there is still a big difference between TikTok users and users of the Google search engine. On influential platforms, both algorithmic decision-making and user decision-making should be present. Users should have the right to choose whether to view information recommended by an algorithm or information on a public platform.

Figure 5: Cartoon of a filter bubble, from The Paper

Finally, this article advocates that technology companies take on their social responsibility. It is precisely because algorithmic decisions have such an impact on society and culture that companies should take care in using this powerful tool. Algorithms can bring huge profits to companies, and the carnival of commerce can either give the greatest encouragement to quality content or become an “amplifier” or “accelerator” for bad behaviour. The infusion of huge commercial capital makes it difficult for some users to resist the lure of money, and they continue to engage in spoofing and vulgar hype with no bottom line for the sake of gaining attention. Therefore, the commercial forces behind the platforms need to shift from production to leadership, guiding users to reduce the production of cheap, lowbrow content and encouraging quality content producers to continue to create. This is because their creations will directly shape the values of young people, the social climate and our culture.

Reference List

Sunstein, C. R. (2006). Infotopia: How Many Minds Produce Knowledge. Oxford University Press.

Chen, L. (2021, March). Interpreting the communication themes and presentation qualities of Jitterbug short videos. Jiangsu Journalists Association. http://www.jssjx.com.cn/cmdg/202103/t20210322_2750716.shtml

Duckduckgo. (2018). Google’s filter bubbles. https://www.wired.com/story/study-revives-debate-about-googles-filter-bubbles/

Fletcher, R. (2020). The truth behind filter bubbles: Bursting some myths. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/news/truth-behind-filter-bubbles-bursting-some-myths

Fs. (n.d.). How Filter Bubbles Distort Reality: Everything You Need to Know. https://fs.blog/filter-bubbles/

Just, N., & Latzer, M. (2016). Governance by algorithms: Reality construction by algorithmic selection on the Internet. Media, Culture & Society.

Pariser, E. (n.d.). The Filter Bubble. Retrieved April 7, 2022, from https://books.google.com.sg/books/about/The_Filter_Bubble.html?id=-FWO0puw3nYC&redir_esc=y

Pasquale, F. (2015). The Black Box Society: The Secret Algorithms That Control Money and Information. https://www-jstor-org.ezproxy.library.sydney.edu.au/stable/j.ctt13x0hch

People.cn. (2018, January 16). Today’s headlines officially disclose the principle of recommendation algorithm to the public. http://it.people.com.cn/n1/2018/0116/c196085-29768256.html

Picture from Do filter bubbles really exist? Here’s a study that defies common sense. (n.d.). Retrieved April 7, 2022, from https://www.thepaper.cn/newsDetail_forward_8579080

Pictures of Indian students attacking local residents for a shake-down. (n.d.). Retrieved April 7, 2022, from https://www.fromgeek.com/vendor/254439.html

Preferred access to news. (n.d.). Retrieved April 7, 2022, from https://reutersinstitute.politics.ox.ac.uk/news/truth-behind-filter-bubbles-bursting-some-myths

Springboard India. (2019a, November 5). How Netflix’s Recommendation Engine Works? – Springboard India. Medium. https://medium.com/@springboard_ind/how-netflixs-recommendation-engine-works-bd1ee381bf81

Springboard India. (2019b). Netflix picture. https://medium.com/@springboard_ind/how-netflixs-recommendation-engine-works-bd1ee381bf81