Online hate speech: victim blaming in the Tinder Swindler event

Yufei Lu

UniKey: yulu6025

Student ID: 520053245

Introduction: the instance and the definition

Using the Tinder Swindler event as an example, this blog discusses what online hate speech is, the harms it causes, and the approaches we can take to limit its negative effects.

The Tinder Swindler event and online victim blaming

Netflix’s new documentary The Tinder Swindler reports an Internet romance fraud case. A fraudster called Simon Leviev displayed an extravagant lifestyle on the dating app Tinder (The Tinder Swindler, n.d.). After building relationships with female users he matched with on the app, he pretended to be under threat from business competitors and asked these users for money, which he used to maintain his illusion of luxury (The Tinder Swindler, n.d.).

Fig. 1. The poster of this Netflix documentary (The Tinder Swindler, n.d.)

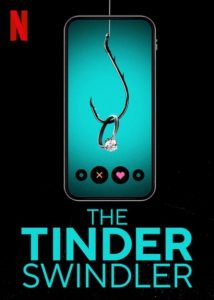

In this documentary, victims Cecilie and Pernilla described the online blaming they experienced after the media revealed the event, including insults such as “gold digger” (The Tinder Swindler, n.d.). After the documentary was released, victims were also blamed on Twitter, and some of these tweets even received thousands of “likes” (to avoid spreading offensive content, I include only one of these tweets). Users who blame the victims claim that these women were foolish to be defrauded, dated the fraudster for his money, and deserved their losses (The Tinder Swindler, n.d.).

Cecilie has said she felt confused when she saw these blaming comments; she was annoyed but tried to stay optimistic (Clarke-Billings, 2022).

Fig. 2. Screenshot of a victim-blaming tweet (https://twitter.com/lazaruskumi/status/1490654304096731140, n.d.).

Online hate speech and online victim blaming

We can understand online hate speech as negative descriptions, on social network platforms, of users with specific attributes (Parekh, 2012, as cited in Flew, 2021).

Online victim blaming is a kind of online hate speech: negative comments on the Internet directed at the victims of specific events (Jane, 2017). These comments generally hold that victims are responsible for the harm the perpetrator caused. Online victim blaming is not limited to social media platforms such as Facebook, Reddit, and Twitter. It also appears in the review or discussion sections of movie rating websites, news websites, and personal blogs.

Body: harms, sources, and solutions

The harms of online victim blaming

The harm of online victim blaming shows up in several ways. For victims themselves, it may damage mental and physical health (Jane, 2017), and conditions such as depression may emerge (ELSA International, n.d.). According to research conducted by eSafety, about 37 percent of hate speech victims in Australia have suffered mental stress (eSafety Commissioner, n.d.). In the Tinder Swindler case, Cecilie faces two burdens. On the one hand, she has to cope with the distress of losing confidence in love. On the other hand, she must repay the loans she took out to give Simon Leviev money (Clarke-Billings, 2022). Her life was already hard after the scam; additional blaming discourse may make her feel worse. Another victim, Pernilla, noted that scam cases have been linked to suicides (Clarke-Billings, 2022).

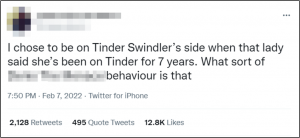

Online hate speech can also harm the social media platform itself. If a platform cannot manage or restrict hate speech effectively, its online environment may become unfriendly to users, who may find it hard to communicate in a friendly way and express their thoughts. As a result, the company may lose users or customers, which causes economic damage. For instance, a Reddit post blaming the victims of the Tinder Swindler event received over 2000 “Likes,” while some replies in its comment section that sided with the victims received fewer than 5 “Likes” (The Victims of “the Tinder Swindler” Were Not Brave, n.d.). Users who support the victims may therefore lose trust in the forum and leave, costing the platform potential customers.

Fig. 3. Blaming post with more than 2000 “Likes” (The Victims of “the Tinder Swindler” Were Not Brave, n.d.).

Fig. 4. Reply supporting the victims with 4 “Likes” (The Victims of “the Tinder Swindler” Were Not Brave, n.d.).

The reasons for online victim blaming

Online victim blaming has multiple causes, including social bias and prejudice and the way information spreads on the Internet.

Social platforms can be used by people with both appropriate and inappropriate views (Matamoros-Fernández, 2017). If users already hold biases or stereotypes about a particular group or individual, they may carry those biases online. In the Tinder Swindler case, the insulting comments resemble traditional news or film reviews that have moved to the online space. Victim blaming comes from an incomplete understanding of the role of victims: people tend to imagine a purely weak victim with perfect morality, and when reality differs from that image, blaming may emerge (The Canadian Resource Centre for Victims of Crime, 2009). If these users hold an intrinsic bias against women or envy the rich, they may feel a kind of dark satisfaction on learning that women were defrauded by a man pretending to be wealthy.

The second cause of online hate speech lies with the platform. On the one hand, the algorithm may recommend similar content to users by analyzing their attitudes and interests (Nguyen et al., 2014). The recommended content might then become gradually more radical, and bias, discrimination, and the corresponding hate discourse may emerge. On the other hand, the way the platform is managed may be a significant factor. On Reddit, the lack of platform intervention against inappropriate content could result in an atmosphere biased against women (Massanari, 2016). Similarly, if Twitter does not remove posts blaming the victims of the Tinder Swindler event, this harmful content may negatively affect more users and create an unfriendly online environment for victims of fraud. Because information spreads quickly on the Internet, abusive discourse can spread widely in a short time without intervention.

How to solve the problem

Broadly, there are three approaches to management: giving management rights to the authorities, to the platform, or to users.

- The government

The first option is to give management rights to the authorities: the government can enact and enforce regulations or laws to prevent such events and apply corresponding penalties when they occur. To prevent victim blaming, the government should work on both online hate speech management and victim protection.

The Online Safety Bill is one attempt to deal with online hate speech. However, the BBC has reported concerns about “over deletion” of information by platforms, as well as about new ways in which prohibited content might spread (“Online Safety Bill: Harmful and Illegal Content Could Evade New Laws, MPs Warn,” 2022). This reflects the delicate balance between strict and loose laws. If the rules are too strict, users might become afraid to publish their thoughts and express themselves for fear of punishment. If the rules are too loose, some indirect or implicit racist or anti-feminist content may escape identification as hate speech. As for victim protection, Cecilie said victims need support from the law and the government; the victims have tried to convince governments in multiple countries to focus on victims (Nast, 2022).

- The platform

The second option is to give management rights to the platform. The platform may apply multiple approaches to detecting and handling potential hate speech. Some platforms state their position on blaming discourse in their rules. For example, Facebook’s policies prohibit victim blaming (Hate Speech | Transparency Center, n.d.).

For detection, AI is an effective way to classify content and find blaming content. For example, Facebook is trying to use algorithms to identify content as harmful or offensive (Allan, 2017). Although AI can process a large amount of data in a short time, it can make mistakes and even lead to new hate speech or online discrimination. For instance, in 2021, Facebook’s AI put an inappropriate label on a video of Black men; Facebook identified this as an AI error and has re-evaluated its algorithm and labeling system (Mac, 2021). Beyond being a technical error, this misclassification may give rise to new online hate speech, such as discrimination based on race. For example, users could circulate memes based on the incident as humor. However, such memes are racially abusive content under the cover of humor, and spreading them can contribute to online discrimination.

To solve the problem, software engineers need to refine the algorithm by developing new cutting-edge technologies and working with algorithm designers or machine learning researchers from varied backgrounds and experiences. As for victim protection, the platform should learn more about victim blaming and improve its ability to detect blaming content.

- The users

The third option is to give the management rights to users. In the context of online victim blaming, victims should use the Internet as a tool to express their feelings and thoughts.

Pernilla said most scam victims do not tell others about their experiences out of guilt, but Cecilie encouraged victims like her to speak out and let the public know what happened to them (Nast, 2022). Speaking out on the Internet is vital. By posting their experiences online, victims can warn others against falling for similar frauds. Speaking out also lets the public know how victims feel and think. Learning about victims’ experiences can help the public understand the details and change their stereotypes of victims. In Australia, about 70 percent of users believe everyone is responsible for managing online hate speech (eSafety Commissioner, n.d.). In the case of victim blaming in the Tinder Swindler event, everyone should make an effort to deal with blaming content and keep online communication friendly.

Conclusion

Online platforms give us space to communicate; at the same time, discrimination and the blaming of victims in certain kinds of cases can also emerge on the Internet. We refer to this kind of content as online victim blaming, which is a kind of online hate speech. This content harms the victims themselves, other users on the platform, and the overall online atmosphere. It may result from users’ intrinsic bias and prejudice, the filter bubble created by recommendation systems, and the fast spread of information. To solve the problem, we need to find a balance in distributing management rights among the authorities, the platform, and the users.

In short, it is our responsibility to maintain a healthy and friendly online environment. This matters not only for the development of the Internet but also for society. As the influencer Bella Mackie said, online users should stop blaming the victims of the Tinder Swindler event and care about their feelings, as we do not know when unfortunate things will happen to us (Mackie, 2022).

References

Allan, R. (2017, June 27). Hard Questions: Who Should Decide What Is Hate Speech in an Online Global Community? About Facebook. https://about.fb.com/news/2017/06/hard-questions-hate-speech/

Chaudhary, M., Saxena, C., & Meng, H. (2021). Countering Online Hate Speech: An NLP Perspective. ArXiv:2109.02941 [Cs]. https://arxiv.org/abs/2109.02941

Clarke-Billings, L. (2022, February 12). “Tinder Swindler conned us out of £260k – we’re traumatised but won’t give up.” OK! Magazine. https://www.ok.co.uk/lifestyle/tinder-swindler-netflix-simon-women-26202677

ELSA International. (n.d.). Online Hate Speech: Hate or Crime?

eSafety Commissioner. (n.d.). Online hate speech. ESafety Commissioner. https://www.esafety.gov.au/research/online-hate-speech

Flew, T. (2021). Regulating Platforms. Polity Press.

Hate Speech | Transparency Center. (n.d.). Transparency.fb.com. https://transparency.fb.com/en-gb/policies/community-standards/hate-speech/

How to report abusive behavior on Twitter | Twitter Help. (n.d.). Help.twitter.com. Retrieved April 7, 2022, from https://help.twitter.com/en/safety-and-security/report-abusive-behavior#:~:text=Navigate%20to%20the%20Tweet%20you

https://twitter.com/lazaruskumi/status/1490654304096731140. (n.d.). Twitter. Retrieved April 6, 2022, from https://twitter.com/LazarusKumi/status/1490654304096731140?ref_src=twsrc%5Etfw%7Ctwcamp%5Etweetembed%7Ctwterm%5E1490654304096731140%7Ctwgr%5E%7Ctwcon%5Es1_&ref_url=https%3A%2F%2Fwww.pedestrian.tv%2Fentertainment%2Ftinder-swindler-victim-blaming%2F

Jane, E. A. (2017). Gendered cyberhate, victim-blaming, and why the internet is more like driving a car on a road than being naked in the snow. Cybercrime and Its Victims. https://doi.org/10.4324/9781315637198

Mac, R. (2021, September 3). Facebook Apologizes After A.I. Puts “Primates” Label on Video of Black Men. The New York Times. https://www.nytimes.com/2021/09/03/technology/facebook-ai-race-primates.html

Mackie, B. (2022, February 13). The Tinder Swindler’s Victims Were Neither Stupid Nor Gold Diggers. British Vogue. https://www.vogue.co.uk/arts-and-lifestyle/article/the-tinder-swindler

Massanari, A. (2016). #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346. https://doi.org/10.1177/1461444815608807

Matamoros-Fernández, A. (2017). Platformed racism: the mediation and circulation of an Australian race-based controversy on Twitter, Facebook and YouTube. Information, Communication & Society, 20(6), 930–946. https://doi.org/10.1080/1369118x.2017.1293130

Nast, C. (2022, February 4). Cecilie Fjellhøy and Pernilla Sjoholm on getting conned by The Tinder Swindler. British GQ. https://www.gq-magazine.co.uk/culture/article/the-tinder-swindler-netflix

Nguyen, T. T., Hui, P.-M., Harper, F. M., Terveen, L., & Konstan, J. A. (2014). Exploring the filter bubble. Proceedings of the 23rd International Conference on World Wide Web – WWW ’14. https://doi.org/10.1145/2566486.2568012

Online Safety Bill: Harmful and illegal content could evade new laws, MPs warn. (2022, January 24). BBC News. https://www.bbc.com/news/uk-politics-60103156

The Canadian Resource Centre for Victims of Crime. (2009). The Canadian Resource Centre for Victims of Crime Centre canadien de ressources pour les victimes de crimes. https://crcvc.ca/docs/victim_blaming.pdf

The Tinder Swindler. (n.d.). Netflix Media Center. https://media.netflix.com/en/only-on-netflix/81254340

The victims of “The Tinder Swindler” were not brave. (n.d.). https://www.reddit.com/r/unpopularopinion/comments/sw72o3/the_victims_of_the_tinder_swindler_were_not_brave/