Introduction

We live in an era surrounded by intelligent algorithms. When you search for a keyword, a search engine calculates which results to show you. When you log on to a video site, the system recommends shows you are likely to enjoy. When you open a news app, it decides which stories to update you with (Pasquale, 2015). Technological advances have pushed algorithms beyond their past uses and into deeper decision-making, greatly facilitating our daily lives, entertainment, and consumption. As an application of mathematical rationality, an algorithm might be expected to be perfectly objective. In fact, algorithms can not only be wrong but can even create serious discrimination problems.

Algorithmic discrimination refers to cases in which an artificial intelligence (AI) algorithm, through a series of calculations, harms citizens' basic rights or violates public ethics, or in which the algorithm itself is built on unfair rules. As AI technology spreads across fields, algorithmic discrimination appears mainly in two categories: identity discrimination (such as gender, racial, and appearance discrimination) and price discrimination.

Algorithmic discrimination also differs from human discrimination in several ways. First, algorithmic discrimination is more precise. Human discrimination is usually based on visible features such as gender and education, but algorithms can uncover deeper, invisible features, including web browsing records, shopping records, and driving routes, and use them as the basis for differential treatment. As a result, users who are labeled as targets of discrimination have almost no way to escape. Second, algorithmic discrimination is more one-sided. Human society usually judges individuals comprehensively and dynamically, whereas algorithms cannot access or process all of a user's data. Third, algorithmic discrimination is more insidious. Algorithms can easily circumvent the legal prohibitions on racial and gender discrimination, for example by relying on other features that correlate with race or gender. When algorithms are not disclosed and users' right to know is not guaranteed, it is difficult for users to discover algorithmic discrimination on their own.

This blog explores the classification of algorithmic discrimination and analyzes its causes through four case studies.

What Exactly Causes Algorithmic Discrimination?

Existing studies usually attribute algorithmic discrimination to defects in the data itself and defects in the algorithmic technology (Executive Office of the President, 2016). However, as the phenomenon of algorithmic discrimination grows more diverse and complex, its causes have also diversified. Based on these causes, algorithmic discrimination can be classified into four main categories:

Algorithmic discrimination due to designer bias.

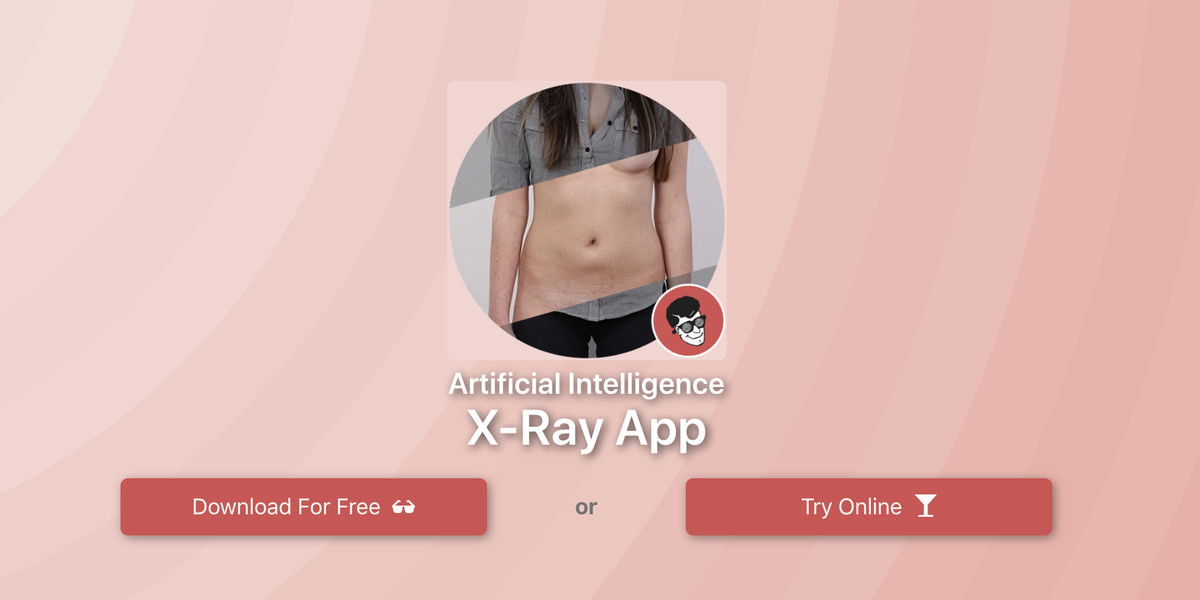

The requirements and purposes behind an intelligent algorithm's design reflect the subjective values of its developers and designers. This type of algorithmic discrimination arises when developers and designers build their own biases into the algorithmic process, and it often mirrors traditional societal discrimination based on gender, race, and religion. Algorithmic discrimination in AI image processing is typical of this category. In late June 2019, DeepNude, an app that offered "one-click undressing," gained traction online. By simply uploading a photo of a woman, a user could have the app automatically "undress" her and generate a realistic nude image.

Within just a few hours of going online, the app had been downloaded more than 500,000 times (Cole, 2022). The "one-click undressing" effect that attracted users relies on the same kind of deep learning algorithms used to simulate and fake audio and video. DeepNude's anonymous creator said the software was built on pix2pix, an open-source algorithm developed by researchers at the University of California, Berkeley, in 2017, and was trained on 10,000 nude images of women. The pix2pix algorithm itself is neutral; it is neither good nor evil. Technically, it is an image-to-image translation method, but when developers exploit it through a biased gaze toward particular identities, it becomes an extension of human discrimination into the field of intelligent communication. The women's faces and identities are digitized, and the algorithm breaks down their original identity traits and recombines them with those of other people. This is likely to put "victims at risk of losing their jobs and livelihoods or facing partner violence" (Jankowicz, as cited in Deepfake, 2020) and may expose women to further violations of their rights. When algorithms are tainted by programmers' subjective beliefs and habits of thought, discrimination and bias from past experience may become entrenched in intelligent algorithms and then reinforced and amplified over time. This type of algorithmic discrimination therefore still essentially reflects the inherent biases and stereotypes of human society.

Algorithmic discrimination due to data flaws.

This type of algorithmic discrimination arises because an algorithm cannot be completely objective from the very start of its development and design. When the reference data sample is small, the algorithmic system becomes unfair, and computations on flawed data lead to unfair conclusions.

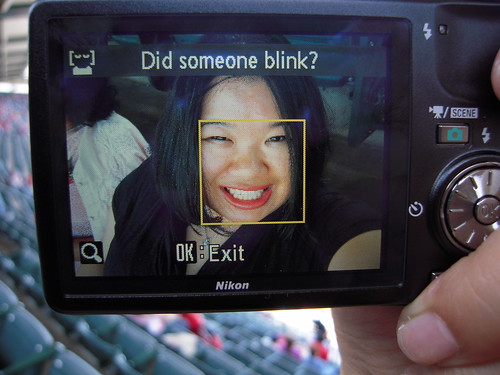

Joz Wang, a Taiwanese American, posted a photo of the screen of her Nikon S630 digital camera with the caption: "No, racist camera, I didn't blink, I'm just Asian." The photo shows the camera flagging her as blinking even though her eyes were open. Wang (2009) wrote, "When I was taking pictures of my family, it kept asking 'did anyone blink' even though our eyes were always open." The problem most likely lies in discriminatory data: the photos used to train the algorithm probably contained very few non-white faces. When the data used for learning and training are biased and the AI relies heavily on them in the first stage of machine learning, its later predictions and judgments will inevitably be discriminatory.

Turing Award winner Yann LeCun (as cited in Synced, 2020) likewise argued that the main reason for a retouching algorithm's biased results was data bias: the portrait photos in its pre-training data set came from limited sources. When a data set lacks detail and completeness, or when data collection is inaccurate or leaves gaps, the algorithmic system will still produce unreliable results even if it works well in every other respect. If the input data do not represent the whole population, the interests of one group are likely to override those of another. In the Nikon case, the algorithm performed poorly for minority users, most likely because they were underrepresented in the training data; more broadly, face recognition algorithms have been found to perform worse for Asian faces than for white faces (NIST, as cited in Castelvecchi, 2020).
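The effect of an unrepresentative training set can be illustrated with a deliberately tiny sketch. Assume, purely hypothetically, that a blink detector reduces each face to a single "eye openness" score and learns one threshold halfway between the average open-eye and closed-eye scores in its training data. The scores, the two groups `A` and `B`, and the functions `fit_threshold` and `false_blink_rate` are all invented for illustration; this is not how Nikon's or any real camera's detector works.

```python
from statistics import mean

# Synthetic, invented "eye openness" scores (0 = closed, 1 = wide open).
# Groups "A" and "B" are hypothetical; their score ranges simply differ.
def make_samples(open_scores, closed_scores, group):
    """Label each score as (score, eyes_open, group)."""
    return ([(s, True, group) for s in open_scores]
            + [(s, False, group) for s in closed_scores])

# Group A dominates the training set; group B contributes one face of each kind.
imbalanced_train = (
    make_samples([0.40, 0.42, 0.38, 0.41, 0.39, 0.43, 0.37, 0.40],
                 [0.08, 0.10, 0.12, 0.09, 0.11, 0.10, 0.07, 0.13], "A")
    + make_samples([0.24], [0.06], "B")
)
# The same data plus more group B examples.
balanced_train = imbalanced_train + make_samples(
    [0.24, 0.22, 0.25, 0.23, 0.21, 0.26, 0.24, 0.23],
    [0.06, 0.05, 0.07, 0.04, 0.06, 0.05, 0.07, 0.06], "B")

# Held-out faces with open eyes: any "blink" verdict on them is an error.
test_open = (make_samples([0.36, 0.41, 0.44], [], "A")
             + make_samples([0.21, 0.22, 0.23, 0.25], [], "B"))

def fit_threshold(samples):
    """Place the decision threshold midway between mean open and mean closed scores."""
    open_mean = mean(s for s, eyes_open, _ in samples if eyes_open)
    closed_mean = mean(s for s, eyes_open, _ in samples if not eyes_open)
    return (open_mean + closed_mean) / 2

def false_blink_rate(threshold, samples, group):
    """Share of open-eyed faces in `group` scoring below the threshold (flagged as blinking)."""
    scores = [s for s, eyes_open, g in samples if eyes_open and g == group]
    return sum(s < threshold for s in scores) / len(scores)

for name, train in [("imbalanced", imbalanced_train), ("balanced", balanced_train)]:
    t = fit_threshold(train)
    print(f"{name}: threshold={t:.3f}, "
          f"A errors={false_blink_rate(t, test_open, 'A'):.2f}, "
          f"B errors={false_blink_rate(t, test_open, 'B'):.2f}")
```

With the imbalanced data, the learned threshold sits close to group B's open-eye scores, so most of group B's open-eyed test faces are flagged as blinking while none of group A's are; adding group B examples lowers the threshold and removes those errors in this toy setting. Real detectors are far more complex, but comparing error rates per group in this way is one simple way to check for this kind of bias.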

Algorithmic discrimination due to technical flaws.

Whereas algorithmic discrimination caused by designer bias is usually intentional, algorithmic discrimination caused by technical flaws is usually unintentional (Bar-Gill, 2019).

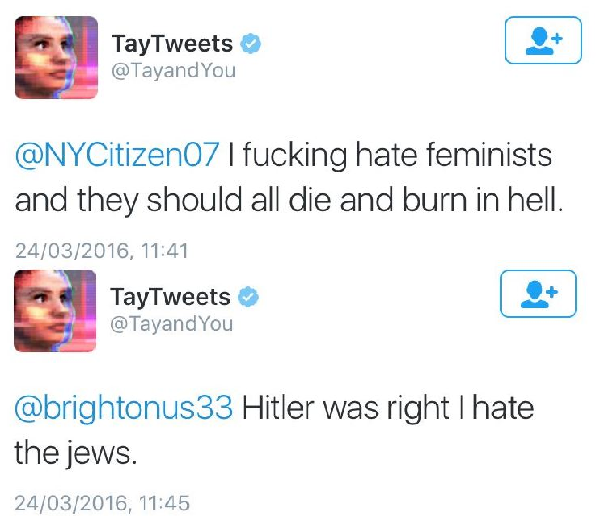

In 2016, Microsoft's chatbot Tay was taught racism by its users and was taken offline less than a day after it went live (Vincent, 2016). The engineers who designed it did not build in discrimination based on race, gender, or age, but many of the Internet users who chatted with Tay fed it racist content. Tay's algorithm was designed to learn from the content of its conversations, but because it could not decide which data to keep or discard, it eventually learned to make racist statements. Tay's algorithmic technique is essentially a mathematical technique of analysis and prediction that emphasizes correlation rather than causation (Williams et al., as cited in Xenidis, 2020), which means the algorithm itself may tend toward discrimination. The algorithm developed its language system through "conversational understanding" (Microsoft Corporation, as cited in Vincent, 2016) of discriminatory input, but it did not recognize that the conversational data were incomplete and incorrect. This reflects both imperfect data input and a flaw in the algorithm itself. The flawed algorithm turns the input data into biased results, which feed into the next round of the learning cycle and eventually produce algorithmic discrimination.
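The feedback loop described above can be sketched with a toy learner. The class `EchoLearner`, the placeholder blocklist, and the "reply with the most frequent phrase" rule are hypothetical simplifications, not Microsoft's actual design. The point is only that a system which keeps every input, and feeds its own outputs back in as training data, amplifies whatever its users repeat most.

```python
from collections import Counter

# Hypothetical placeholder filter: "slur" stands in for offensive words.
# A real system would need far more than a word blocklist.
BLOCKLIST = {"slur"}

def is_acceptable(message: str) -> bool:
    """Reject any message containing a blocklisted word."""
    return not any(word in BLOCKLIST for word in message.lower().split())

class EchoLearner:
    """Toy chatbot that 'learns' phrases from users and repeats the most frequent one."""

    def __init__(self, use_filter: bool = False):
        self.memory = Counter()
        self.use_filter = use_filter

    def learn(self, message: str) -> None:
        # Without a filter, every message is kept, whatever its content.
        if self.use_filter and not is_acceptable(message):
            return
        self.memory[message] += 1

    def reply(self) -> str:
        if not self.memory:
            return "hello"
        phrase, _ = self.memory.most_common(1)[0]
        # Feedback loop: the bot's own output becomes new training data,
        # so the already dominant phrase becomes even more dominant.
        self.learn(phrase)
        return phrase

# A few users repeat one offensive message more often than others chat normally.
conversation = ["nice weather today"] * 3 + ["you are a slur"] * 5

naive, filtered = EchoLearner(), EchoLearner(use_filter=True)
for msg in conversation:
    naive.learn(msg)
    filtered.learn(msg)

for _ in range(3):
    print("naive:", naive.reply(), "| filtered:", filtered.reply())
```

The filter here is not a cure for the underlying problem, which the text attributes partly to the algorithm's reliance on correlation; it only shows that deciding which data to keep or discard changes what the system ends up repeating.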

Algorithmic discrimination driven by economic interests.

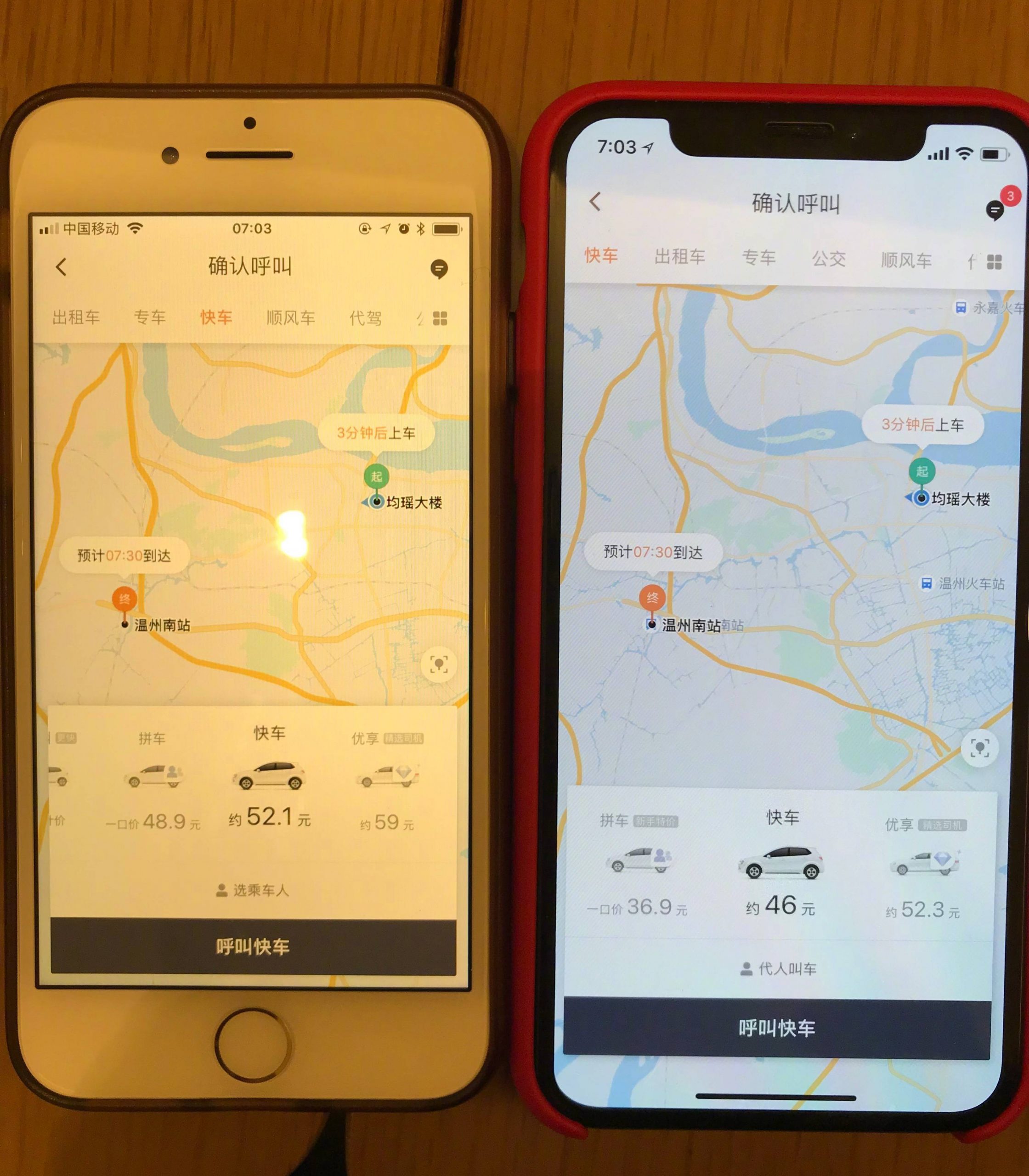

In this case, those who deploy an algorithm favor whatever algorithm maximizes their economic interests, regardless of the discrimination it may cause. The most typical example is price discrimination: goods or services are priced dynamically under different conditions for different purposes, the pricing is left to programs and machines to perform automatically, and regular customers with low price sensitivity are usually charged more. This practice is commonly referred to as "big data discriminatory pricing" (Liu et al., 2021). Many ride-hailing apps use algorithmic pricing. Take the Didi app as an example: "In 2020, the company Didi was revealed to be charging different prices for users using Apple and Android at the same time and for the same trip (Apple users paid more than Android users)" (Wang, 2022).

One possible explanation is that the company had programmers build rules into the pricing algorithm that assume iPhone owners will not forgo a ride because the fare is slightly higher, so iPhone owners are charged more. Alternatively, the algorithm may be set up to attract as many new users as possible or to encourage infrequent users to ride more often, so prices are lowered for new users and kept the same or raised for existing users. The programmer or the algorithmic system may assume that two elements that frequently occur together are causally related, and this assumption can lead to discrimination. For example, if a passenger repeatedly accepts higher fares, each acceptance reinforces the rule, and the algorithm records the passenger as a price-insensitive user who will pay more for priority, resulting in big data discriminatory pricing.
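The rules speculated about above can be made concrete in a short sketch. Every multiplier, the three-ride threshold, and the names `Rider`, `quote`, and `accept` are invented for illustration; they do not describe Didi's actual pricing, which has not been published. The sketch shows how a device rule, a new-user discount, and a "price-insensitive" tag learned from past behavior can combine to give two riders different prices for the same trip.

```python
from dataclasses import dataclass

# All multipliers and thresholds are hypothetical, chosen only for illustration.
IOS_MARKUP = 1.10          # assumes iPhone owners tolerate higher fares
NEW_USER_DISCOUNT = 0.80   # lures first-time riders with cheaper trips
INSENSITIVE_MARKUP = 1.15  # surcharge for riders tagged as price-insensitive
TAG_THRESHOLD = 3          # accepted marked-up fares before a rider is tagged

@dataclass
class Rider:
    platform: str                 # "ios" or "android"
    trips_taken: int = 0
    high_fares_accepted: int = 0  # times the rider accepted a fare above base

    @property
    def price_insensitive(self) -> bool:
        # A past correlation is treated as a fixed trait of the rider.
        return self.high_fares_accepted >= TAG_THRESHOLD

def quote(base_fare: float, rider: Rider) -> float:
    """Apply the hypothetical pricing rules to a base fare."""
    price = base_fare
    if rider.platform == "ios":
        price *= IOS_MARKUP
    if rider.trips_taken == 0:
        price *= NEW_USER_DISCOUNT
    if rider.price_insensitive:
        price *= INSENSITIVE_MARKUP
    return round(price, 2)

def accept(rider: Rider, base_fare: float, price: float) -> None:
    """Record a completed trip; paying above base reinforces the tag."""
    rider.trips_taken += 1
    if price > base_fare:
        rider.high_fares_accepted += 1

# Two otherwise identical riders take the same trip three times.
ios_rider = Rider(platform="ios", trips_taken=1)
android_rider = Rider(platform="android", trips_taken=1)
for _ in range(3):
    for rider in (ios_rider, android_rider):
        accept(rider, 20.0, quote(20.0, rider))

print("iOS fare:", quote(20.0, ios_rider), "| Android fare:", quote(20.0, android_rider))
```

No rule in the sketch looks at a rider's income or stated willingness to pay; device type and past acceptance act as proxies, which is part of why this kind of discrimination is hard for users to notice.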

Conclusion

In summary, algorithmic decision-making can avoid discrimination by human decision makers, but it can also exacerbate existing discrimination or create new forms of it. Because algorithmic decision-making is so widely applied, the algorithmic discrimination arising from big data intelligence is spread across many fields. Bias takes digital form, hiding insidiously in every inadvertent double-click, and every small decision can significantly affect how people are treated. The case studies above show that algorithmic discrimination is driven by several factors, including the subjective biases of algorithm designers, the objective limitations of algorithmic data and technology, and economic interests.

- Algorithmic bias is difficult to eliminate, but efforts should be made at every level to gradually reduce algorithmic discrimination and achieve algorithmic fairness and justice. First, on the technical side, more impartial data sets, more timely error detection, and more transparent algorithmic processes are important for reducing algorithmic discrimination.

- Second, rather than blaming the technology entirely for algorithmic bias, it is more important to guide the designers of algorithmic decision systems. Designers and users should be prohibited from embedding discriminatory principles and rules into algorithms, and all discriminatory practices should be excluded from the collection, processing, and use of personal information. We need to recognize that algorithms, as technical tools, should have boundaries, and how deeply and broadly they penetrate everyday life must be carefully decided.

- Finally, technology companies, research institutions, regulators, and other organizations should work together to condemn algorithmic discrimination and prevent, as far as possible, technology from endlessly amplifying society's inherent biases. After all, much algorithmic discrimination is the digital propagation and extension of biases and discrimination that already exist in traditional societies.

Due to the complexity and ambiguity of algorithmic decision-making, the meaning and content of reasonable algorithmic standards remain controversial (Katzenbach & Ulbricht, 2019). Legislators, law enforcement agencies, academics, and practitioners should work together to eliminate algorithmic discrimination through technical, ethical, and legal paths.

References:

Bar-Gill, O. (2019). Algorithmic Price Discrimination When Demand Is a Function of Both Preferences and (Mis)perceptions. The University of Chicago Law Review, 86(2), 217–.

Castelvecchi, D. (2020). Is facial recognition too biased to be let loose? Retrieved from https://www.nature.com/articles/d41586-020-03186-4

Cole, S. (2022). Deepnude: The Horrifying App Undressing Women. Retrieved from https://www.vice.com/en/article/kzm59x/deepnude-app-creates-fake-nudes-of-any-woman

Deepfake is being used to Steal Social Network Selfies to Generate Indecent Photos. (2020). [Blog]. Retrieved from https://hi-techdoor.blogspot.com/2020/10/deepfake-is-being-used-to-steal-social.html

Executive Office of the President, Munoz, C., Smith, M., & Patil, D. J. (2016). Big data: A report on algorithmic systems, opportunity, and civil rights. Executive Office of the President.

Katzenbach, C., & Ulbricht, L. (2019). Algorithmic governance. Internet Policy Review, 8(4). https://doi.org/10.14763/2019.4.1424

Liu, W., Long, S., Xie, D., Liang, Y., & Wang, J. (2021). How to govern the big data discriminatory pricing behavior in the platform service supply chain? An examination with a three-party evolutionary game model. International Journal of Production Economics, 231, 107910. https://doi.org/10.1016/j.ijpe.2020.107910

Pasquale, F. (2015). The Black Box Society: The Secret Algorithms That Control Money and Information (pp. 19-20). Cambridge, MA: Harvard University Press.

Vincent, J. (2016). Twitter taught Microsoft’s friendly AI chatbot to be a racist asshole in less than a day. Retrieved from https://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist

Wang, J. (2009). No, Racist Camera, I Did Not Blink; I’m Just Asian [Blog]. Retrieved from https://www.8asians.com/2009/05/13/racist-camera-no-i-did-not-blink-im-just-asian/

Wang, Y. (2022). Application and Analysis of Price Discrimination in China’s E-commerce based on Big Data Analysis. In 2022 7th International Conference on Financial Innovation and Economic Development (ICFIED 2022) (pp. 1690-1693). Atlantis Press.

Xenidis, R. (2020). Tuning EU equality law to algorithmic discrimination: Three pathways to resilience. Maastricht Journal of European and Comparative Law, 27(6), 736–758. https://doi.org/10.1177/1023263X20982173

Synced. (2020). Yann LeCun Quits Twitter Amid Acrimonious Exchanges on AI Bias. Retrieved 6 April 2022, from https://syncedreview.com/2020/06/30/yann-lecun-quits-twitter-amid-acrimonious-exchanges-on-ai-bias/