The evolution of the digital world and the emergence of social platforms have not been all roses and glamour. Social media presents serious challenges, and some of them are genuinely harmful. For this reason, this blog evaluates the prevalence of online hate speech across online platforms and social media. Of particular interest are notable cases of online race hate speech in the Australian context that propagate racism and discrimination against minority groups (Matamoros-Fernández, 2017). It is striking how the recent rise of digital spaces has exposed a hidden rot within our societies. It is also frightening, given the possible effects of hate speech on its victims. Racism propagated through online hate speech is a dangerous and prevalent trend on Australian social platforms, one that manifests in dynamic forms: sometimes camouflaged, potentially lethal and carrying considerable consequences.

Contextualizing Online Race Hate Speech

Firstly, it’s important to understand that online hate speech comes in different forms. In an age of social media and constant digital connection, the channels for abuse are many and varied. Race hate speech, however, is a particular concern in Australian online spaces. According to Jakubowicz (2017), online race hate speech has grown so rapidly that it appears to have overwhelmed the capacity of the social institutions responsible for controlling it. Understood broadly, racial hate speech takes multiple forms and follows multiple strategies, from thinly veiled jokes laden with racial innuendo to direct attacks launched from hidden, anonymous identities. The Australian Human Rights Commission (AHRC) has been explicit about what constitutes cyber-racism (Australian Human Rights Commission, n.d.): offensive jokes and comments, verbal abuse, name-calling, intimidation, harassment, and public commentary intended to provoke hostility towards certain ethnic groups. The issue is remarkably broad, complex and dynamic.

Racism on social platforms also seems to be growing deeper roots in multicultural societies like Australia. Becoming a victim takes little: simply being present in a digital space dominated by perpetrators is enough. According to Matamoros-Fernández and Farkas (2021), social media has broadened socio-political landscapes, giving rise to both new and old racist routines. Racism on social media plays out in ugly ways, and in Australia, cases targeting racial minorities have been common. We also need to reflect on the role of the companies that own and control social media platforms. Jakubowicz (2018) argues that the algorithms underlying social networking sites such as Facebook, Twitter and Instagram facilitate the spread of online racism while the owning corporations profit from it. While such claims may seem contentious, they are grounded in existing trends and evidence.

Recent findings on the impact of hate speech in Australia reveal a problem firmly rooted in society. According to findings on online hate speech in Australia in 2019 published by the Analysis & Policy Observatory (APO), about 14% of adults aged 18 to 65 were targets of online hate speech. Among those targeted, 36% took some action, 64% made no attempt to report it, and 58% reported negative impacts (Analysis & Policy Observatory, 2020). The APO findings also indicate that online racism accounts for a large share of these cases: over 30% of all online hate speech. The consequences of racial hate speech can be severe. Beyond the emotional distress victims experience, violence against them can also follow. The dynamic, shifting form of online racist acts and cyber-racism adds a further layer to the problem. In Australia, perpetrators of online racial hate speech have embraced anonymity, creating fake profiles and identities and using digital spaces to spew racial hate and misinformation against specific ethnicities.

Reviewing the Case Study of ‘Woden the Bogan’

A better way of illustrating the dynamism of racial hate speech and racism on Australian social platforms is through the Woden the Bogan case. You might wonder what the name stands for and how it connects to cyber-racism. ‘Woden the Bogan’ is the pseudonym under which Jason Murtagh posted racist content anonymously. Recently, Murtagh, a Perth nightclub manager, became the first individual in Australia to be convicted of publishing material to ‘incite racial hatred’ against minority ethnic groups under a fake online identity (Styles & Pilat, 2022). After losing his job during the Covid-19 pandemic lockdown, Murtagh spent the period from April 24 to August 17, 2020, sharing racially inflammatory memes in a private community group called ‘Politically Incorrect’. Another critical detail is that he used Gab.com, a self-proclaimed “free speech” social media platform. According to Styles and Pilat (2022), Gab tries to mimic Facebook but has no rules to block hate speech or take down offensive posts.

Picture showing the Woden the Bogan profile.

Image: Styles and Pilat, WAtoday, All rights reserved

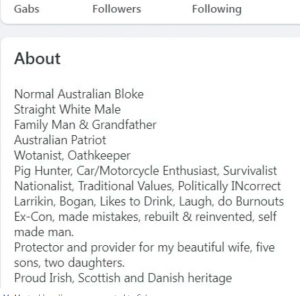

Pictures showing a section of the fake Woden the Bogan profile bio on Gab.

Image: Styles and Pilat, WAtoday, All rights reserved

The Woden the Bogan case study reveals fundamental issues underlying online racial hate speech and racism on Australian social platforms. Primarily, it shows how willing perpetrators are to hide their identities to carry out their acts. Although not all cases involve hidden identities (Matamoros-Fernández & Farkas, 2021), this case reveals a dangerous trend that harms society. It is a clear example of how cyber-racism can be both camouflaged and lethal. Using the fake Woden the Bogan profile, Jason Murtagh presented himself as a white supremacist and threatened vile actions against minority groups such as Indigenous people, Muslims, Asians and African Americans (Styles & Pilat, 2022), including running people over with his truck or setting his dogs on them. The profile itself was clearly meant to send a message: a “get out” message and a bio full of supremacist innuendo signalled his position and his determination to advance cyber-racism.

Overall, the case reveals the volatile nature of social media and its dangers. The ease of creating fake profiles, for instance, is concerning and raises questions about the role of social media organizations. These organizations have a part to play, but questions remain over their commitment and the effectiveness of their methods, and the prevalence of such cases offers ample evidence that they have not done enough to address the problem. Although the leading social media corporations have come under immense pressure lately, it is in the interest of every concerned person to understand the position and role of social media in the fight against online racial hate speech and racism on social platforms.

The Role of Social Media in Platform Racism and Possible Solutions

As the ‘Woden the Bogan’ case study highlights, social media platforms can directly contribute to the spread of racial hate speech and racism. Gab.com, for example, tolerates inciting remarks and is considered a haven for right-wing extremists (Styles & Pilat, 2022). With an estimated four million users, the site offers an ideal platform for spreading hate speech with little or no regulation. More worrying still, Gab is only a minor player among social networking sites. Facebook and Twitter, two of the major social media giants, are vast and have rules and moderators, yet hate speech still finds its way onto them (Jakubowicz, 2017). In the modern digital world, hate speech has become slippery: policymakers struggle to know where to focus, and perpetrators have developed many loopholes for bypassing regulations. As a result, people remain exposed to online racists, racial hate speech and cyber-racism.

It is a bold claim, but a fair one, that social media organizations have failed to protect their users against online hate speech and cyber-racism. Many earlier cases, like ‘Woden the Bogan’, could have been contained. While low-profile platforms like Gab might be downplayed because of their relative size and motives, leading players like Facebook and Twitter have arguably been even worse at enforcing rules against racial hate speech and racism on their platforms. Consider Facebook’s reluctance to remove hate speech content in Australia (Taylor, 2021). In a complaint lodged under Australia’s racial discrimination laws, the company was accused of failing to moderate hate speech by allowing explicitly anti-Islam pages to keep running. These accusations illustrate the leniency that exists on social media platforms. Various high-profile cases, including the racial abuse and online attacks directed at Adam Goodes, an Indigenous AFL player, were carried out through popular sites like Facebook and Twitter (Matamoros-Fernández, 2017). Facebook has also come under sharp scrutiny in Australia for failing to ban racist pages targeting Aboriginal people. Because of social media organizations’ negligence, perpetrators of online racial hate speech and cyber-racism have ample loopholes to exploit.

The solution to online racial hate speech and cyber-racism therefore lies not only in the actions of individual social media companies but in a collective approach. The problem is so widespread that policymakers and regulatory bodies have been overwhelmed (Jakubowicz, 2018). At the same time, trusting social media corporations alone to end this menace is futile, since they are part of the problem. A collaborative approach between government authorities and social media companies is therefore a reasonable choice. The ‘Woden the Bogan’ case study shows that a commitment to tracking and exposing perpetrators of racial hate speech can yield results: a months-long operation by the West Australian police identified Jason Murtagh as the real person behind the ‘Woden the Bogan’ character (Styles & Pilat, 2022). This suggests that sustained commitment can expose perpetrators. For its part, the government needs to start holding social media platforms responsible, including by imposing hefty fines on platforms that fail to adopt stricter measures against online race hate speech and cyber-racism. The corporations that own social media platforms, including Meta Platforms, Inc., Twitter and Alphabet, in turn need to step up their efforts against cyber-racism in Australia and globally. This is not an Australian problem alone but a global one; however, Australian authorities could set an excellent example through practical measures and commitment, as the case study shows.

Conclusion

Online racial hate speech and racism on social platforms are a real menace that many people face globally. Multicultural societies like Australia face stiff challenges as cases of cyber-racism rise. The ‘Woden the Bogan’ case study underlines how dynamic cyber-racism is in practice: it can come camouflaged and hidden, but committed efforts by security authorities can expose the perpetrators. It is also high time social media corporations and their parent companies owned up to their role in online racial hate speech, or faced enormous repercussions from government authorities. The bottom line is that it will take a collective approach to restore sanity to social media platforms.

Reference List

Analysis & Policy Observatory. (2020). Online hate speech: findings from Australia, New Zealand and Europe. Retrieved from https://apo.org.au/node/276421

Australian Human Rights Commission. (n.d.). What is Cyber-Racism? Retrieved from https://humanrights.gov.au/our-work/race-discrimination/cyber-racism

Jakubowicz, A. (2017). Alt_Right White Lite: trolling, hate speech and cyber racism on social media. Cosmopolitan Civil Societies: an Interdisciplinary Journal, 9(3), 41-60. https://doi.org/10.5130/ccs.v9i3.5655

Jakubowicz, A. (2018). Algorithms of hate: How the Internet facilitates the spread of racism and how public policy might help stem the impact. Journal and Proceedings of the Royal Society of New South Wales, 151(467/468), 69-81. https://search.informit.org/doi/10.3316/informit.790571095083969

Matamoros-Fernández, A. (2017). Platformed racism: the mediation and circulation of an Australian race-based controversy on Twitter, Facebook and YouTube. Information, Communication & Society, 20(6), 930–946. https://doi.org/10.1080/1369118X.2017.1293130

Matamoros-Fernández, A., & Farkas, J. (2021). Racism, hate speech, and social media: A systematic review and critique. Television & New Media, 22(2), 205-224. https://doi.org/10.1177/1527476420982230

Styles, A., & Pilat, L. (2022). ‘Woden the Bogan’ tests Australian power to police online racism. WAtoday. Retrieved from https://www.watoday.com.au/national/western-australia/woden-the-bogan-tests-australian-power-to-police-online-racism-20211108-p5973r.html

Taylor, J. (2021). Facebook accused of not removing hate speech in complaint under Australia’s racial discrimination laws. The Guardian. Retrieved from https://www.theguardian.com/australia-news/2021/apr/22/facebook-accused-of-not-removing-hate-speech-in-complaint-under-australias-racial-discrimination-laws