Analyzing the Formation and Solutions of Filter Bubbles in Social Networks

Introduction

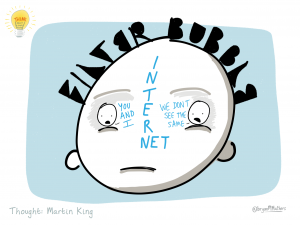

Over the past decade, people have been using the Internet more and more frequently, and the era of big data has made every user and event visible and countable (Flew, 2021). At the same time, social networks have connected people around the world, and the preferences users share online are recorded by algorithms. However, when people are immersed in social networks for long periods, they become highly susceptible to online discourse. Internet activist Eli Pariser, who coined the term “filter bubble,” observed that computers calculate and infer users’ preferences from their history on the Web in order to customize personalized information. It is worth noting that this personalized information further reinforces individual characteristics, and in this cycle each individual comes to live in a closed bubble, isolated from the outside world (Bail, 2021).

Although algorithmic recommendation technologies can solve the problem of information overload for users, they gradually isolate users in their own information bubbles, known as filter bubbles. Filter bubbles form mainly because of the interests of both platforms and users: being surrounded by information that interests them can make users addicted to an app, and more loyal users make more money for the platform. However, as filter bubbles proliferate, the polarization of user groups has become an issue that requires social attention. Personalization filters leave users at the mercy of their own devices, and social networks provide a kind of invisible automatic propaganda that indoctrinates users with their own ideas. As a result, users can quickly be pushed into a world where the network shows them the information it thinks they want to see, but not necessarily what they need to see (Andrejevic, 2019). When users are immersed in a single kind of message, they blindly believe their own opinions, ignore communication with others, and become biased and polarized in online debates.

This blog takes TikTok (a short video platform), Red (a social media platform), and TouTiao (a news app) as examples to analyze how filter bubbles manifest in social networks, what impact they have, and which solutions might alleviate them. Mark Andrejevic (2019) discusses the debate over solutions, including effective content regulation by platforms and greater media literacy among users. In addition, I will discuss the changes social media in China have made to alleviate the filter bubble.

TikTok

A typical case shows that the filter bubbles of social networks can polarize users and damage the online environment. In April 2022, the hashtag #Iacceptedmyno-makeupface trended on TikTok, where many people showed how they looked without makeup and rejected appearance anxiety. The topic reached 1.62 billion plays on TikTok and 180 million reads on Weibo. One girl also posted a video supposedly without makeup, but users discovered that she was wearing subtle makeup. The video then received over 10,000 comments and over a million likes. Some people cyberbullied the girl, accusing her of hypocrisy and calling her a “liar.” Others commented that she was a beautiful girl who didn’t deserve to be treated this way. Eventually, the girl deleted the video because she could not stand the online abuse, which caused her serious psychological harm.

TikTok determines users’ preferences based on their views, likes, comments, and shares. Therefore, once a user likes or comments on a video or topic, TikTok keeps pushing similar content. When the platform recommends content that users are not interested in, they can long-press the screen and select the “Not interested” button, and the platform will stop recommending similar content to them. Thus, under #Iacceptedmyno-makeupface, users who posted hate speech kept receiving videos with the same viewpoint, while users who posted supportive comments kept receiving videos criticizing the online abusers. Ultimately, both groups argued and trolled one another in the comments under the girl’s video, creating a negative experience for the girl and for uninvolved users.
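The preference mechanism described above (engagement signals raise a topic, “Not interested” removes it) can be sketched as a toy interest profile. This is a minimal Python illustration under stated assumptions, not TikTok’s algorithm: the weights in `SIGNAL_WEIGHTS`, the class `InterestProfile`, and the topic labels are all hypothetical.

```python
from collections import defaultdict

# Hypothetical engagement weights; the platform's real weights are not public.
SIGNAL_WEIGHTS = {"view": 1.0, "like": 3.0, "comment": 4.0, "share": 5.0}


class InterestProfile:
    """Toy per-user interest profile keyed by topic label."""

    def __init__(self):
        self.scores = defaultdict(float)  # topic -> accumulated engagement
        self.blocked = set()  # topics the user marked "Not interested"

    def record(self, topic, signal):
        """Add the weight of one engagement signal to the topic's score."""
        if topic not in self.blocked:
            self.scores[topic] += SIGNAL_WEIGHTS[signal]

    def not_interested(self, topic):
        """Drop the topic and stop recommending it."""
        self.blocked.add(topic)
        self.scores.pop(topic, None)

    def recommend(self, candidates, k=3):
        """Return up to k unblocked candidate topics, highest score first."""
        allowed = [t for t in candidates if t not in self.blocked]
        return sorted(allowed, key=lambda t: self.scores.get(t, 0.0), reverse=True)[:k]


# Hypothetical user from the case above: engages with criticism, dismisses support.
profile = InterestProfile()
profile.record("criticism", "comment")
profile.record("criticism", "like")
profile.record("beauty_tips", "view")
profile.not_interested("support")
print(profile.recommend(["support", "criticism", "beauty_tips", "travel"], k=2))
# ['criticism', 'beauty_tips']
```

In this toy run, a user who commented on and liked critical videos never sees the supportive topic they dismissed, which mirrors how the two camps in the case ended up with different feeds.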

In this case, TikTok as a social platform failed, to some extent, to play a monitoring role. Instead, TikTok used an invisible hand to sort users with different interests into different filter bubbles, leaving them without a complete picture of the information. For users who post extreme comments and are immersed in a bubble of negative information, the girl is reduced to a symbol of “hypocrisy” rather than a living, breathing human being. In a filter bubble, people are more likely to be immersed in their own world, which leads to audience polarization. The problem of polarization deserves more attention, especially on short video platforms. These platforms use autoplay to increase user stickiness and prolong usage time: when one video finishes, the next video with similar content or theme plays automatically. Hence, personalized recommendation does not save people from excessive information. Rather, it may trap users in a vicious circle: the more information they are exposed to, the more they need personalized recommendations; and the more they rely on personalized recommendations, the more similar information they receive.
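The vicious circle above can be illustrated with a small simulation of an autoplay feed. This is a hedged Python sketch, not a model of any real platform: the topic list, the `autoplay` function, and its `alpha` parameter (how strongly past viewing drives the next recommendation) are assumptions made for illustration. Diversity is measured as the Shannon entropy of the watch history, where a higher value means a more varied feed.

```python
import math
import random
from collections import Counter

TOPICS = ["news", "sports", "beauty", "food", "travel", "debate"]


def entropy_bits(counts):
    """Shannon entropy (in bits) of a watch history; lower means less diverse."""
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values() if c)


def autoplay(steps=500, alpha=2.0, seed=0):
    """Simulate an autoplay feed. Each next video's topic is drawn with
    probability proportional to weight**alpha, and watching it adds 1 to that
    topic's weight. alpha=0 ignores history (no personalization); alpha>1 is a
    hypothetical strong amplifier in which small early leads snowball."""
    rng = random.Random(seed)
    weights = {t: 1.0 for t in TOPICS}  # the user starts with no preference
    history = Counter()
    for _ in range(steps):
        probs = [weights[t] ** alpha for t in TOPICS]
        topic = rng.choices(TOPICS, weights=probs)[0]
        weights[topic] += 1.0  # watching reinforces the same topic
        history[topic] += 1
    return history


baseline = autoplay(alpha=0.0)
personalized = autoplay(alpha=2.0)
print(f"no personalization: {entropy_bits(baseline):.2f} bits")
print(f"strong personalization: {entropy_bits(personalized):.2f} bits")
print("max possible:", round(math.log2(len(TOPICS)), 2))
```

With `alpha=0.0` the feed ignores history and stays close to the maximum entropy; with `alpha=2.0` an early lead for one topic snowballs until it dominates the history. With `alpha=1.0` (plain proportional reinforcement) the narrowing is weaker and less predictable, so the effect in this toy depends on the assumed strength of amplification.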

Algorithms on social networks can predict which topics users are likely to be interested in based on their behavior. Still, social platforms seem to have a habit of distributing vertical content, confined to a single niche, to users. Some studies have found that social media algorithms prioritize polarizing, controversial, fake, and extremist content. TouTiao is a typical example.

TouTiao

Another example illustrates how the government and platforms can help alleviate the filter bubbles of social networks. TouTiao, a well-known Chinese news platform, was warned by the government in 2018 for spreading false advertisements, rumors, fake news, and pornographic content. Users became polarized within filter bubbles of negative information: users in the rumor bubble were terrified, those in the false-advertising bubble were extremely angry, and those in the pornography bubble were highly excited. The government’s online regulator then punished TouTiao by requiring the platform to monitor the content it distributed to users. Filter bubbles divide cyberspace into small worlds, so the Internet can no longer function as a public sphere. The public sphere is, in principle, open to all, and in it people are free to express and publicize their opinions on issues of general interest. However, as TouTiao’s slogan puts it, “What you care about is the headline.” The algorithm’s recommendations are based on personal preferences rather than on society’s public interest, so they may lead users to focus only on their own world and neglect social and public affairs. Research has also shown that personalized recommendations can contribute to users’ selective exposure (Andrejevic, 2019). The filtering mechanism of personalized recommendations makes people feel good about themselves and treat their narrow self-interest as everything. Yet people should be exposed to information that has not been pre-screened for them, and most citizens should share some degree of common experience. The filtering model of personalized recommendations instead separates people into small worlds of individual choice, and granting individuals unlimited filtering power over the communication system will lead to extreme fragmentation.
Moreover, filtering algorithms limit social interaction, making it difficult for general topics to be adequately discussed and for social consensus to form, which hinders decision-making on public events and the solving of social problems.

Platforms need interdisciplinary collaboration to improve personalized recommendation algorithms and break filter bubbles (Bail, 2021). In January 2018, TouTiao announced that it was hiring 2,000 content reviewers, shifting from an algorithm-led model to a combination of human and machine review. TouTiao also invited a team of experts from the media, academia, and government to help supervise the platform’s content and services. In addition, TouTiao launched “Spirit Dog,” described as China’s first AI-based mini-program for detecting vulgar content, which was intended not only to provide users with quality information but also to give them more comprehensive information and ease the filter bubble. TouTiao’s founder, Zhang Yiming, said that “technology must be full of responsibility and goodwill.” He divides corporate responsibility into three areas: platform governance, technological innovation, and content services.

In January 2018, TouTiao made its algorithm logic public for the first time. Content is recommended to users based on three types of features: content features, which vary with the type of content; environmental features, which capture differences in users’ information preferences across scenarios; and user features, which include occupation, age, gender, interest labels, and so on. TouTiao’s algorithm combines these three aspects to make predictions, that is, to estimate whether a piece of recommended content suits a particular user in a specific scenario. It is worth noting that the inner logic of this algorithm is entirely aimed at catering to users’ needs. In addition, four typical features play an essential role in recommendation: hotness features, relevance features, contextual features, and collaborative features. Only the collaborative features analyze the similarity between different users through their behavior, achieving diversity of content through “interest search” and “generalization.” The primary purpose of these changes is to help, to some extent, solve the problem of algorithmic recommendations becoming ever narrower.
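To make the four feature types more concrete, the following Python sketch scores candidate articles as a weighted sum of a hotness, a relevance, a contextual, and a collaborative feature. It rests on loud assumptions: TouTiao has not published a formula like this, the weights in `WEIGHTS` and all the data are invented, and the collaborative feature is computed with ordinary user-based collaborative filtering (cosine similarity between users’ click histories), a standard technique swapped in for illustration.

```python
import math

# Hypothetical weights for the four feature types (not published by TouTiao).
WEIGHTS = {"hotness": 0.2, "relevance": 0.4, "context": 0.1, "collaborative": 0.3}


def cosine(a, b):
    """Cosine similarity of two click histories given as {item_id: clicks}."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def collaborative(user, item_id, clicks):
    """Similarity-weighted share of other users who clicked the item."""
    sims = {u: cosine(clicks[user], h) for u, h in clicks.items() if u != user}
    total = sum(sims.values())
    if total == 0:
        return 0.0
    return sum(s for u, s in sims.items() if item_id in clicks[u]) / total


def score(user, item, profile, scenario, clicks):
    """Weighted sum of hotness, relevance, contextual and collaborative features."""
    features = {
        "hotness": item["hotness"],  # 0..1 popularity across the platform
        "relevance": len(item["tags"] & profile["interests"]) / len(item["tags"]),
        "context": 1.0 if scenario in item["scenarios"] else 0.0,
        "collaborative": collaborative(user, item["id"], clicks),
    }
    return sum(WEIGHTS[name] * value for name, value in features.items())


# Invented data: Bob's clicks resemble Alice's; Carol's do not.
clicks = {
    "alice": {"a1": 3, "a2": 1},
    "bob": {"a1": 2, "a2": 1, "a3": 2},
    "carol": {"a4": 5},
}
items = [
    {"id": "a3", "tags": {"science"}, "hotness": 0.3, "scenarios": {"commute"}},
    {"id": "a4", "tags": {"celebrity"}, "hotness": 0.9, "scenarios": {"evening"}},
]
alice = {"interests": {"tech", "finance"}}
ranked = sorted(items, key=lambda it: score("alice", it, alice, "commute", clicks), reverse=True)
print([it["id"] for it in ranked])  # ['a3', 'a4']
```

Here article `a3` has no overlap with the hypothetical user Alice’s interest labels, yet it outranks the far hotter `a4` because Bob, whose clicks resemble Alice’s, read it. This is the sense in which a collaborative signal can push recommendations beyond a user’s existing labels.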

The filter bubble is a product not only of algorithmic technology but also of users’ own choices. For example, on the homepage of the social platform Red, when users long-press a piece of content, the options “Not interested” and “Content feedback” appear on the screen. Under “Not interested,” users can select a specific reason: “Don’t like the content” or “Don’t like the creator.” Under “Content feedback,” they can select “Ads,” “Repeated recommendations,” “Pornography and vulgarity,” or “Uncomfortable content.” However, even if people are exposed to more comprehensive information, the filter bubble of the social network will persist if they are unwilling to consider the views of the broader community and the unknown others who make up that community. To mitigate the filter bubble, the user community needs to recognize the need to break the bubble and realize that polarization is the wrong direction for online interaction.
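How users’ own feedback can narrow a feed may be sketched with a toy filter that applies the two “Not interested” reasons. This Python sketch is hypothetical: `FeedFilter`, the post fields, and the assumption that “Don’t like the content” mutes a whole topic while “Don’t like the creator” mutes an account are illustrative guesses rather than Red’s documented behavior, and the “Content feedback” reports are not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class FeedFilter:
    """Hypothetical per-user filter driven by long-press "Not interested" feedback."""

    muted_topics: set = field(default_factory=set)
    muted_creators: set = field(default_factory=set)

    def not_interested(self, post, reason):
        """Apply one of the two reasons offered under "Not interested"."""
        if reason == "Don't like the content":
            self.muted_topics.add(post["topic"])  # assumed: mutes the whole topic
        elif reason == "Don't like the creator":
            self.muted_creators.add(post["creator"])  # assumed: mutes the account
        else:
            raise ValueError(f"unknown reason: {reason!r}")

    def allows(self, post):
        """A post stays in the feed only if neither its topic nor creator is muted."""
        return post["topic"] not in self.muted_topics and post["creator"] not in self.muted_creators


posts = [
    {"id": 1, "topic": "fashion", "creator": "c1"},
    {"id": 2, "topic": "politics", "creator": "c2"},
    {"id": 3, "topic": "politics", "creator": "c3"},
    {"id": 4, "topic": "food", "creator": "c2"},
]
feed = FeedFilter()
feed.not_interested(posts[2], "Don't like the content")  # mutes "politics"
feed.not_interested(posts[3], "Don't like the creator")  # mutes "c2"
print([p["id"] for p in posts if feed.allows(p)])  # [1]
```

Two long-presses are enough to hide three of the four posts, showing how individual choices, and not only the algorithm, can shrink what a user sees.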

Conclusion

With the development of online technology, people are becoming more and more dependent on the Internet, through which they obtain information and express their opinions. Algorithms have, to some extent, allowed users to see content of interest more quickly, but they have also created filter bubbles. The Internet was once thought of as the place that brought everyone together and opened up all information to the world, but now it draws people into their own corners. Social media companies encourage users to prioritize views similar to their own in order to increase advertising revenue. However, this model of content distribution is seen as pushing polarized views into the mainstream. While users would like to see diverse content on social networks, polarized speech such as nasty comments and online violence is not what they want.

By analyzing TikTok, TouTiao, and Red, we can conclude that filter bubbles result from a combination of algorithms, users, and social structures, which means that mitigating them requires a concerted effort from all three. Therefore, the government should strengthen its management of the Internet; platforms need to improve their user insight techniques, try to recommend cross-disciplinary content, and avoid fueling polarized online debates; and users need to take the initiative to break the bubble, which will make the online community better.

References

- Andrejevic, M. (2019). Automated Culture. In Automated Media (pp. 44–72). London: Routledge.

- Bail, C. (2021). Breaking the Social Media Prism: How to Make Our Platforms Less Polarizing. Princeton: Princeton University Press.

- Kitchens, B., Johnson, S. L., & Gray, P. (2020). Understanding Echo Chambers and Filter Bubbles: The Impact of Social Media on Diversification and Partisan Shifts in News Consumption. MIS Quarterly, 44(4), 1619–. https://doi.org/10.25300/MISQ/2020/16371

- Chitra, U., & Musco, C. (2020). Analyzing the Impact of Filter Bubbles on Social Network Polarization. Proceedings of the 13th International Conference on Web Search and Data Mining, 115–123. ACM. https://doi.org/10.1145/3336191.3371825

- Flew, T. (2021). Regulating platforms. Cambridge: Polity, pp. 79-86.

- Geschke, D., Lorenz, J., & Holtz, P. (2019). The triple‐filter bubble: Using agent‐based modelling to test a meta‐theoretical framework for the emergence of filter bubbles and echo chambers. British Journal of Social Psychology, 58(1), 129–149. https://doi.org/10.1111/bjso.12286

- Haim, M., Graefe, A., & Brosius, H.-B. (2018). Burst of the Filter Bubble?: Effects of personalization on the diversity of Google News. Digital Journalism, 6(3), 330–343. https://doi.org/10.1080/21670811.2017.1338145

- https://cn.chinadaily.com.cn/a/202012/24/WS5fe42569a3101e7ce973729e.html