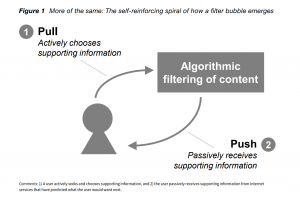

The increasing amount of information found online has led to the need to filter content. Search engines such as Google have therefore created algorithms that help users find the information they want without wading through unnecessary content. However, it has been argued that such personalization algorithms give users a narrow perspective on news and opinions, placing them in a bubble; hence the term “filter bubble”.

The self-reinforcing spiral of how a filter bubble emerges (Dahlgren 2021)

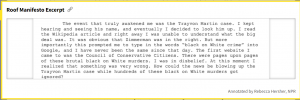

These bubbles can be a major factor in strengthening far-right extremist ideology, which has been quite common in the United States, and can further polarise opinions. This can be seen in the case of Dylann Roof, the perpetrator of the Charleston church shooting, whose radical ideas developed from a simple Google search for the words ‘black on white crime.’

Filter Bubbles: Good or Bad?

Google’s search algorithm draws on a user’s browsing history, clicked ads, and content viewed on its properties such as YouTube, and uses a feedback loop to recommend personalised content. In the case of Charleston church shooter Dylann Roof, this loop surfaced propaganda and misinformation published by a group with a history of racist messaging, which referred to black people as a retrograde species of humanity.

Babel reinforces the importance of filter bubbles by saying that

“Individuals must have access to some mechanism that sifts through the universe of information, knowledge, and cultural moves to whittle them down into manageable and usable scope.”

However, such an assumption has been challenged by numerous political figures including Obama, who stated that

Although information bubbles also exist in the offline world, the virtual context magnifies user reactions. Filter bubbles are beneficial when, for example, they narrow down searches for a university student working on assignments. On other occasions, however, such specific and tailored content narrows one’s view and deprives a person of the additional information that algorithms have tagged as unwanted.

The Google filter and confirmation bias, a tool for radicalization

The case of Dylann Roof can be analysed through the theory of confirmation bias. Because Google’s algorithm fails to protect the user from misinformation, it can be said that Roof became entrapped in the filter bubble, where his beliefs on white supremacy and the ideology of far-right extremism were strengthened through viewing more content on topics that were recommended to him through Google’s personalization algorithm.

Additionally, when Roof mentioned in his manifesto that he researched deeper, this referred to sites such as The Daily Stormer and Stormfront, which were riddled with misinformation and white supremacist ideologies.

Continuous exposure to such information leads to attachment and less willingness to let go of an idea. The theory of confirmation bias is important for understanding that many people do not want their beliefs to be contested.

“Each link, every time you click on something, it’s basically telling the algorithm that this is what you believe in” (Collins 2017).

Even when people are presented with opposing information, confirmation bias leads them to dispute it, which further strengthens their current position on an ideology or opinion. This produces a polarized perspective on the matter, which can eventually lead to extremist thoughts, as in the case of Dylann Roof.

Another example of a young person radicalized online is Caleb Cain, who, due to confirmation bias, became heavily involved in far-right extremism through YouTube. As in Dylann Roof’s case, the platform presented Cain with numerous videos that aligned with his views, which left him more vulnerable to a one-sided perspective with no further exploration of additional information.

YouTube is known as a powerhouse of political radicalisation, both because its algorithm suggests fringe content and because of its massive audience: the platform has at least 2 billion monthly users, and a 2018 Pew study found that 85% of teenagers used YouTube, making it the most used platform among them. Presented with this opportunity for radicalization, far-right extremists have been able to exploit the algorithm so that their videos appear in the recommendation sections of less extreme videos.

“The goal of the algorithm is really to keep you online the longest.”

— former YouTube engineer Guillaume Chaslot

Moreover, since the algorithms that create filter bubbles work behind the scenes, both Roof and Cain were oblivious to the fact that they were in one. Continuing to search for and take in information in this manner can increase confirmation bias over time: the content a user accesses will most likely generate even more related content in the future, further amplifying the bias.
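The self-reinforcing loop described above can be illustrated with a toy simulation. This is only a minimal sketch under stated assumptions, not a description of how Google or YouTube actually rank content: the function `simulate_filter_bubble`, the topic names, the click probabilities, and the update rule are all hypothetical. The assumption is that a recommender shows topics in proportion to a weight, and that each expected click on a topic raises that topic's weight.

```python
def simulate_filter_bubble(click_prob, rounds=50, learning_rate=1.0):
    """Toy model (hypothetical): return each topic's share of recommendations per round.

    click_prob maps a topic name to the assumed probability that the user
    clicks on that topic when it is shown.
    """
    topics = list(click_prob)
    # Every topic starts with the same weight, so the first feed is balanced.
    weights = {topic: 1.0 for topic in topics}
    history = []
    for _ in range(rounds + 1):
        total = sum(weights.values())
        shares = {topic: weights[topic] / total for topic in topics}
        history.append(shares)
        # Feedback step: the expected number of clicks on a topic
        # (share shown * click probability) is added to its weight,
        # so topics the user already favours are shown more next round.
        for topic in topics:
            weights[topic] += learning_rate * shares[topic] * click_prob[topic]
    return history


# Assumed user: slightly more likely to click on topic_a than on the others.
CLICK_PROB = {"topic_a": 0.6, "topic_b": 0.4, "topic_c": 0.4, "topic_d": 0.4}

history = simulate_filter_bubble(CLICK_PROB)
for round_no in (0, 10, 50):
    print(round_no, round(history[round_no]["topic_a"], 3))
```

In this sketch the share of `topic_a` starts at one quarter and rises every round, even though the user's preference for it never changes. The narrowing comes from the loop itself, which is the point the paragraph above makes about bias being amplified over time.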

(Echo) Chamber of Secrets and Construction of Reality

Such bias can also be amplified when users who are stuck in filter bubbles connect, through online platforms, with others who have developed the same views or opinions. When two or more agents hold similar opinions, their views are not only reinforced, but they also provide each other with new arguments that support those views. This is what is known as an echo chamber.

Echo chambers result when online users self-select the websites from which they get their news, whereas a filter bubble results when an algorithm makes those selections for the user. The two are similar in that both expose internet users to ideas, people, facts, or news that are consistent with a particular political or social ideology. Additionally, filter bubbles can create echo chambers, thereby giving individuals the opportunity to be part of a community.

Homophily theory (Bruns 2019) holds that people have an intense desire to be around those who are similar to them and who reinforce their world views. Online, people have tended to form groups based on similar opinions and ideologies, such as Google+ circles and Facebook groups. Even if members never meet each other, beliefs are still intensified, because users are driven to selectively access online content that supports their own political views.

Therefore, it can be said that online platforms allow homophilic interactions to flourish further with the help of personalisation algorithms that entrap people in filter bubbles. Additionally, the emergence online of individuals and groups who share the same ideology and beliefs can be seen as a result of algorithmic reality construction. Under this concept, beliefs that have been fed to people through personalisation algorithms have played a part in creating, or further strengthening, online communities such as far-right and left-wing extremists. The beliefs within such groups are solidified through numbers, which reinforces members’ confidence to ignore opinions from the opposite side.

In cases such as that of Dylann Roof, Google’s personalization algorithms offered an outlet for him, and for other people who were, or are, emotionally vulnerable or looking for a new sense of purpose. It is these filter bubbles and echo chambers that provide users with a sense of purpose or belonging. However, the type of content recommended through Google’s feedback loop supports the argument that this is a typical case of algorithmic reality construction, in which online platforms such as Google have further reinforced the construct of the angry white male who has status anxiety about the possibility of declining social power.

This shows that personalisation algorithms not only drive divided opinions online; their influence also extends to social and political situations offline, causing further polarization.

Online Platforms – 1, Humans – 0, Can we take back control?

Recent debates about filter bubbles have also highlighted certain ethical issues, one of them being the agency that algorithms have over human users, which reflects a technologically deterministic understanding of filter bubbles. Under the concept of technological determinism, technology plays an important role in people’s lives because it can shape society, including relationships and opinions, political or otherwise. Human agency, however, is put aside, as the consumption of online content is monitored by the powerful algorithms that run the online platform.

The quantity of information available on the internet makes filtering inevitable. However, relying on online platforms to control what a user sees, without any insight into the filtering process, decreases that user’s autonomy. The value of autonomy therefore implies that users need more control and influence over the filtering process so that they can align it with their personal preferences. The wider availability of information can only increase autonomy when proper filtering is in place. And even though users cannot filter the vast amount of content on the internet themselves, it is important that they have the autonomy to assess and influence the mechanisms that platforms use to filter it.

On that note, the Filter Bubble Transparency Act in the US was drafted in 2020 with the purpose of making it easier for users of online platforms to understand the potential manipulation that comes with hidden algorithms. The aim of the bill is primarily to give people the choice to opt out of data-driven algorithms. For example, it seeks to make large companies like Meta or Google notify users if they are being shown content based on personal information that they did not explicitly provide. Such information could include search history or a user’s location. The option to turn off this personalization would also be provided. The only data that the platform could then use is data that the user explicitly provides, such as search terms and saved preferences.

Such bills show progress but still present a dilemma: allowing users complete autonomy over search engines could hinder intelligence efforts on cyber-security agendas, such as intercepting terrorist attacks by the far-right or by international groups such as Al-Qaeda or ISIS. In any case, it is important to work toward a policy that strikes a balance between giving autonomy to users of online platforms and holding online platforms and government agencies strictly accountable for any misconduct in surveilling users and obtaining their data.

So what do we make of these bubbles?

Overall, it can be said that the personalization algorithms used by platforms such as Google can trap people in a bubble where their online content feed is personalized based on what the algorithms think they want to see. Such mechanisms have the power to influence what people perceive as right and to lead them to reject opinions from outside their bubble. In doing so, they socially construct the desires and interests of people, especially those who are young and in a vulnerable position. This leads to polarized opinions within society, online and offline, and fuels more radical or extremist thoughts, as seen in the case of Dylann Roof.