Introduction

In the era of Web 2.0, the Internet takes up a large share of users’ daily lives, and social platforms have enormous reach and influence. Platforms of many types and sizes each have their own audiences, and users can express their opinions freely on them. But what users post can also be politically sensitive, racist, violent, hateful, or graphically disturbing. Such harmful content on online social platforms has become a problem for many users. For example, parents are concerned about their children’s exposure to pornography, gore, and violence, and Aboriginal people are concerned about encountering racist content. Content moderation has developed in response to these concerns. Content moderation means that the platform screens and monitors the content posted by its users according to specific guidelines, models, ethics, and laws, so as to ensure that posted content meets the platform’s requirements, that is, that it is legal and appropriate. Content moderation is now highly valued by social media platforms, economic exchange platforms, emotional platforms, community forums, and other similar online platforms. However, because of technical limitations and the variety of ways people express themselves, some harmful content evades moderation, for example by being framed as humor, and is published on the platform, causing trouble for other users.

This blog introduces the content moderation work of Facebook and TikTok, two of the world’s best-known and most user-friendly social platforms, and critically analyzes and interprets it. It then looks at how governments, through new laws, are pushing platforms to serve the public in a more standardized, reasonable, and safe manner.

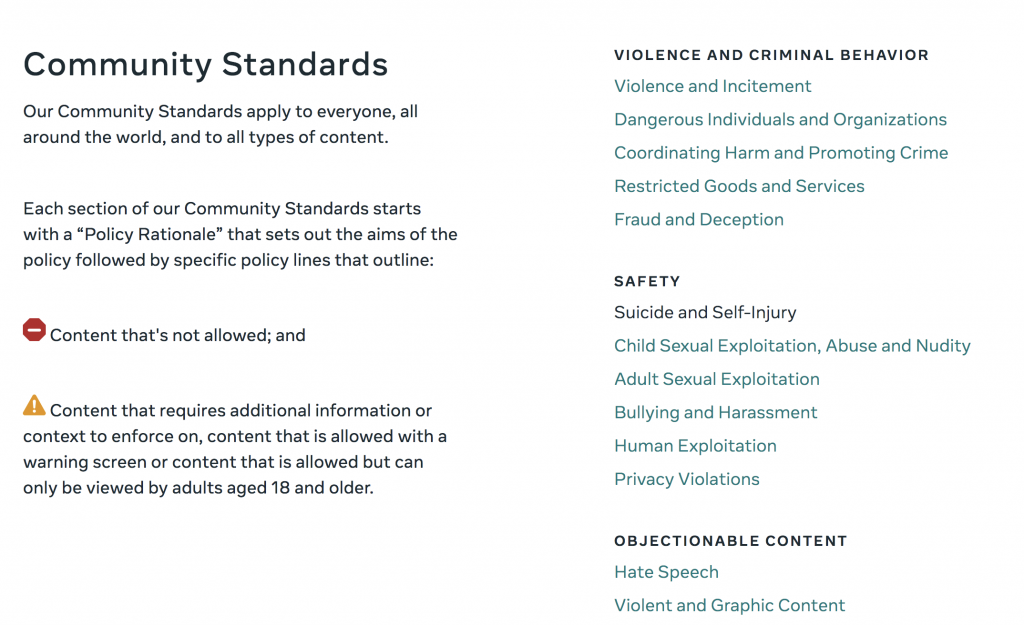

Facebook is the world’s best-known social media giant, with a large user base and enormous influence and reach in society. The platform states on its website that the company attaches great importance to giving users a smooth and secure communication environment and to preventing inappropriate content from appearing on the platform. Facebook also publishes Community Standards that define what kinds of content are allowed and prohibited and that users can consult for reference. Prohibited categories include violence and criminal behavior, dangerous individuals and organizations, coordinating and facilitating crime, restricted goods and services, and fraud and deception. The platform has the right to delete or block such content after review.

In September 2021, Frances Haugen, a former employee of the company, accused Facebook of under-resourcing its security teams. “Facebook has been reluctant to accept even the slightest sacrifice for security,” she noted. She also said that Instagram is more dangerous than other forms of social media. In her testimony before Congress, Haugen (2021) said that Facebook cannot even adequately monitor the different languages used on the platform, including British English: the company’s security system is built around American English, so there is a risk that it will not work properly in the United Kingdom. She also argued that Facebook’s ad pricing fuels the growth of hate on the platform, because it is much cheaper to run angry, hateful, and divisive ads than compassionate and friendly ones (Haugen, 2021). Haugen’s allegations suggest that the company is aware of the harm to its users and of the use of its platform by individuals or groups to incite violence around the world, but does not use its security system to moderate content and protect users effectively. Matamoros-Fernández (2017) also notes that although the platform’s technical facilities and policies increasingly respond to the need to manage public opinion, the US Communications Decency Act protects the platform from responsibility for content posted by its users. Moreover, Facebook’s rules on hate speech are unclear and vaguely defined, which makes them difficult to enforce. The result is lax and irresponsible moderation that harms some users.

Back in 2012, Facebook was also heavily criticized for refusing to ban racist pages targeting Aboriginal Australians. Facebook initially determined that the content did not violate its code of conduct and terms of service, but the Australian Communications and Media Authority stepped in and the pages were blocked in Australia (Oboler, 2013). The offensive content could, however, still be found on Facebook in other countries. Both of the Facebook cases described above resulted from the platform’s ineffective review of content and its failure to strictly follow its own publicly stated rules. This shows that the company does not adequately review and supervise the content its users post.

There are also reports of Facebook moderating content too aggressively. West (2017) describes a large-scale protest against Facebook that began in 2007. A mother named Kelli Roman had posted a set of photos on Facebook of herself breastfeeding her newborn daughter, and the platform soon deleted them. The platform’s spokesman Barry Schnitt (2008) explained that the photos showed a fully exposed breast, with the nipple and areola visible, which violated the platform’s terms on pornographic and obscene content. But Schnitt (2008) also said that the site would not remove most breastfeeding photos, because they follow the platform’s terms. The two statements are contradictory. Kelli Roman believed that the breastfeeding photos she had posted were not pornographic or obscene, and she created a discussion group to protest and petition Facebook, which attracted many mothers with the same experience. At its peak, the group had more than 80,000 users and more than 10,000 comments. Kelli Roman’s experience shows how ambiguous and contradictory Facebook’s content moderation can be.

TikTok

TikTok is a short-video platform that has emerged in recent years. Users can create and publish many kinds of videos and photos on the platform, and most videos are under one minute long. Compared with other mainstream social media, TikTok’s user base is relatively young: more than 60% of TikTok users were born between 1997 and 2012 (Zeng & Kaye, 2022). As a platform for videos and photos, TikTok is also more exposed to the spread of illegal content, because it is harder for an automated system to determine whether video content is illegal than it is for text. In India, Indonesia, and other countries, TikTok has been temporarily or permanently banned over this issue.

TikTok puts considerable effort into content moderation. It uses recommendation algorithms that give each user highly personalized content based on individual interests and usage data, which also reduces how often offending content appears. For example, if I watch a basketball video and comment on it or like it, the system will show me more basketball-related videos. Because content is selected by estimating the user’s preferences, illegal content is played far less often and the review of content is strengthened. The platform also engages with social movements by mobilizing its creator community and users. For example, in 2021 the Chinese version of TikTok launched a platform-wide campaign encouraging users and creators to use the ‘positive energy’ hashtag and to produce more appropriate, positive, and good content. TikTok also lets users monitor the content posted by others by offering features such as ‘like’, ‘dislike’, ‘approve’, and ‘disapprove’ (Zeng & Kaye, 2022). If a piece of content receives complaints from multiple people, the platform deals with it by deleting the content or blocking the publisher.
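The complaint-driven step described above can be sketched roughly as follows. This is only an illustration of the general idea, not TikTok’s actual system: the class name `ReportTracker`, the method names, and the threshold of three distinct reporters are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical threshold: how many distinct users must complain before a
# post is sent for review. The real value (if any) is not public; 3 is an
# assumption for illustration only.
REPORT_THRESHOLD = 3


class ReportTracker:
    """Counts user complaints per post and flags posts that need review."""

    def __init__(self, threshold: int = REPORT_THRESHOLD) -> None:
        self.threshold = threshold
        # Maps post_id -> set of user_ids who reported it.
        # Using a set means repeat reports from one user count only once.
        self._reporters = defaultdict(set)

    def report(self, post_id: str, user_id: str) -> bool:
        """Record a complaint; return True once the post should be reviewed."""
        self._reporters[post_id].add(user_id)
        return self.needs_review(post_id)

    def needs_review(self, post_id: str) -> bool:
        """True if enough distinct users have complained about the post."""
        return len(self._reporters.get(post_id, ())) >= self.threshold


if __name__ == "__main__":
    tracker = ReportTracker()
    tracker.report("video-1", "alice")
    tracker.report("video-1", "alice")  # duplicate report, not counted again
    tracker.report("video-1", "bob")
    if tracker.report("video-1", "carol"):
        print("video-1 queued for review")
```

Counting distinct reporters rather than raw reports is a design choice in this sketch: it keeps one user from forcing a review alone. What happens after a post is flagged (deletion, blocking the publisher, or human review) is left out, since the source does not describe it in detail.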

How to improve the efficiency of platform content moderation?

1. The Australian Government’s Practice

The Australian government enacted a new law in 2021, the Online Safety Act 2021, which makes Australia’s online safety laws broader and stronger and gives eSafety greater powers to enforce them. Australia’s eSafety Commissioner has become the world’s first online safety regulator (Inman Grant, 2021). The Act was introduced to ensure that all Australians are safer when using online services. It requires service providers to proactively protect people from online abuse and expands protections for children and minors on social platforms, with the aim of reducing bullying, graphic violence, and racism. The Act also calls for new rules requiring online platform providers to monitor and remove illegal content such as violence involving minors, child abuse, and terrorism. eSafety can require providers to block and remove content depicting violent or terrorist acts. Online platform providers can also be asked to explain and demonstrate how they meet the Basic Online Safety Expectations (OSA, 2021).

2. EU-guided content moderation mechanism

Bellanova and Goede (2021) report that in November 2020, after visiting the site of the terrorist attack in Vienna, European Council President Charles Michel stressed the need for laws and tools to delete terrorist content as soon as it appears on Internet platforms. Many social platform companies already regulate and remove harmful and illegal content and material posted by users. In Europe, technical and legal mechanisms work together to regulate and review content. The technical mechanism is driven by users in an interactive network through shared alerts and digital consultations, with human-computer interaction at its core. Put simply, users can complain about and block content on the platform, and the platform system then reviews that content. The legal mechanism consists of laws through which the state requires and guides platforms to review or delete online content. The platforms’ ‘community guidelines’ are also drawn up under the guidance of the law. Under the legal mechanism, the handover mechanism becomes very convenient: law enforcement agencies report illegal or suspicious content to the platform and then request that the platform identify and delete it. With government involvement, the platforms’ content review has improved greatly, and users have gained greater security and convenience.

Conclusion

With the rapid development of the Internet and the growing number of users, people increasingly rely on the Internet to obtain and publish information. But what they publish may fail to meet legal requirements or may cross basic moral lines, which is why it matters whether platforms review content in a reasonable and thorough way. The examples discussed in this blog show that the major platforms’ content moderation is currently inadequate. Governments and major platform companies should work together to build a more complete content moderation and supervision system and a better Internet environment, in order to prevent criminals from operating through social platforms, protect the safety of people’s lives and property, and give Internet users a good online experience. Platforms also need to strengthen their understanding of certain concepts, such as what counts as pornography and what counts as racism.

Reference list

1. Matamoros-Fernández, A. (2017). Platformed racism: the mediation and circulation of an Australian race-based controversy on Twitter, Facebook and YouTube. Information, Communication & Society, 20(6), 930-946. doi: 10.1080/1369118x.2017.1293130

2. Inman Grant, J. (2021). Australia’s eSafety Commissioner targets abuse online as Covid-19 supercharges cyberbullying. Blog.

3. Online Safety Act 2021.

4. West, S. (2017). Raging Against the Machine: Network Gatekeeping and Collective Action on Social Media Platforms. Retrieved 8 April 2022, from https://www.cogitatiopress.com/mediaandcommunication/article/view/989/989

5. Bellanova, R., & Goede, M. (2021). Co‐Producing Security: Platform Content Moderation and European Security Integration. JCMS: Journal of Common Market Studies. doi: 10.1111/jcms.13306

6. Zeng, J., & Kaye, D. (2022). From content moderation to visibility moderation: A case study of platform governance on TikTok.