Introduction

In recent years, the influence of the Internet has been growing. It has become an important means of disseminating information and has challenged traditional models of government control over the media. Around 3 billion people worldwide use the Internet, double the number of users five years earlier (Aranda Serna & Belda Iniesta, 2018).

Gaspar and Dieckmann (2021) argue that the harm caused by unregulated online freedom during the COVID-19 pandemic is more serious than in the past. Growing online hate and fake news are forcing social media platforms to abandon their laissez-faire approach and take responsibility for online content.

Today, there is still debate over whether platforms have a “duty of care” towards their users.

- What is the “duty of care”?

Sauter (2019) argues that the duty of care is not only a negative duty to refrain from infringing but also a duty to take positive steps to prevent such an infringement from occurring.

To address the risks posed by online harms, the Online Harms White Paper recommends imposing a new statutory duty of care on companies that facilitate the sharing or discovery of user-generated content (Woods, 2019).

The UK Government’s Draft Online Safety Bill recognises that platforms play a role in how online hazards materialise. It also recognises that harmful behaviour on the Internet is broader than criminal behaviour, imposing broadly applicable duties that cover not only illegal content but also harmful content. It requires platforms to conduct risk assessments to determine their role in spreading harmful information.

Social media’s stewardship of Trump’s misleading posts

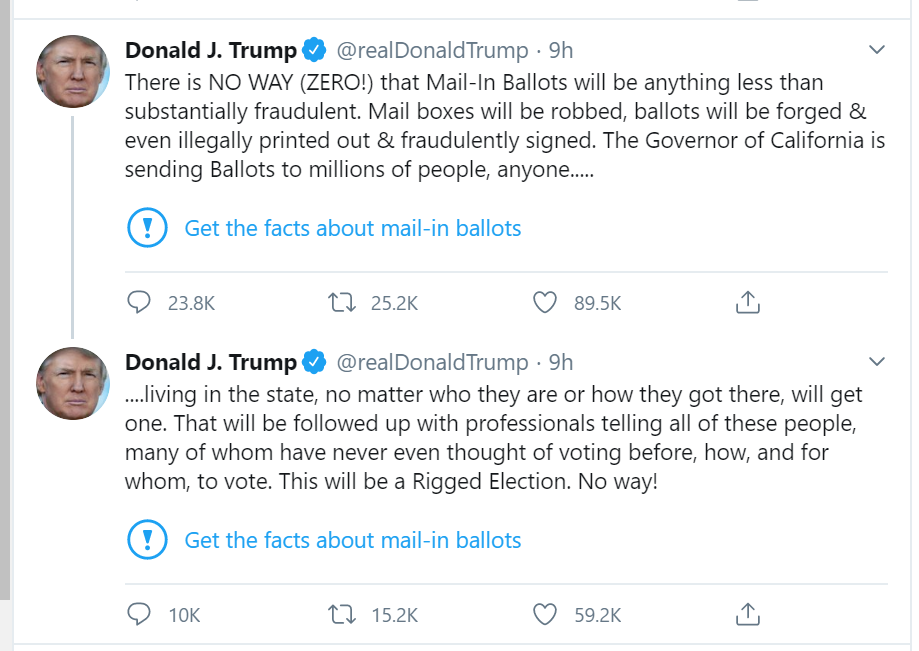

According to Reuters, Facebook and Twitter put warning labels on multiple posts by President Donald Trump. The labels were intended to reduce incitement and the other negative effects of this false information on users. The posts falsely claimed that Trump had won the U.S. presidential election and made baseless allegations about the legality of the vote count.

However, the two platforms handled Trump’s posts differently. Twitter labeled tweets as “misleading” and restricted how they could be shared and viewed. Facebook labeled more posts but did not describe the information as misleading or restrict how it could be shared or viewed.

Figure 2: Screenshot of Trump’s posts

After the attack on the U.S. Capitol, Trump was permanently banned from Twitter and suspended from Facebook “indefinitely.” Many other platforms also imposed restrictions on Trump’s online speech: some banned his account entirely, while others used different methods to stop extremist Trump supporters from spreading fake news about voter fraud and hate speech, and from planning further riots.

Freedom of opinion vs duty of care

According to Reuters, German Chancellor Angela Merkel’s spokesperson said Merkel believed the right to freedom of opinion is of fundamental importance and that any restrictions on freedom of speech should be decided by the government, not private companies.

The social media platforms’ bans on Trump’s account also drew the ire of other European politicians, including U.K. Health Secretary Matt Hancock and EU Commissioner for Internal Market Thierry Breton. They said the bans raised key questions about the power of tech companies and the need for regulation, arguing that social media platforms have too much power and that there are serious weaknesses in the way society is organized in the digital space.

From the platform’s perspective, Trump, as an opinion leader, has enormous influence, and his baseless posts can sway other users’ judgment and incite them to extreme behavior. Moreover, even after being warned, Trump continued to publish posts that violated the Glorification of Violence Policy. Twitter said the labels fulfilled its “duty of care” to users by providing supplementary information and helping users make rational judgments about these posts. The purpose of banning accounts is to protect the online safety of other users and the interests of the majority.

Several public figures had been calling for Trump to be banned from Twitter for years. Former First Lady Michelle Obama said Silicon Valley companies should stop enabling this monstrous behavior and go even further than they already had by permanently banning this man from their platforms and putting in place policies to prevent their technology from being used by the nation’s leaders to fuel insurrection.

Should platforms fulfill a “duty of care” to their users?

- Platforms: no “duty of care”, and a preference for less management

Platforms themselves prefer less management, not only because less governance means lower governance costs, but also because inflammatory fake news and hate speech can spark more discussion, increase engagement on the platform, and bring the platform more benefits. As a result, many Internet platforms describe themselves as tech companies, arguing that they merely provide a space for users to express their opinions and communicate with each other, rather than being the authors of hate speech (Flew, 2021). On this view, platforms are not responsible for users’ inappropriate speech.

- Scholars: platforms have a “duty of care”, and the advantages of management outweigh the disadvantages

However, some scholars believe that reasonable management brings the platform more advantages than disadvantages, because online harm affects not only individual users but also the platform itself. Strict management can make the platform’s online environment more harmonious, improve the user experience, and attract more users.

At the same time, some scholars believe that platforms should provide the necessary management of their online spaces. They argue that owners of online spaces should owe the users of those spaces the same duty of care as owners of physical spaces, ensuring that users are safe from harm in the spaces they operate and control. Other scholars, however, argue that online space owners have entirely different responsibilities from physical space owners, because online space owners are responsible not for the characteristics of the space but for what others do in it (Price, 2021).

The Reason and Purpose of Platform Management

The aim of this series of management measures against Trump was to prevent incitement to violence and to stop the spread of false information.

- The difference between new media and traditional media

Traditional media tend to be more rigorous than new media. They bear more social responsibility and are regarded as more authoritative sources of information. Reporters and editors are held accountable for inaccurate reporting, and the threshold for becoming a reporter or editor is higher than that for becoming an Internet user. The reporting process of traditional media is also more demanding, requiring thorough investigation and strict review before publication.

New media are characterized by anonymity. According to Goffman’s (1973) theory of human interaction and behaviour, people play the roles that society expects of them in real life. Online, however, they are more likely to express authentic, radical views because their online speech does not affect their real lives. At the same time, much new media information is fragmented, such as a few sentences on Twitter or a few seconds of short video on TikTok. From such fragments it is difficult for users to see the whole picture, and they are easily misled by biased and false information.

Therefore, new media are more likely than traditional media to produce fake news and hate speech, and they require stricter management.

Figure 6: Traditional Media vs. New Media

The role of existing platform management

- Labels do little

In fact, Facebook’s warning labels on Trump’s baseless remarks did not work as expected: they did little to reduce the spread of false content on the platform.

Facebook said that putting warning labels on Trump’s posts reduced their reshares by only about 8%. Because Trump’s posts reach such a large audience, an 8% reduction does not change the order of magnitude of their spread. Many users also quickly learned to ignore the labels.

- After being banned, Trump created his own social media platform

According to ABC News, a year after Twitter banned his account, Trump launched his own social media platform, Truth Social.

Trump and conservative media argue that a social media industry dominated by a handful of tech giants harms users’ freedom of speech. With Truth Social, Trump hopes to give people back their voices and to win over the millions of people who followed him on Twitter.

Figure 7: Trump creates social software Truth Social

How to fulfill the “duty of care”

- Platforms need to find a balance between protecting freedom of speech and maintaining platform order

If platform management is too strict, it will infringe on users’ freedom of speech, leaving only one voice on the platform. If it is too loose, it will be ineffective, and cyber violence and fake news on the platform will continue to increase, harming the user experience. Svensson (2017) argues that freedom of speech must respect privacy, personal integrity, and human dignity, and should not be used as an excuse for offense, hate, threats, harassment, and stalking.

- Platforms need to ensure fairness in management standards

The Internet itself is not biased, but the people who create platforms are.

Today, politicians have recognized the importance of the Internet. Many present their positions and share their views on current political events on social media platforms such as Twitter or Facebook (Busch et al., 2017).

Therefore, social media platforms are easily influenced by political, economic, and other factors, and they may show a certain bias when setting their content review standards. To ensure fairness, platforms should base their standards on legal and moral consensus and accept oversight from users.

Conclusion

The platforms’ handling of Trump’s posts revealed their management standards. The incident also sparked discussion about how to protect free speech on social media platforms dominated by tech giants.

Do platforms have a “duty of care” to their users? What should that duty involve? What management standards can maintain the online environment effectively and fairly? These questions merit further discussion.

In my opinion, platforms have a “duty of care” to their users, and they need to take measures suited to their own characteristics to control online hate speech and fake news. However, when formulating management rules, they must strike a balance between platform management and freedom of speech. To prevent platforms from abusing their power, independent international organizations with no vested interest should be allowed to set unified management standards.

Because the Internet changes so rapidly, legislation often lags behind online problems. But that does not mean current legislation is useless. It is necessary to build a new legal system based on the characteristics of the Internet and of freedom of speech (Aranda Serna & Belda Iniesta, 2018). Such a legal system would allow freedom of speech on the Internet to be incorporated and regulated, and would provide solutions to the conflicts that may arise.

References

Anadolu Agency, Getty Images. (n.d.). German Chancellor Angela Merkel wears a protective face mask as she leaves after speaking to the media for her annual summer press conference during the coronavirus pandemic on August 28, 2020 in Berlin, Germany. https://image.cnbcfm.com/api/v1/image/106723069-1601454131455-gettyimages-1228236457-aa_28082020_147369.jpeg?v=1601454167&w=740&h=416&ffmt=webp

Aranda Serna, F. J., & Belda Iniesta, J. (2018). The delimitation of freedom of speech on the Internet: The confrontation of rights and digital censorship. ADCAIJ: Advances in Distributed Computing and Artificial Intelligence Journal, 7(1), 5–12. https://doi.org/10.14201/adcaij201871512

Bullingham, L., & Vasconcelos, A. C. (2013). ‘The presentation of self in the online world’: Goffman and the study of online identities. Journal of Information Science, 39(1), 101–112. https://doi.org/10.1177/0165551512470051

Busch, A., Theiner, P., & Breindl, Y. (2017). Internet censorship in liberal democracies: Learning from autocracies? In Managing Democracy in the Digital Age (pp. 11–28). Springer International Publishing. https://doi.org/10.1007/978-3-319-61708-4_2

Dwoskin, E. (2020, May 29). Twitter’s decision to label Trump’s tweets was two years in the making. The Washington Post. https://www.washingtonpost.com/technology/2020/05/29/inside-twitter-trump-label/

Flew, T. (2021). Regulating platforms (Ch. 2). Polity.

Gaspar, C., & Dieckmann, A. (2021). Young but not Naive: Leaders of Tomorrow Expect Limits to Digital Freedom to Preserve Freedom. NIM Marketing Intelligence Review, 13(1), 52–57. https://doi.org/10.2478/nimmir-2021-0009

Goffman, E. (1973). The presentation of self in everyday life. Overlook Press.

Goffman, E. (2003). On face-work: An analysis of ritual elements in social interaction. Reflections: The SoL Journal, 4(3), 7–13. https://doi.org/10.1162/15241730360580159

INFORRM. (n.d.). Duty of Care. https://i0.wp.com/inforrm.org/wp-content/uploads/2019/03/dutyofcare.jpg?ssl=1

Jakub Porzycki, NurPhoto, Getty Images. (n.d.). Trump is banned. https://i.pcmag.com/imagery/articles/03k7RjT28lJgYttZoGxYYuJ-1.fit_lim.size_1600x900.v1639329566.jpg

Jiupai News. (n.d.). Trump creates social software Truth Social. https://n.sinaimg.cn/sinakd20112/138/w600h338/20220222/158d-d4f54f06ef48d85a138efd19d8c74cfc.jpg

Kaspersky. (n.d.). Fake news. https://www.kaspersky.com.cn/content/zh-cn/images/repository/isc/2021/how-to-identify-fake-news-1.jpg

Octagon media. (n.d.). Traditional Media vs. New Media. https://static.wixstatic.com/media/dfa148_1cb1925f7d4444d08102bb4902ed1d7e~mv2.png/v1/fill/w_740,h_378,al_c,q_90/dfa148_1cb1925f7d4444d08102bb4902ed1d7e~mv2.webp

Price, L. (2021). Platform responsibility for online harms: Towards a duty of care for online hazards. Journal of Media Law, 13(2), 238–261. https://doi.org/10.1080/17577632.2021.2022331

Sauter, W. (2019). A duty of care to prevent online exploitation of consumers? Digital dominance and special responsibility in EU competition law. Journal of Antitrust Enforcement, 8(3), 649–649. https://doi.org/10.1093/jaenfo/jnz035

Schwanholz, J., Graham, T., & Stoll, P.-T. (Eds.). (2017). Managing Democracy in the Digital Age: Internet Regulation, Social Media Use, and Online Civic Engagement. Springer International Publishing AG. https://doi.org/10.1007/978-3-319-61708-4

Scott, S. V., & Orlikowski, W. J. (2014). Entanglements in practice: performing anonymity through social media. MIS Quarterly, 38(3), 873–893. https://doi.org/10.25300/misq/2014/38.3.11

Svensson, G. (2017). Social media as civic space for media criticism and journalism hate. In Managing Democracy in the Digital Age (pp. 201–221). Springer International Publishing. https://doi.org/10.1007/978-3-319-61708-4_11

Tambini, D. (2019). The differentiated duty of care: A response to the Online Harms White Paper. Journal of Media Law, 11(1), 28–40. https://doi.org/10.1080/17577632.2019.1666488

Todd Spangler. (n.d.). Screenshot of Trump’s posts. https://variety.com/wp-content/uploads/2020/05/twitter-trump-false-claim-mail-in-ballot.png

Washington Post illustration; Jabin Botsford for The Washington Post, AP, Getty and iStock. (n.d.). Illustration for Tech Social Ban. https://www.washingtonpost.com/wp-apps/imrs.php?src=https://arc-anglerfish-washpost-prod-washpost.s3.amazonaws.com/public/IZ3BVIC2KZFBNITQVDPEBSHBOA.jpg&w=691

Woods, L. (2019). The duty of care in the Online Harms White Paper. Journal of Media Law, 11(1), 6–17. https://doi.org/10.1080/17577632.2019.1668605