Introduction

With the development of communication technology, the internet is increasingly becoming an important channel through which people obtain information. Getting news through news applications and social media platforms has become the norm in people’s lives. Compared with traditional mass media such as TV and newspapers, these applications and platforms provide personalized content that better meets users’ individual needs. What supports this service is the algorithm behind the precise push mechanism. An algorithm is a set of rules and mechanisms built on computation, information analysis and reckoning (Flew, 2021). Using collected user data such as browsing records and likes, the algorithm calculates user preferences and formulates personalized push content to meet user needs. This process repeats in a cycle, continuously refining the personalized push mechanism. However, personalized content customization is not without risk: users may fall into filter bubbles while they enjoy the convenience of the push mechanism. According to Just and Latzer (2017), certain personalization algorithms may lead to the creation of filter bubbles. In this age of personalization algorithms, filter bubbles and the problems they can cause need to be taken seriously.

Hence, this blog focuses on the filter bubble as a distinctive network phenomenon, analyzes the opinion polarization it brings about, and seeks possible solutions. The author will start with a brief explanation of the filter bubble and then turn to a specific case: the mass confrontation surrounding talk show host Yang Li on the Chinese social media platform Weibo. Finally, drawing on how Facebook and Allsides deal with filter bubbles, the author will suggest a mechanism for preventing filter bubbles and polarization that involves media companies and third-party organizations.

Filter Bubbles and Opinion Polarization

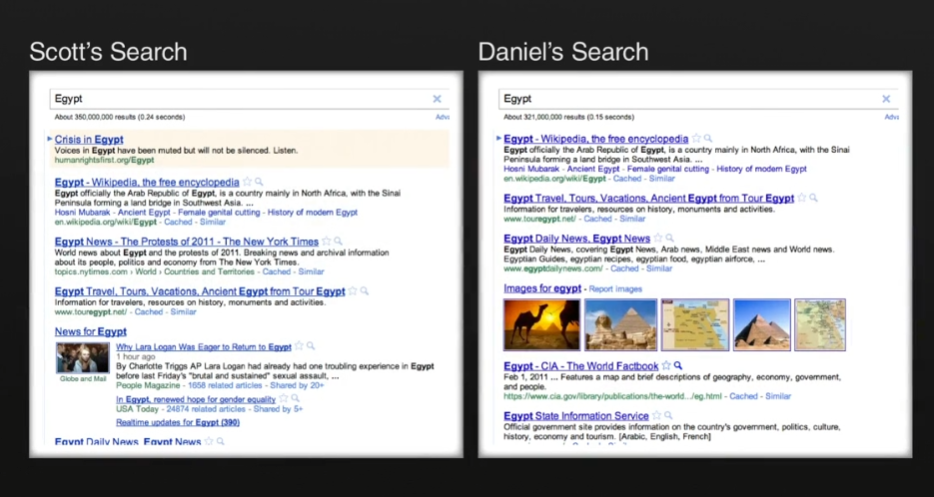

The concept of the filter bubble was first proposed by Pariser, who argued that personalized content push limits the diversity of media content people are exposed to and traps them in their own bubbles. This has a negative impact on individuals and society and is not conducive to the development of democracy or the health of the public sphere (Pariser, 2011). The filter bubble can therefore be considered a self-circulating process. In this process, personalized algorithms infer user preferences by collecting and analyzing user data and push content of interest to users according to those preferences; dissenting information is blocked, and users receive only homogeneous, repetitive content (Prakash, 2016).

However, academia has not reached a unified conclusion on the existence and impact of filter bubbles. There is an ongoing debate about whether the filter bubble really limits the diversity of information. Some scholars claim that social media and search engines increase the likelihood that users will discover information that differs from their political views (Flaxman, Goel & Rao, 2016). Other academics, however, argue that in online political spaces users rarely have the opportunity to receive information that differs from their political views, while they have a high chance of encountering ideas consistent with their own opinions (Wojcieszak & Mutz, 2009). In general, although it is not yet a clearly defined concept, the filter bubble can indeed be used to describe specific phenomena that exist on the internet.

While the filter bubble controversy persists, the existence of polarization is unambiguous (Andrejevic, 2019). The polarization of opinion is widespread in cyberspace and cannot be ignored. Opinion polarization occurs when differences of opinion increase (Dandekar, Goel & Lee, 2013). Personalized algorithms add fuel to the flames in this process. According to Kitchens, Johnson and Gray (2020), the filter bubble not only limits users’ sources of information, resulting in a lack of diversity in the information they receive, but also leads to ideological fragmentation and polarization of opinion. Though not the sole cause of opinion polarization, the filter bubble is closely linked to this phenomenon.

Polarization of Feminism on Weibo

The case of feminist Yang Li’s endorsement of Mercedes-Benz on Weibo illustrates how a filter bubble triggered by personalization algorithms affects users’ attitudes, ultimately leading to opinion polarization. Yang Li, a talk show star known on the Chinese internet for her outspoken feminist rhetoric and pointed taunts against patriarchy, has more than two million followers on her Weibo account and is undoubtedly an internet celebrity. On October 14, 2021, the official Weibo account of Mercedes-Benz released a video featuring Yang Li as a spokesperson, which caused great controversy. The comments section of the video was sharply polarized. A large number of users criticized Yang Li and said that they would no longer buy Mercedes-Benz products, and some even insulted her. At the same time, there were also many messages supporting Yang Li, and the two sides fell into a war of words (Lu, 2021).

It is certainly an example of polarized views on feminism. Yang Li is sought after by her fans and opposed and insulted by a large number of users because she is regarded as a representative of feminism. The reasons behind the polarization caused by this video are therefore very complex, involving the increasingly acute confrontation between men and women in China. Nevertheless, Weibo’s recommendation mechanism and the filter bubbles it triggers also played an important role. According to one study, Weibo exhibits the typical filter bubble phenomenon: the filtering performed by Weibo’s algorithm narrows users’ range of interests, and users receive fewer messages that contradict their opinions (Li, Mithas, Zhang & Tam, 2019).

Seen in this light, it is not difficult to understand why Weibo users’ opinions on Yang Li’s endorsement of Mercedes-Benz became polarized. Weibo’s personalization algorithm filters content according to users’ preferences and selectively pushes it to them. Those who supported Yang Li had feminist leanings, so the content they browsed every day was biased towards feminism and may have included content that flattered Yang Li, while information unfavorable to feminism was blocked by the algorithm. Correspondingly, those who opposed Yang Li may have had traditionally male-oriented browsing preferences. The messages pushed to them were unlikely to contain positive information about feminism and may even have included negative messages about extreme feminism, for instance Yang Li’s snarky mockery of traditional patriarchy and men. Weibo’s algorithm keeps cycling through the same steps (push, collect data, analyze data, push again) and refines the personalized push mechanism for each user. During this process, differing opinions were excluded and the same voices were repeated constantly. Whether they were supporters or opponents of Yang Li, users rarely had the opportunity to come into contact with opinions different from their own. Eventually, two groups with very different ideas appeared, and polarization emerged.
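The push, collect, analyze, push cycle described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not Weibo’s actual algorithm: the catalog, the topic labels `pro`, `anti` and `neutral`, the function names, and the rule that a user clicks only on content matching their own leaning are all hypothetical.

```python
from collections import Counter

# Hypothetical catalog: nine posts, three per stance, interleaved.
CATALOG = [
    {"id": i, "topic": topic}
    for i, topic in enumerate(["pro", "anti", "neutral"] * 3)
]


def recommend(catalog, clicks, k=3):
    """Push step: rank posts by how often the user clicked their topic."""
    # sorted() is stable, so posts with equal scores keep catalog order.
    return sorted(catalog, key=lambda post: -clicks[post["topic"]])[:k]


def simulate(leaning, rounds=3, k=3):
    """Run the cycle and return the topics shown in each round's feed."""
    clicks = Counter()  # collected user data (starts empty)
    topics_per_round = []
    for _ in range(rounds):
        feed = recommend(CATALOG, clicks, k)
        topics_per_round.append(sorted({post["topic"] for post in feed}))
        # Assumption: the user clicks only posts that match their leaning.
        for post in feed:
            if post["topic"] == leaning:
                clicks[post["topic"]] += 1
    return topics_per_round


print(simulate("pro"))
print(simulate("anti"))
```

Under these assumptions, both simulated users start with a feed that covers all three stances and, after a single round of clicks, see only posts that match their own leaning. The two feeds no longer overlap, which is the narrowing the paragraph describes.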

The above case illustrates how the personalization algorithms of social media platforms can create filter bubbles that ultimately lead to the polarization of users’ opinions. Personalized algorithms are not wholly harmful. In the current information explosion, they are considered an effective way to keep users from being overwhelmed by information (Yan, Zeng & Zhang, 2021). However, the overuse of recommendation algorithms may narrow the range of information users receive and have a harmful social impact. As the extreme opposition formed around Yang Li shows, such polarization undermines the neutral atmosphere that cyberspace should maintain. While the filter bubbles generated by personalized algorithms may not be the only reason for such outcomes, it is undeniable that filter bubbles contribute to the polarization of cyberspace. Therefore, measures must be taken to reduce the generation of filter bubbles and thereby mitigate polarization.

Burst Filter Bubbles

With the popularity of personalization algorithms, how to avoid getting trapped in filter bubbles is a question worthy of study. Some focus on users themselves: Prakash (2016) suggests that the public can clear their browsing history regularly to prevent personalization algorithms from inferring user preferences. Although individuals can indeed avoid falling into the filter bubble this way, it obviously makes using the internet less convenient. As the developers and providers of personalized algorithms, media companies and social media platforms, rather than users, should take on the responsibility of reducing filter bubbles. Fortunately, there are pioneers who are already addressing this algorithmic problem. The author will present one specific case to illustrate how social media platforms use technology to avoid filter bubbles.

As one of the most famous social media platforms in the world, Facebook claims that it has been working to connect people widely. However, during the 2016 US presidential election, Facebook was criticized for its filter-bubble-like push mechanism, whose customized feeds further divided the conservative and liberal camps. Facebook users became polarized over the election, with Trump supporters and opponents in sharp confrontation (Solon, 2016). Even before the election, Facebook’s recommendation mechanism was seen as carrying the danger of creating a filter bubble. One study used data on more than 10 million Facebook users to investigate whether filter bubbles existed, and it found that Facebook’s personalization algorithms narrowed the range of information users could reach (Bakshy, Messing & Adamic, 2015).

In response to the filter bubble, Facebook took two major steps in early 2017. First, it eliminated personalization for trending topics, so that all users see the same trending topics list. Facebook believes this will prevent users from missing important information that would not appear on their recommended list, ensuring that users’ access to information is not limited (Newton, 2017). In addition, Facebook improved its mechanism for related articles. The improved push mechanism no longer repeatedly pushes articles with similar opinions, but adds more articles with different viewpoints so that users can understand issues from different perspectives (Su, 2017).
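The idea of adding articles with different viewpoints to a related-articles list can be sketched as a simple re-ranking step. The cited sources do not describe Facebook’s implementation, so this is only an illustration under stated assumptions: the `viewpoint` and `relevance` fields, the function name `diversify`, and the round-robin strategy are all hypothetical.

```python
from collections import defaultdict
from itertools import zip_longest


def diversify(articles, k=3):
    """Pick up to k articles by cycling through viewpoints.

    Within each viewpoint the most relevant article comes first, so the
    result trades a little relevance for a wider spread of perspectives.
    """
    by_viewpoint = defaultdict(list)
    for article in sorted(articles, key=lambda a: -a["relevance"]):
        by_viewpoint[article["viewpoint"]].append(article)

    picked = []
    # Each tier holds the next-best article from every viewpoint.
    for tier in zip_longest(*by_viewpoint.values()):
        for article in tier:
            if article is not None and len(picked) < k:
                picked.append(article)
    return picked


# Hypothetical candidate pool for one news event.
candidates = [
    {"title": "A", "viewpoint": "left", "relevance": 0.9},
    {"title": "B", "viewpoint": "left", "relevance": 0.8},
    {"title": "C", "viewpoint": "left", "relevance": 0.7},
    {"title": "D", "viewpoint": "right", "relevance": 0.6},
    {"title": "E", "viewpoint": "center", "relevance": 0.5},
]

print([a["title"] for a in diversify(candidates)])
```

Ranking this pool by relevance alone would return A, B and C, all from one viewpoint. The round-robin pass returns A, D and E instead, one article per viewpoint.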

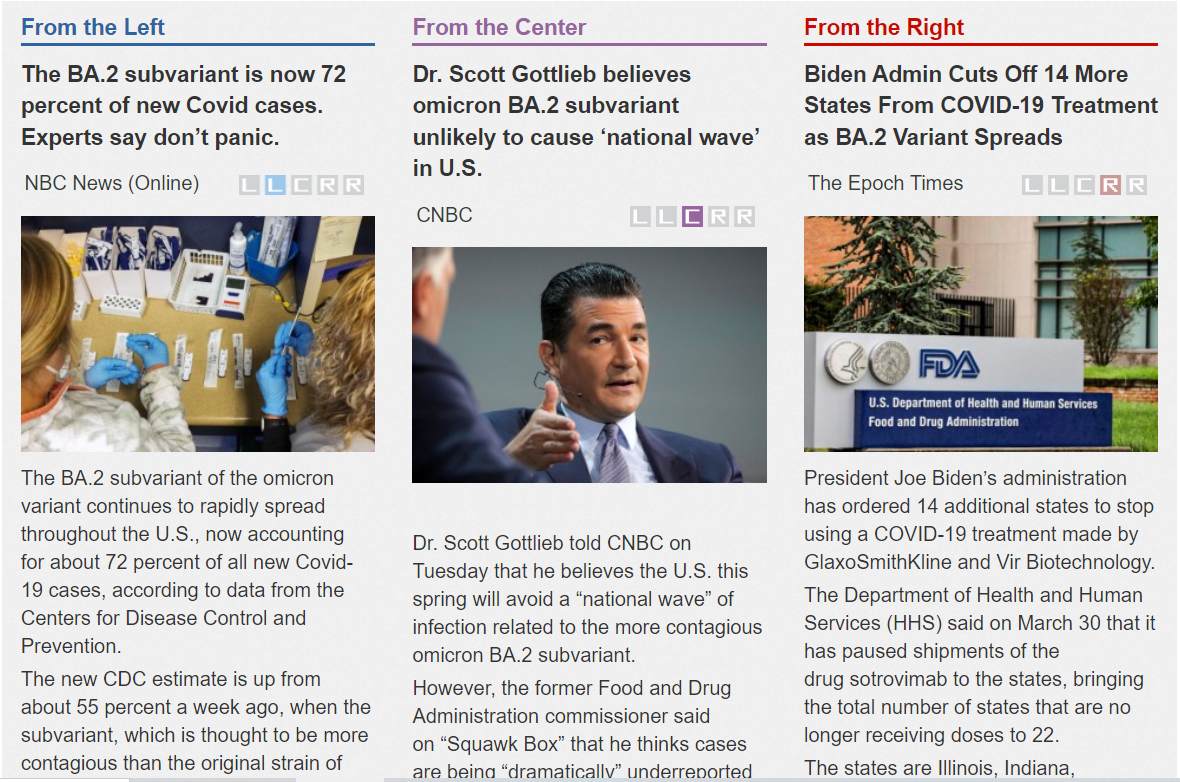

Clearly, Facebook seeks to reduce the filter bubble by improving its algorithm so that users are provided with diverse content. However, technical improvements from social media platforms alone are not enough to solve the problem. In addition to social media companies, some third-party organizations are also aware of the potential dangers of filter bubbles and are taking action. Allsides is a website dedicated to bringing balanced information to the public in order to burst the filter bubble. Allsides does not produce news itself; it gathers coverage of the same story from different media outlets and categorizes it by viewpoint. Through this approach, readers have the opportunity to gain a comprehensive understanding of different perspectives on the same issue, which broadens the range of information they receive (Evangelista, 2012). In addition, Allsides’ classification helps readers recognize the leanings of different media and spot potential filter bubbles, so that Allsides in effect plays a supervisory role.
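The classification approach described above can be sketched in a few lines. This is an illustrative sketch only and does not reflect how Allsides actually rates media: the outlet names, the ratings in `OUTLET_RATING`, and both function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical outlet ratings, invented for illustration.
OUTLET_RATING = {
    "Outlet A": "left",
    "Outlet B": "center",
    "Outlet C": "right",
}
PERSPECTIVES = ("left", "center", "right")


def group_by_rating(stories):
    """Group headlines on one issue by the rating of their outlet."""
    grouped = defaultdict(list)
    for story in stories:
        rating = OUTLET_RATING.get(story["outlet"], "unrated")
        grouped[rating].append(story["headline"])
    return dict(grouped)


def missing_perspectives(reading_history):
    """List the perspectives a reader has not encountered at all."""
    seen = {OUTLET_RATING.get(story["outlet"]) for story in reading_history}
    return [p for p in PERSPECTIVES if p not in seen]


stories = [
    {"outlet": "Outlet A", "headline": "Headline from A"},
    {"outlet": "Outlet C", "headline": "Headline from C"},
    {"outlet": "Outlet A", "headline": "Second headline from A"},
]

print(group_by_rating(stories))
print(missing_perspectives(stories[::2]))  # a reader who only reads Outlet A
```

The first function mirrors the side-by-side presentation of one issue. The second hints at the supervisory role: a reading history that covers only one rating is a possible sign of a filter bubble.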

The two cases above demonstrate how social media companies and third-party organizations, respectively, can reduce the creation of filter bubbles and so relieve the polarization of opinion. The author argues that they point to a possible mechanism for governing filter bubbles: collaboration between media companies, social media platforms and third-party organizations. While media companies and social media platforms improve personalization algorithms to reduce filter bubbles at the source, third-party organizations like Allsides can serve as an effective social oversight mechanism that reports news bias and indicates to users whether bubbles exist. This cooperative mechanism, based on technological improvement and social supervision, may effectively reduce filter bubbles and the possibility of polarization.

Conclusion

In summary, personalized algorithms that help users avoid information overload can also trigger filter bubbles, which can eventually lead to polarization. The controversy among Weibo users over feminist Yang Li shows that the polarization created by bubbles arising from recommendation algorithms has done great harm to cyberspace. The author therefore analyzed the cases of Facebook and Allsides and proposed a mechanism in which media companies and social media platforms cooperate with third-party organizations to manage filter bubbles. This proposal has limits, however. Allsides has formed a relatively complete classification system for mainstream news, but its classification standard is aimed mainly at major news media. For social media, the huge amount of information and the complex mix of content are difficult to classify with the existing system in a way that reveals potential filter bubbles. There is still a long way to go before this kind of cooperation mechanism can truly be realized.

Reference List

Andrejevic, M. (2019). Automated Media. Milton: Routledge.

Bakshy, E., Messing, S., & Adamic, L. A. (2015). Exposure to ideologically diverse news and opinion on Facebook. Science, 348(6239), 1130–1132. https://doi.org/10.1126/science.aaa1160

Dandekar, P., Goel, A., & Lee, D. T. (2013). Biased assimilation, homophily, and the dynamics of polarization. Proceedings of the National Academy of Sciences, 110(15), 5791–5796. https://doi.org/10.1073/pnas.1217220110

Evangelista, B. (2012, August 26). AllSides compiles varied political views. Retrieved from https://www.sfgate.com/business/article/AllSides-compiles-varied-political-views-3815821.php

Flaxman, S., Goel, S., & Rao, J. M. (2016). Filter Bubbles, Echo Chambers, and Online News Consumption. Public Opinion Quarterly, 80(1), 298–320. https://doi.org/10.1093/poq/nfw006

Flew, T. (2021). Regulating Platforms. Cambridge: Polity.

Just, N., & Latzer, M. (2017). Governance by algorithms: Reality construction by algorithmic selection on the Internet. Media, Culture & Society, 39(2), 238–258.

Kitchens, B., Johnson, S., & Gray, P. (2020). Understanding Echo Chambers and Filter Bubbles: The Impact of Social Media on Diversification and Partisan Shifts in News Consumption. MIS Quarterly, 44(4), 1619–1650. https://doi.org/10.25300/MISQ/2020/16371

Li, K. G., Mithas, S., Zhang, Z., & Tam, K. Y. (2019). Does Algorithmic Filtering Create a Filter Bubble? Evidence from Sina Weibo. Academy of Management Proceedings, 2019(1), 14168. https://doi.org/10.5465/AMBPP.2019.14168abstract

Lu, Y. (2021, October 19). Yang Li was complained about being suspected of being an endorsement of Mercedes-Benz. Retrieved from https://baijiahao.baidu.com/s?id=1714055484426505128&wfr=spider&for=pc

Newton, C. (2017, January 25). Facebook ends its effort to personalize Trending Topics. Retrieved from https://www.theverge.com/2017/1/25/14386616/facebook-trending-topics-personalization-publisher-names

Pariser, E. (2011). The Filter Bubble: What the Internet Is Hiding from You. New York: Penguin Press.

Prakash, S. (2016). Filter Bubble: How to burst your filter bubble. International Journal of Engineering and Computer Science, 5(10), 18321-18325.

Solon, O. (2016, November 10). Facebook’s failure: did fake news and polarized politics get Trump elected? The Guardian. Retrieved from https://www.theguardian.com/technology/2016/nov/10/facebook-fake-news-election-conspiracy-theories

Su, S. (2017, April 25). New Test With Related Articles. Facebook. Retrieved from https://about.fb.com/news/2017/04/news-feed-fyi-new-test-with-related-articles/

Wojcieszak, M. E., & Mutz, D. C. (2009). Online Groups and Political Discourse: Do Online Discussion Spaces Facilitate Exposure to Political Disagreement? Journal of Communication, 59(1), 40–56. https://doi.org/10.1111/j.1460-2466.2008.01403.x

Yan, J., Zeng, Q., & Zhang, F. (2021). Summary of Recommendation Algorithm Research. Journal of Physics: Conference Series, 1754(1), 012224. https://doi.org/10.1088/1742-6596/1754/1/012224