Bias is an unfounded negative attitude towards a person or a group, while discrimination is an action that reflects such prejudice. Both reflect an assumed superiority of certain groups over others, and both make most people uncomfortable.

Nowadays, with the development of science and technology, human society has entered the era of big data, and algorithms have become an important technology in many fields. Worryingly, however, bias and discrimination have not disappeared with social and technological progress; they remain hidden in algorithms. Black people's faces have been misidentified as gorillas (Haddad, 2021), and search results about people of colour tend to be negative. These cases show that bias and discrimination in algorithms need to be taken seriously.

Why is discrimination hidden in algorithms?

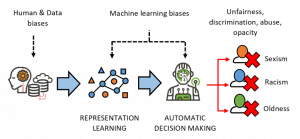

An algorithm is a complete, finite sequence of instructions for solving a problem: given a valid input, it produces a result within a limited amount of time. The causes of discrimination in algorithms can be broadly divided into two categories: current technical defects and the discriminatory ideas of algorithm designers.

Technical defects

Technical defects often arise from the tension between what is common to the majority and what is particular to a few. An algorithm computes its results according to fixed logic specified by its designer, but when it encounters low-probability events or situations that its predefined values do not cover, it may make errors or simply ignore these special cases when producing a result. This leads to discrimination against minority groups.
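To make the mechanism concrete, here is a minimal, hypothetical sketch (not taken from any real system). A single score threshold is fitted to minimise total errors on pooled data in which a majority group A greatly outnumbers a minority group B whose true boundary lies elsewhere. Because the fit is dominated by the majority, the chosen rule is correct for A but systematically wrong for part of B. The data, group names and function names are all invented for illustration.

```python
from collections import defaultdict

def make_data():
    """Hypothetical toy data as (score, true_label, group) tuples."""
    data = []
    for s in range(10):
        # Majority group A: 9 examples per score; true positives start at score 5.
        data += [(s, int(s >= 5), "A")] * 9
        # Minority group B: 1 example per score; true positives start at score 3.
        data.append((s, int(s >= 3), "B"))
    return data

def fit_threshold(data):
    """Choose the single cut-off t (predict 1 if score >= t) with the fewest total errors."""
    def errors(t):
        return sum(int(s >= t) != y for s, y, _ in data)
    return min(range(11), key=errors)

def error_rate_by_group(data, t):
    """Fraction of misclassified examples within each group."""
    wrong, total = defaultdict(int), defaultdict(int)
    for s, y, g in data:
        total[g] += 1
        wrong[g] += int(s >= t) != y
    return {g: wrong[g] / total[g] for g in total}

data = make_data()
t = fit_threshold(data)
print(t)                             # 5: the majority's boundary wins
print(error_rate_by_group(data, t))  # {'A': 0.0, 'B': 0.2}
```

Nothing in the code mentions group membership when making a prediction; the unequal error rates come entirely from the rare cases being outweighed during fitting.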

Subjective prejudice

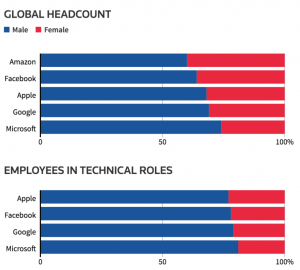

On the other hand, algorithm designers may build their own discriminatory ideas into an algorithm, which reflects the designers' cognitive bias. Algorithmic discrimination of this kind is mainly due to the imbalanced composition of the current group of algorithm designers. Although some minority students graduate from computer-related majors every year, Black and Latino people appear to remain under-represented among those employed in the field.

Photo from: https://sensitivenets.com/what/

In 2016, the Black Girls Code organization announced that it would move into Google’s New York office (Noble, 2018). The aim was to gradually correct the industry's long-standing exclusion of minorities by bringing more of them into it. Admittedly, the discriminatory ideas of some algorithm designers stem from their education and the culture they have long been immersed in, and this is very difficult to correct in a short time. However, part of algorithmic discrimination arises from designers' subjective cognitive bias, which is not a necessary stage of algorithm development. Therefore, algorithmic discrimination has a chance to be eliminated in the future.

The stereotype of Black girls

Many cases of algorithmic discrimination target ethnic minorities. Noble (2018) examined the results returned by Google's search algorithm, and the findings were shocking. When "black girls" was entered into the search bar, the results included pornographic content, including some sexually explicit terms. This reflects a stereotype that Black girls are hypersexual. This algorithmic discrimination can be attributed in part to the lack of Black women among Google's algorithm designers, but it is undeniable that some of it may also reflect discriminatory attitudes that exist in society.

Note: Discriminatory results from a Google search for "black girls"

Photo from: https://time.com/5209144/google-search-engine-algorithm-bias-racism/

Bias in face recognition algorithms

Such cases are not uncommon. In 2015, Google Photos misidentified Black people as gorillas. The algorithm's accuracy was quite high for light-skinned people, but it appears to have performed much worse for dark-skinned people. One team also found that when humans and algorithms work together on face matching, the algorithm's outputs can bias human decisions and thereby affect overall accuracy (Howard et al., 2020).

Gender discrimination in algorithms

In addition, algorithmic discrimination in hiring has also harmed some groups (Todolí-Signes, 2019). In a recruitment algorithm developed by Amazon, reported by Reuters in 2018, applicants were disadvantaged in the screening process because they were women, potentially to the point of being screened out. When the algorithm detected words associated with women in a resume, it reduced the weight given to that resume, or even downgraded it.
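A toy sketch can show how a word such as "women's" might end up penalised without anyone writing an explicit rule. This is not Amazon's system, whose internals were not published; it assumes a naive scorer that weights each token by how much its presence shifted the hire rate in hypothetical historical records. Because past hires in this invented history were mostly men, the token "women's" rarely co-occurs with a hire and receives a negative weight. `HISTORY`, `learn_weights` and `score` are illustrative names only.

```python
from collections import Counter

# Hypothetical historical hiring records: (resume tokens, hired?).
# Past hires skew male, so "women's" never co-occurs with hired=True here.
HISTORY = [
    ({"python", "chess", "captain"}, True),
    ({"python", "captain"}, True),
    ({"java", "chess"}, True),
    ({"python", "women's", "chess", "captain"}, False),
    ({"java", "women's", "captain"}, False),
    ({"java"}, False),
]

def learn_weights(history):
    """Weight each token by how far its presence moves the historical hire rate."""
    base = sum(hired for _, hired in history) / len(history)
    seen, hired_with = Counter(), Counter()
    for tokens, hired in history:
        for tok in tokens:
            seen[tok] += 1
            hired_with[tok] += hired
    return {tok: hired_with[tok] / seen[tok] - base for tok in seen}

def score(resume, weights):
    """Sum the learned weights of the resume's tokens; unknown tokens count as 0."""
    return sum(weights.get(tok, 0.0) for tok in resume)

w = learn_weights(HISTORY)
resume_a = {"python", "chess", "captain"}
resume_b = resume_a | {"women's"}  # identical except for one word
print(w["women's"])                                       # -0.5
print(round(score(resume_a, w) - score(resume_b, w), 3))  # 0.5
```

The two resumes describe the same skills, yet the second scores lower purely because the model has absorbed a historical imbalance.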

Note: Women are discriminated against in the workplace

Photo from: https://www.lever.co/blog/what-are-the-best-ways-to-address-ai-bias-in-recruiting/

Such algorithmic discrimination undoubtedly damages the interests of women, resulting in social injustice and other negative effects (Schroeder, 2021). Apart from a few special jobs, gender should not be a factor in the recruitment process for most positions. Where algorithms are used in workplace recruitment, they should help companies and job seekers work as efficiently as possible and quickly match suitable candidates to positions, rather than reproduce prejudice and discrimination in the process.

Bias in predicting reoffending

Algorithmic discrimination also appears in predicting the likelihood of reoffending. If a Black person and a white person are released from prison at the same time, the algorithm may predict a much higher probability of reoffending for the Black person than for the white person. In mid-2016, an investigation into the COMPAS algorithm used in the United States found such a pattern (Brackey, 2019). This can even undermine judicial fairness, because some people may be arrested or detained on the basis of wrong algorithmic results. It also raises the question of whether the composition of algorithm designers is imbalanced. Would this still happen if the proportion of people of colour among designers increased?
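One common way to express this kind of disparity is to compare false positive rates: among people who did not go on to reoffend, what share were still labelled high risk? The sketch below computes that per group. The records are invented counts chosen only to illustrate the calculation; they are not real COMPAS figures, and `Record` and `false_positive_rate` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Record:
    group: str
    predicted_high_risk: bool  # the risk tool's label
    reoffended: bool           # what actually happened later

def false_positive_rate(records, group):
    """Share of people in `group` who did NOT reoffend but were still labelled high risk."""
    negatives = [r for r in records if r.group == group and not r.reoffended]
    if not negatives:
        raise ValueError(f"no non-reoffending records for group {group!r}")
    return sum(r.predicted_high_risk for r in negatives) / len(negatives)

# Invented illustrative counts, not real COMPAS data.
records = (
    [Record("Black", True, False)] * 4 + [Record("Black", False, False)] * 6
    + [Record("White", True, False)] * 2 + [Record("White", False, False)] * 8
    + [Record("Black", True, True)] * 5 + [Record("White", True, True)] * 5
)
for g in ("Black", "White"):
    print(g, false_positive_rate(records, g))
# Black 0.4
# White 0.2
```

In this toy example, a Black person who never reoffends is twice as likely to be wrongly flagged as a comparable white person, which is exactly the kind of error that can lead to unjustified detention.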

Objectively, algorithmic decision-making means that humans hand the power to make fair choices over to computers. Although a computer algorithm sounds like an objective, data-driven decision-maker, it is still designed by people, so it is difficult to avoid the subjectivity of those who build it.

Impacts of algorithmic discrimination

Algorithmic bias infringes on the interests of individuals or groups

The negative impacts of algorithmic discrimination are foreseeable. The first is that it affects social equity. When some groups are subject to algorithmic bias and discrimination, their interests are often harmed. No one wants to miss out on a job because of algorithmic discrimination, or to be judged high-risk for no reason other than their skin colour. When the interests of individuals and even whole groups are harmed, people are bound to fight for their rights, which may lead to extreme movements and affect social stability.

Algorithmic discrimination affects public trust

Second, algorithmic discrimination may undermine public trust in algorithmic technology and thereby hinder its development. For people without internet or computer knowledge, an algorithm is simply a tool that calculates fairly from vast amounts of data and fixed logic (Burrell, 2016); it has no bias. However, if algorithmic discrimination occurs frequently and has real consequences, the black-box nature, or opacity, of algorithms will arouse public suspicion (Pasquale, 2015). When that suspicion reaches a certain level, the development of algorithmic technology will be hindered. After all, the public hopes that technologies such as algorithms truly serve everyone, rather than becoming tools that a minority or a particular class uses to discriminate against others.

Algorithmic discrimination affects social harmony

In addition, algorithmic discrimination also harms social inclusion and the friendly relationships between groups. When some groups are discriminated against by algorithms, the resulting sense of exclusion from society may affect their emotions and thinking, and may even reinforce the suspicion that the discrimination comes from the majority of society.

Photo from: https://www.vox.com/recode/2020/2/18/21121286/algorithms-bias-discrimination-facial-recognition-transparency

This phenomenon has long been reflected in discrimination rooted in culture and history. Racial discrimination still exists in American society today. Although the government promotes racial equality, Black youths may still be stopped, searched, or questioned on the street. Bias and discrimination in algorithms partly reflect real problems in society and expose hidden tensions among groups (Yang & Dobbie, 2020). Therefore, algorithmic discrimination may lead to estrangement between groups and a loss of mutual trust, which in turn leads to social instability and the separation of groups into isolated communities.

What can we do?

The negative impacts of algorithmic discrimination are predictable, so measures are needed to address them.

Start with data justice

First of all, the problem must be tackled at its root. Its technical causes can be attributed to the quality and representativeness of the collected data and to the omission of minority cases in the algorithm. This requires ensuring the fairness of data as far as possible at the collection stage, so as to reduce the chance that discriminatory and biased information enters the algorithm at the outset.
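One simple check at the data-collection stage is to compare each group's share of the collected sample with its share of the population the algorithm will serve, and to reweight samples so that under-represented groups are not drowned out. The sketch below does this under stated assumptions: the group labels, sample and target shares are hypothetical, and reweighting is only one possible technique, not something the essay prescribes.

```python
from collections import Counter

def representation_weights(groups, target_shares):
    """Per-group sample weights so each group's weighted share matches `target_shares`."""
    counts = Counter(groups)
    n = len(groups)
    weights = {}
    for g, target in target_shares.items():
        if counts[g] == 0:
            # Reweighting cannot invent data for a group that was never collected.
            raise ValueError(f"group {g!r} is missing from the data")
        weights[g] = target / (counts[g] / n)
    return weights

groups = ["A"] * 90 + ["B"] * 10   # hypothetical collected sample
target = {"A": 0.7, "B": 0.3}      # hypothetical population shares
w = representation_weights(groups, target)
print({g: round(v, 2) for g, v in w.items()})  # {'A': 0.78, 'B': 3.0}
```

Each group B example now counts about three times as much during training. The error raised for a completely missing group reflects the limit of this approach: if a group is absent, the fix has to be collecting more data, not reweighting.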

Professional algorithm regulators and government intervention

In addition, dedicated regulators of algorithms may be able to improve the situation. However, the knowledge required to understand algorithms may be too specialized for the general public, so it is very difficult for the public to monitor whether algorithms contain discrimination and bias. If this work were carried out by internet or computer professionals, it might reduce the number of algorithms containing discriminatory information, or prompt the companies involved to make corrections (Ebers & Cantero Gamito, 2021).

Such organizations or companies may develop more rapidly with government support. If the government provides them with data, it also strengthens their credibility with the public. This would help eliminate algorithmic discrimination and support the future development of algorithms.
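As an example of what a professional auditor might check without access to an algorithm's internals, the sketch below compares selection rates across groups using only the algorithm's outputs. It flags any group whose rate falls below 80% of the best-off group's rate, a threshold that echoes the "four-fifths rule" used as a rule of thumb in US employment guidelines. The function names and the screening outcomes are hypothetical, and a ratio below 0.8 is a signal for further investigation rather than proof of discrimination.

```python
def selection_rates(outcomes):
    """outcomes maps group -> list of booleans (True = selected)."""
    return {g: sum(v) / len(v) for g, v in outcomes.items() if v}

def disparate_impact_audit(outcomes, threshold=0.8):
    """Compare each group's selection rate with the highest-rate group.

    Returns group -> (impact ratio, flagged?), where flagged means the ratio
    falls below `threshold` (0.8 corresponds to the four-fifths rule of thumb).
    """
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}

# Hypothetical screening results from an automated hiring tool.
outcomes = {
    "men": [True] * 50 + [False] * 50,
    "women": [True] * 30 + [False] * 70,
}
print(disparate_impact_audit(outcomes))
# {'men': (1.0, False), 'women': (0.6, True)}
```

Because the check needs only inputs and outcomes, it suits the situation described above, where the public cannot inspect the algorithm itself but an independent body could still test it.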

Continuous supplement and improvement

To address the omission of minority cases, we need to keep improving the data and supplementing the algorithm, so that this kind of algorithmic discrimination is gradually eliminated. This is where internet companies must take on social responsibility. The measure requires cooperation between the companies that own the algorithms and the government, so that the gradually improved algorithms can serve society.

Although such corrections will cost more than originally expected, they are likely to receive some public support and, in the long run, will contribute to the development of algorithms.

Gradually correct the subjective bias of algorithm designers and their algorithms

The last measure must address the subjective bias and discrimination of algorithm designers themselves. Such attitudes usually form over a long time rather than overnight, so there are obstacles to eliminating them. However, we can reduce algorithmic discrimination by increasing the proportion of algorithm designers from minority groups, and then gradually correct this phenomenon through their subtle influence and through the influence of the algorithms on the next generation.

Black Girls Code moving into Google’s New York office is one attempt to address algorithmic discrimination. The impact of current measures may not be very large, but it is a good start and provides valuable experience for future measures.

The future of algorithm technology is worth looking forward to

Because of the subjective bias of algorithm designers and omissions in the algorithms themselves, discrimination and bias are hidden in algorithms. The various types of algorithmic discrimination not only harm people but also introduce elements of instability into society. Many groups are committed to solving this problem and have put some methods and measures into practice. Although algorithmic discrimination still exists online, the prospect of eliminating it in the future is worth looking forward to.

Reference List:

- Brackey. (2019). Analysis of Racial Bias in Northpointe’s COMPAS Algorithm. ProQuest Dissertations Publishing.

- Burrell, J. (2016). How the machine “thinks”: Understanding opacity in machine learning algorithms. Big Data & Society, 3(1), 2053951715622512. https://doi.org/10.1177/2053951715622512

- Ebers, M., & Cantero Gamito, M. (2021). Algorithmic governance and governance of algorithms: Legal and ethical challenges (1st ed.). Springer. https://doi.org/10.1007/978-3-030-50559-2

- Haddad. (2021). Confronting the Biased Algorithm: The Danger of Admitting Facial Recognition Technology Results in the Courtroom. Vanderbilt Journal of Entertainment and Technology Law, 23(4), 890–.

- Howard, J. J., Rabbitt, L. R., & Sirotin, Y. B. (2020). Human-algorithm teaming in face recognition: How algorithm outcomes cognitively bias human decision-making. PLoS ONE, 15(8), e0237855. https://doi.org/10.1371/journal.pone.0237855

- Ma, Qin, K., & Zhu, S. (2014). Discrimination Analysis for Predicting Defect-Prone Software Modules. Journal of Applied Mathematics, 2014, 1–14. https://doi.org/10.1155/2014/675368

- Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York University Press.

- Pasquale, F. (2015). The need to know. In The black box society: The secret algorithms that control money and information (pp. 1–18). Harvard University Press.

- Schroeder, J. E. (2021). Reinscribing gender: Social media, algorithms, bias. Journal of Marketing Management, 37(3-4), 376–378. https://doi.org/10.1080/0267257X.2020.1832378

- Todolí-Signes, A. (2019). Algorithms, artificial intelligence and automated decisions concerning workers and the risks of discrimination: The necessary collective governance of data protection. Transfer: European Review of Labour and Research, 25(4), 465–481. https://doi.org/10.1177/1024258919876416

- Yang, C. S., & Dobbie, W. (2020). Equal protection under algorithms: A new statistical and legal framework. Michigan Law Review, 119(2), 291–395.