Online hate speech in today’s society

In an increasingly digitalised society, cyberspace gives people many opportunities for free speech. Encouraged by the many platforms now available, people constantly publish their own content on the Internet. The rapid development of the Internet has brought many benefits, but it also has disadvantages. In the current environment, social media is often used to spread violent messages, hate speech and other harmful ideologies.

Online hate speech is harmful speech by an Internet user directed at a specific group of people on the basis of race, colour, national origin, gender, sexual orientation, nationality, religion or political affiliation (Castaño-Pulgarín et al., 2021; Flew, 2021). The issue of race in hate speech is amplified on social media. The anonymity and freedom of Internet expression have created more opportunities for those who promote racial hate speech to publish their views and biases. Minority populations are constantly subjected to online bullying and threats from hate speech promoters.

As a medium of text and communication, Internet platforms play an essential role in controlling their content and in balancing freedom of expression against hate speech. This blog aims to critically examine how social media platforms govern online hate speech, using Twitter’s governance of racist hate speech around the Black Lives Matter (BLM) hashtag as a case study. It argues that Twitter’s governance of hate speech is mostly neutral: the platform allows users to post content containing potential hate speech, which preserves freedom of speech while bringing the platform large numbers of users and profits.

Firstly, the blog will analyse why hate speech appears on online platforms and social media in general. Secondly, the case study will focus on hate speech in response to the 2020 BLM hashtag, specifically the hate speech toward African Americans that appeared on Twitter after the death of George Floyd. Finally, the blog will analyse the approaches and regulatory measures Twitter adopts when it faces racist hate speech.

Hate speech on social media platforms:

The rapid growth of social media, with its fast-spreading nature, has led to the widespread dissemination of information. Ever-developing social media not only drives freedom of expression across platforms but also gives users constantly updated features for interacting with creators. Liking and sharing cause information to grow and spread like a snowball. The dissemination of information on the Internet is no longer constrained by geography; it spreads globally. In addition, drawn by curiosity and sensational headlines, the public is more readily attracted to disinformation and hate speech, and shares it more often, than positive information (Samsonov, 2021). Given this pattern of information growth, sharing and liking on social media amplify hate speech and other statements.

As mediators, platforms prefer to take a neutral stance when they face pointed questions. This is not only because platforms should guarantee freedom of expression online, but also because platforms built on user-generated content (UGC) stand to profit from fierce conflicts (Comninos, 2013). For example, during the Christchurch mosque shootings, the perpetrator used Facebook Live to broadcast the attack for about 17 minutes. Facebook gained views and users through its Live feature during that time. In such cases, the platform gains large numbers of comments, users and likes, which serve as powerful bargaining chips for attracting advertisers.

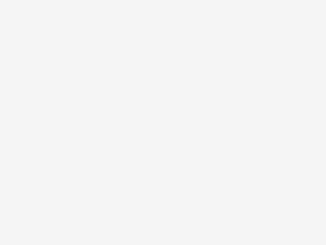

A post under #BLM that uses hate speech and a GIF (Screenshot, 2022)

However, online hate speech presented as freedom of expression harms minority groups. When hate speech appears on social media platforms, the platforms should take appropriate and timely measures to maintain the online environment and protect vulnerable people. Since a precise balance cannot be defined, platforms and companies regulate themselves only by means that benefit them. As a result, the terms and rules introduced by different Internet platforms are vague, and the platforms differ in how they restrict online hate speech, such as racism, and content reflecting different political stances.

Thus, the current management of controversial content is confusing. For example, even though Twitter has stated that hate speech will be banned, insulting and inflammatory posts still appear under Twitter’s BLM hashtags; Reddit boosts toxic subcultures (Chandrasekharan et al., 2017); and users reproduce racism through emoji or GIFs (Matamoros-Fernández, 2018). Drawing on these characteristics of social media platforms, the blog will critically analyse hate speech around BLM in the context of Twitter’s platform governance.

Black Lives Matter tag on Twitter:

Black Lives Matter signs in Boston (Maddie, 2020)

#BLM on TikTok (Screenshot, 2022)

Racist rhetoric surrounding BLM has been widely publicised because of conflicts between police officers and African Americans in the United States. George Floyd, an African American, died after a white police officer knelt on his neck for nearly nine minutes. Before he died, he said that he could not breathe. The murder and the legal action against the police officer responsible further divided the country into BLM and anti-BLM groups.

There is no doubt that Floyd’s untimely death strengthened BLM’s offline and online campaigns. Launched on social media platforms, the online campaign aims to protest and fight against anti-Black racism and police brutality against African Americans (Michaels, 2020; Williams, 2020). TikTok, for example, has 30.9 billion views for #blacklivesmatter (TikTok, n.d.). According to the Pew Research Center, #BlackLivesMatter is used 2.7 million times a day (Monica, Michael, Andrew and Emily, 2020).

Hate speech from white Americans regularly erupts under BLM hashtags. Even White Lives Matter, which emerged quickly after the BLM marches, became a popular topic used to attack people of colour (Park et al., 2021). Similar hate speech is directed at white supremacists and the police. As the hashtag developed, posts on Twitter became more aggressive toward all of these groups, whether African Americans, white supremacists or the police. Twitter attempted to govern the content during this period.

Nevertheless, based on its core principle of free expression, the platform takes a neutral stance and uses the topic to attract user participation. To this day, after many announcements by Twitter banning such hate speech, hate speech targeting people of colour is still apparent. The content of these topics and posts is aimed largely at people of colour and is likely to generate antagonism and incite the public. Even though the platform’s algorithms and other governance measures have limited hate speech in text form, new forms of hate speech in images and memes continue to appear.

Reasons why hate speech around BLM appears on Twitter:

The fast-spreading nature of Twitter expands the engagement and online interaction of users worldwide. People of different races engage in heated discussions about various topics on the network.

Due to the unrestricted freedom of speech and the anonymity of Twitter, bigoted speech can turn into hate speech against vulnerable racial groups. Even when hate speech about people of colour breaks out, the platform, dominated by Western interests, is unwilling to delete it or to stop it immediately from developing (Crosset, Tanner & Campana, 2018). Because Twitter is built on original UGC, it needs many comments and users to sustain particular topics.

When hate speech framed as freedom of expression is posted in BLM topics that defend Black interests, Internet platforms are more willing to use the popularity of the topic and the right to free expression to gain participation from other users. The platform can thus profit from the conflict (e.g. a large number of users attracts advertisers and business). This type of hidden profit often benefits the platform’s development while remaining compliant with the law.

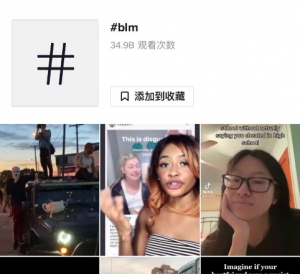

An account locked on Twitter (Twitter Help, n.d.)

Moreover, the public notices the minority groups harmed by hate speech. In response, Twitter has published many regulatory measures focused on the current situation. Under combined public and government pressure and social accountability, Twitter has taken steps in response to hate speech around BLM.

For example, it has issued statements prohibiting racist hate speech and blocked accounts that post prominent or other forms of hate speech (Twitter Help, n.d.); it has also reduced the visibility of hate speech posts, temporarily locked accounts, deleted tweets, and suspended (banned) the users who posted them (Jiménez Durán, 2022).

Twitter also gives the public a role in regulating hate speech. For example, it provides users with tools against hate speech, such as warnings, reporting tools and blocklists, which allow users to block or report hate speech themselves. Twitter’s algorithms and regulation technology are constantly being updated. For example, during the disinformation outbreak, Twitter worked with technology companies to develop an automated detection system that proactively selects content for review by administrators without user reporting (Bartolo, 2021). Machine learning tools such as Google’s Perspective have also been created to detect online hate speech (Siapera, Moreo, & Zhou, 2018).

Twitter has used automated moderation more frequently in its regulation systems. The development of content moderation can also generate some advertising revenue for a profit-oriented platform (Jiménez Durán, 2022). These measures show that Twitter is indeed taking strong action against hate speech to maintain a well-regulated environment for speech on the platform.

Ambiguous definition of content governance and freedom:

Twitter and Memes (Kachalin, 2020)

However, memes on Twitter fall outside the scope of the racist hate speech that is regulated. Platforms’ content governance and algorithms need to recognise multiple forms of content in order to better maintain the overall online environment.

Online hate speech takes four main forms: threats of violence, slander and metaphor, graphic forms and geographic differences (Ditch The Label, n.d.). Racist hate speech is presented mostly in memes and humorous pictures (Ditch The Label, n.d.). On Twitter, “humorous” hate speech in the form of images or memes is treated as safe (Matamoros-Fernández, 2017). The vagueness of Twitter’s free speech standards and policy can be understood through the platform’s tolerance of memes.

Many studies have concluded that, contrary to common assumptions, memes are not necessarily humorous, and that Internet users do not innately associate memes with humour. Dynel and Poppi (2021) showed that non-humorous memes made up a larger proportion than humorous memes in topics supporting BLM. Anti-BLM memes exhibit the ideology of white supremacy. Users thus employ non-humorous memes to move away from the traditional textual approach to hate speech, satirising or ridiculing particular groups in image form.

Even if an insulting meme appears in BLM or other race-related topics, Twitter’s rules and algorithms do not consider it to fall within the scope of hate speech. Where a meme changes the potential meaning of its context, Twitter takes a neutral position and allows the statement to appear (Williams, 2020). It is not considered inflammatory or hateful.

So even though Twitter has clearly announced a ban on hate speech and controls text content, users can use memes containing hate speech to bring two opposing sides into dispute, which increases usage of the platform. Twitter profits from the argument. The limits on the forms that online hate speech can take beyond text are therefore not clearly defined. Even with all the content controls, racism is still taking on new forms in public view.

Conclusion:

Twitter is a platform that encourages freedom of expression among its users. In its rapid distribution of information and user interaction, Twitter embodies the characteristics of social media and seeks to amplify these advantages. In response to the emergence of hate speech under BLM and other hashtags, Twitter has issued public statements reminding users of the prohibition on, and consequences of, posting such content, and has given users the power to report content on the platform. Twitter nonetheless benefits from brief periods of unregulated discussion on topics that attract hate speech, which draw the attention of more users.

However, because of the ambiguity between free speech rights and content control on the platform, Twitter has not been transparent or thorough in addressing hate speech from the beginning. Moreover, as the forms of racist content continue to change, Twitter’s tolerance of memes has allowed yet another type of racist hate speech to emerge.

Reference list:

Castaño-Pulgarín, A., Suárez-Betancur, N., Vega, T., & López, H. (2021). Internet, social media and online hate speech. Systematic review. Aggression and Violent Behavior, 58, 101608. https://doi.org/10.1016/j.avb.2021.101608

Chandrasekharan, E., Pavalanathan, U., Srinivasan, A., Glynn, A., Eisenstein, J., & Gilbert, E. (2017). You Can’t Stay Here: The Efficacy of Reddit’s 2015 Ban Examined Through Hate Speech. Proceedings of the ACM on Human-Computer Interaction, 1(CSCW), Article 31, 1–22.

Comninos, A. (2013). The Role of Social Media and User-Generated Content in Post-Conflict Peacebuilding [Working paper]. World Bank, Washington, DC. Retrieved from https://openknowledge.worldbank.org/handle/10986/23844

Crosset, V., Tanner, S., & Campana, A. (2018). Researching far right groups on Twitter: Methodological challenges 2.0. New Media & Society, 21(4), 939–961.

Ditch The Label. (n.d.). Uncovered: Online Hate Speech in the Covid Era. Retrieved March 30, 2022, from https://www.brandwatch.com/reports/online-hate-speech/view/

Dynel, M., & Poppi, F. I. M. (2021). Fidelis ad mortem: Multimodal discourses and ideologies in Black Lives Matter and Blue Lives Matter (non)humorous memes. Information, Communication & Society, 0(0), 1–27.

Flew, T. (2021). Regulating Platforms. Cambridge: Polity, pp. 91–96.

Haley, Z. (2020). Hate Speech: How to Talk to Your Child About Acts of Violence. Retrieved from Bark: https://www.bark.us/blog/acts-of-violence-hate-speech/

Jiménez Durán, R. (2022). The Economics of Content Moderation: Theory and Experimental Evidence from Hate Speech on Twitter. Social Science Research Network.

Kachalin, P. (2020). How will the new Twitter Rules affect Memes? Retrieved April 05, 2022, from https://news.knowyourmeme.com/news/how-will-the-new-twitter-rules-affect-memes

Maddie, M. (2020). Protesters carrying Black Lives Matter signs at a demonstration against police brutality in Boston. Retrieved from Britannica website: https://www.britannica.com/topic/Black-Lives-Matter

Matamoros-Fernández, A. (2017). Platformed racism: The mediation and circulation of an Australian race-based controversy on Twitter, Facebook and YouTube. Information, Communication & Society, 20(6), 930–946.

Matamoros-Fernández, A. (2018). Inciting Anger through Facebook Reactions in Belgium: The Use of Emoji and Related Vernacular Expressions in Racist Discourse. First Monday, 23(9), 1–20.

Michaels, N. (2020). Posting about BLM Made it a Movement Pushing for Real Change. Pop Culture Intersections, 53, 1–20.

Monica, A., Michael, B., Andrew, P., & Emily, V. (2020). #BlackLivesMatter surges on Twitter after George Floyd’s death. Retrieved from Pew Research Center: https://www.pewresearch.org/fact-tank/2020/06/10/blacklivesmatter-surges-on-twitter-after-george-floyds-death/

Park, J., Francisco, C., Pang, R., Peng, L., & Chi, G. (2021). Exposure to anti-Black Lives Matter movement and obesity of the Black population. Social Science & Medicine, 8, 1–10. https://doi.org/10.1016/j.socscimed.2021.114265

Samsonov, A. (2021). Can social media limit disinformation? Quantitative Social and Management Science Research Group, 1–31.

Siapera, E., Moreo, E., & Zhou, J. (2018). HateTrack: Tracking and monitoring racist speech online. Retrieved from: https://www.ihrec.ie/documents/hatetrack-tracking-and-monitoring-racist-hate-speech-online/

TikTok. (n.d.). #blacklivesmatter. Retrieved March 30, 2022, from https://www.tiktok.com/tag/blacklivesmatter?lang=en

Twitter Help. (n.d.). Twitter’s policy on hateful conduct. Retrieved March 30, 2022, from https://help.twitter.com/en/rules-and-policies/hateful-conduct-policy

Twitter Help. (n.d.). Help with locked or limited account. Retrieved April 05, 2022, from https://help.twitter.com/en/managing-your-account/locked-and-limited-accounts

Williams, A. (2020). Black Memes Matter: #LivingWhileBlack With Baeky and Karen. Social Media + Society, 6(4). https://doi.org/10.1177/2056305120981047